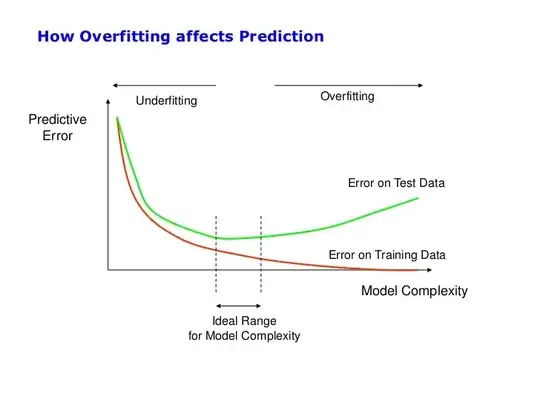

Increasing model complexity leads to over-fitting of the training data; is the inverse also true? Assuming my chosen classifier is able to fit the training set perfectly (i.e., 100% training accuracy), should I then search for the parameters that produce the least complex model that still retains 100% training accuracy? Will this generally give the best generalization to an unseen test set? Or does it depend? Or can we not tell in advance, so that we should use cross-validation (CV) to find the best parameters? This dilemma often comes up when I try to choose the number of hidden nodes for my neural nets.

However, in my own experiments the test error curve is usually shifted much further to the right, as seen in the graph below. Point A is the ideal complexity (minimum test error). Point B is the least complexity at which the training set is no longer underfit. In the first graph, point B lies to the right of point A, whereas in my experiments point A often lies to the right of point B. Is there an explanation for this?
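A minimal sketch of the two selection rules being compared, assuming only NumPy is available. To keep it dependency-free, polynomial degree stands in for the number of hidden nodes (a swapped-in complexity knob, not a neural net). `select_complexity` picks the degree with the lowest k-fold CV error (point A, estimated without touching a test set), while `least_complex_fit` returns the smallest degree whose training MSE is at or below a tolerance `tol` (point B; regression has no exact "100% accuracy", so `tol` is an assumed stand-in). All function names and parameters here are hypothetical.

```python
import numpy as np

def kfold_cv_error(x, y, degree, k=5, seed=0):
    """Mean validation MSE of a degree-`degree` polynomial over k shuffled folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(x)), k)
    errors = []
    for i in range(k):
        val = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        coeffs = np.polyfit(x[train], y[train], degree)  # fit on k-1 folds
        pred = np.polyval(coeffs, x[val])                 # score on held-out fold
        errors.append(np.mean((pred - y[val]) ** 2))
    return float(np.mean(errors))

def select_complexity(x, y, degrees, k=5, seed=0):
    """Point A (estimated): the degree with the lowest mean CV error."""
    scores = {d: kfold_cv_error(x, y, d, k, seed) for d in degrees}
    best = min(scores, key=scores.get)
    return best, scores

def train_mse(x, y, degree):
    """Training MSE when fitting and evaluating on the same data."""
    coeffs = np.polyfit(x, y, degree)
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

def least_complex_fit(x, y, degrees, tol):
    """Point B: smallest degree whose training MSE is <= tol, or None."""
    for d in sorted(degrees):
        if train_mse(x, y, d) <= tol:
            return d
    return None

# Usage on synthetic, noisy data (illustrative only).
rng = np.random.default_rng(1)
x = np.linspace(0.0, 3.0, 60)
y = np.sin(x) + 0.1 * rng.normal(size=x.size)
best_a, cv_scores = select_complexity(x, y, range(1, 9))
point_b = least_complex_fit(x, y, range(1, 9), tol=0.01)
print("CV-selected degree:", best_a, "| least complex 'fit':", point_b)
```

Nothing in the sketch forces point B to land on either side of point A; where each ends up depends on the noise level, the sample size, and the chosen `tol`, which is why the CV estimate is the quantity usually trusted for model selection.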