I have an issue that I would like some feedback on:

In conventional machine learning, we try to maximise the accuracy (and other metrics) of our models, and we are often satisfied with anything above 80% accuracy.

Problem

However, what if the requirement is instead:

For any new input, if you're absolutely certain of predicting it correctly, then predict. Otherwise, fall back to conventional systems.
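This requirement amounts to classification with a reject option: predict only when the model's top probability clears a threshold, otherwise defer. A minimal sketch, assuming the model outputs a NumPy array of shape `(n_samples, n_classes)`; `predict_or_defer` and the `FALLBACK = -1` sentinel are hypothetical names for illustration:

```python
import numpy as np

FALLBACK = -1  # hypothetical sentinel meaning "defer to the conventional system"

def predict_or_defer(probs: np.ndarray, threshold: float) -> np.ndarray:
    """Return the arg-max label for rows whose top probability is at least
    `threshold`, and FALLBACK for every other row."""
    top_prob = probs.max(axis=1)
    labels = probs.argmax(axis=1)
    return np.where(top_prob >= threshold, labels, FALLBACK)

probs = np.array([[0.995, 0.005],
                  [0.60, 0.40],
                  [0.005, 0.995]])
print(predict_or_defer(probs, threshold=0.99))  # [ 0 -1  1]
```

The whole difficulty then moves into choosing the threshold, and into whether the probabilities deserve to be trusted at all.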

Intuitively, one would use the probability scores as a measure of 'confidence' in the prediction. But what if the probability scores are wrong? E.g., the model predicts label A with 99% probability, but the true label is B.
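Whether a 99% score can be trusted can only be checked empirically, on labelled data the model never saw during training. The sketch below assumes a held-out probability array of shape `(n_samples, n_classes)` plus integer labels; `confident_error_rate` and `pick_threshold` are hypothetical helpers, and the toy numbers are made up. It measures coverage and error rate among predictions above a threshold, then picks the lowest threshold (highest coverage) that meets a target error rate:

```python
import numpy as np

def confident_error_rate(probs, y_true, threshold):
    """Return (coverage, error rate) over held-out rows whose top
    probability is at least `threshold`."""
    accepted = probs.max(axis=1) >= threshold
    if not accepted.any():
        return 0.0, float("nan")  # nothing accepted: error rate undefined
    wrong = probs.argmax(axis=1)[accepted] != y_true[accepted]
    return float(accepted.mean()), float(wrong.mean())

def pick_threshold(probs, y_true, max_error=0.0):
    """Lowest candidate threshold (highest coverage) whose accepted
    predictions have error rate <= max_error on the held-out set."""
    for t in np.unique(probs.max(axis=1)):  # ascending candidate thresholds
        _, err = confident_error_rate(probs, y_true, t)
        if err <= max_error:
            return float(t)
    return None  # no threshold meets the target on this data

# Toy held-out data (made up for illustration)
probs = np.array([[0.99, 0.01], [0.97, 0.03], [0.20, 0.80],
                  [0.55, 0.45], [0.40, 0.60]])
y_true = np.array([0, 1, 1, 0, 0])
print(confident_error_rate(probs, y_true, 0.95))  # (0.4, 0.5)
print(pick_threshold(probs, y_true, max_error=0.0))  # 0.99
```

Here the second row is 97% confident and wrong, so only the 0.99 threshold reaches zero observed errors. With few accepted samples the estimate is noisy: observing zero errors in n accepted predictions still leaves a 95% upper bound of roughly 3/n on the true error rate (the "rule of three"), so "absolutely certain" can only be approximated.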

Is there a way to tackle such a problem? I suspect there is something wrong with the data, but we'll never have perfect data, so is there a way to solve this with the existing data?

Attempts

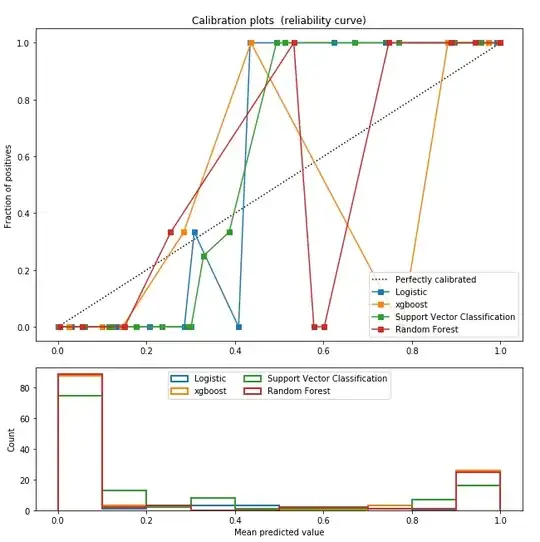

I have tried calibrating the probabilities, but they do not seem to help much. I obtain the following calibration reliability curve:
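A reliability curve checks whether predicted probabilities match observed frequencies bin by bin. The NumPy sketch below assumes equal-width bins and the binary case (positive-class probabilities); for multiclass, one could pass the top-class confidence together with a 0/1 "was correct" array instead. `reliability_curve` and `expected_calibration_error` are hypothetical helper names, and the data are made up:

```python
import numpy as np

def reliability_curve(y_true, prob_pos, n_bins=10):
    """For each non-empty equal-width bin of predicted probability, return
    (mean predicted probability, observed positive rate, sample count)."""
    interior_edges = np.linspace(0.0, 1.0, n_bins + 1)[1:-1]
    bin_ids = np.digitize(prob_pos, interior_edges)  # bin index 0 .. n_bins-1
    rows = []
    for b in range(n_bins):
        in_bin = bin_ids == b
        if in_bin.any():
            rows.append((float(prob_pos[in_bin].mean()),
                         float(y_true[in_bin].mean()),
                         int(in_bin.sum())))
    return rows

def expected_calibration_error(y_true, prob_pos, n_bins=10):
    """Count-weighted average |predicted - observed| gap across bins."""
    rows = reliability_curve(y_true, prob_pos, n_bins)
    total = sum(count for _, _, count in rows)
    return sum(count * abs(pred - obs) for pred, obs, count in rows) / total

# Ten predictions at 0.9, nine of them correct (made-up numbers)
prob_pos = np.full(10, 0.9)
y_true = np.array([1] * 9 + [0])
print(reliability_curve(y_true, prob_pos))
print(expected_calibration_error(y_true, prob_pos))
```

The toy example suggests why calibration alone may not meet the requirement: ten predictions at 0.9 with nine correct are perfectly calibrated (ECE ≈ 0), yet one of them is still a confident error. Calibration makes "90%" mean "right about 90% of the time", not "never wrong".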

I have also tried ensembling, but again, it doesn't solve the problem: all the classifiers are confident when they predict the wrong answer.
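Ensembling can be framed as abstention on disagreement: predict only when every member agrees and the averaged probability is high. A sketch assuming member probabilities are stacked into an array of shape `(n_models, n_samples, n_classes)`; `ensemble_predict_or_defer` is a hypothetical name and the numbers are made up:

```python
import numpy as np

def ensemble_predict_or_defer(member_probs, threshold=0.99, fallback=-1):
    """Predict only where every member picks the same label and the averaged
    top probability reaches `threshold`; otherwise return `fallback`."""
    member_labels = member_probs.argmax(axis=2)           # (n_models, n_samples)
    unanimous = (member_labels == member_labels[0]).all(axis=0)
    mean_probs = member_probs.mean(axis=0)                # (n_samples, n_classes)
    confident = mean_probs.max(axis=1) >= threshold
    return np.where(unanimous & confident, mean_probs.argmax(axis=1), fallback)

model_a = [[0.995, 0.005], [0.995, 0.005], [0.3, 0.7]]
model_b = [[0.999, 0.001], [0.2, 0.8], [0.4, 0.6]]
members = np.array([model_a, model_b])
print(ensemble_predict_or_defer(members, threshold=0.99))  # [ 0 -1 -1]
print(ensemble_predict_or_defer(members, threshold=0.6))   # [ 0 -1  1]
```

As described above, this only helps when members err on different inputs; members trained on the same data and features tend to share blind spots, in which case unanimity adds little information.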

This has really stumped me for the past few days. I would appreciate your insights on this :)