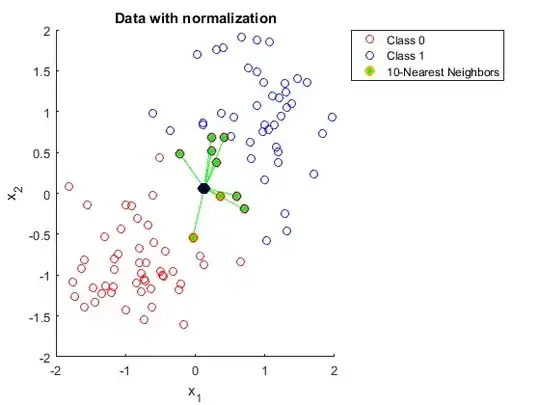

Could someone please explain why you need to normalize data when using k-nearest neighbors?

I've tried to look this up, but I still can't seem to understand it.

I found the following link:

https://discuss.analyticsvidhya.com/t/why-it-is-necessary-to-normalize-in-knn/2715

But in this explanation, I don't understand why a larger range in one of the features affects the predictions.
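
To make the question concrete, here is a minimal sketch of the situation as I read it from that explanation. Everything in it is an assumption for illustration only: the two features (age and income), the three points, and the feature ranges used for min-max scaling are all made up. It compares plain Euclidean distances before and after scaling.

```python
import math

# Hypothetical points: (age in years, income in dollars). Made-up values.
query = (30, 50_000)
a = (60, 50_500)  # very different age, almost the same income
b = (31, 51_000)  # almost the same age, slightly different income

# Assumed (min, max) range of each feature, also made up.
ranges = [(18, 90), (20_000, 200_000)]


def euclidean(p, q):
    """Plain Euclidean distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(p, q)))


def min_max_scale(p, ranges):
    """Rescale each feature to [0, 1] using its assumed (min, max) range."""
    return tuple((v - lo) / (hi - lo) for v, (lo, hi) in zip(p, ranges))


# Distances on the raw features: the income difference dominates the sum.
raw_a = euclidean(query, a)
raw_b = euclidean(query, b)

# Distances after scaling: both features contribute on the same scale.
q_s, a_s, b_s = (min_max_scale(p, ranges) for p in (query, a, b))
scaled_a = euclidean(q_s, a_s)
scaled_b = euclidean(q_s, b_s)

print("nearest on raw features:   ", "a" if raw_a < raw_b else "b")
print("nearest on scaled features:", "a" if scaled_a < scaled_b else "b")
```

On the raw values, a 30-year age gap adds only 30² to the squared distance, while a 1,000-dollar income gap adds 1,000², so the nearest neighbor is decided almost entirely by income. After scaling, the nearest neighbor flips from `a` to `b`.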