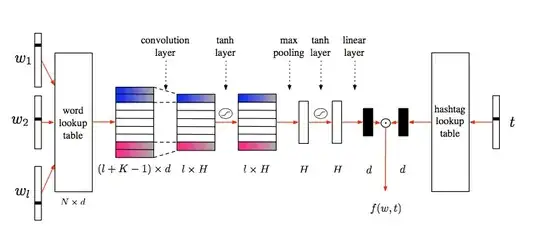

I have been reading a Facebook paper (read here) and am confused about certain features of the architecture. I do not understand why they use a tanh layer, then a max-pooling layer, and then another tanh layer. I understand what each layer does, but not why they are arranged in this sequence. Wouldn't the output be basically the same as with just a max-pooling layer followed by a tanh layer?

1 Answer

Each tanh layer includes its own weight matrix, so it applies a linear transformation before the nonlinearity. Max pooling selects elements from different "channels." So the order of operations in the tanh-max-tanh pipeline is:

- linear transformation

- $\text{tanh}$

- select elements according to max pooling, discarding the rest

- linear transformation

- $\text{tanh}$

That is, "down-selecting" to the max-pooled elements means that the second linear transformation combines a different set of values than the single linear transformation would in the alternative max pool-tanh case.
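To make the difference concrete, here is a minimal sketch in plain Python. The weights `W1` and `W2`, the input `X`, and the helper names are all made up for illustration and are not taken from the paper; biases are omitted for brevity. It compares the tanh-max-tanh ordering with pooling the raw inputs first, and also checks that tanh on its own (with no weights) commutes with max because it is monotonically increasing.

```python
import math

def linear(W, x):
    """Apply a weight matrix W (list of rows) to a vector x; bias omitted."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def tanh(x):
    """Element-wise tanh of a vector."""
    return [math.tanh(v) for v in x]

def max_pool(H):
    """Element-wise max across positions: one value per feature (channel)."""
    return [max(column) for column in zip(*H)]

# Toy input: two positions, two features each (made-up numbers).
X = [[1.0, -1.0],
     [-1.0, 1.0]]

W1 = [[1.0, -1.0]]  # hypothetical weights of the first tanh layer (one unit)
W2 = [[1.0]]        # hypothetical weights of the second tanh layer

# tanh-max-tanh: linear -> tanh at every position, pool, then linear -> tanh.
hidden = [tanh(linear(W1, x)) for x in X]
tanh_max_tanh = tanh(linear(W2, max_pool(hidden)))

# max-tanh: pool the raw inputs first, then a single linear -> tanh.
max_tanh = tanh(linear(W1, max_pool(X)))

print(tanh_max_tanh, max_tanh)

# With no weights in between, tanh is monotonic, so it commutes with max.
column = [0.3, -2.0, 1.5]
commutes = math.isclose(max(math.tanh(v) for v in column),
                        math.tanh(max(column)))
print(commutes)
```

In this toy case the first ordering gives roughly 0.75 while the second gives exactly 0.0, because pooling the raw inputs yields `[1.0, 1.0]`, which `W1` maps to zero. The two orderings coincide only when nothing but a monotonic function sits between the input and the pooling.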

Finally, remember that the linear combinations can reverse the sign of the inputs! This is important because if you flip the sign of the inputs to the max pooling, a different element becomes the maximum, so you'll get different results.
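A small illustration of the sign-flip point, again with made-up numbers and a hypothetical weight of -1 standing in for a learned linear transformation:

```python
values = [0.2, -0.9, 0.5]  # made-up activations at three positions

def argmax(xs):
    """Index of the largest element."""
    return max(range(len(xs)), key=lambda i: xs[i])

# A hypothetical weight of -1 reverses the sign of every input.
flipped = [-1.0 * v for v in values]

picked_before = argmax(values)   # position max pooling keeps originally
picked_after = argmax(flipped)   # position it keeps after the sign flip

print(picked_before, picked_after)
print(max(flipped) == -min(values))
```

After the flip, max pooling keeps the position that originally held the smallest value, so the layers downstream see information from a different part of the input.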

Sycorax
