Sorry if this is a really basic question; I am new to the field.

My question builds on another question, "Subscript notation in expectations", but that question does not address pipes or commas in the subscript.

The question "Conditional expectation subscript notation" is very similar, but its author essentially just asks whether their math is correct, so it doesn't contain the kind of explanation I'm hoping for.

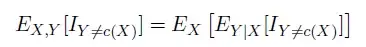

So, on to my question. In the equation below, how should the commas and pipes in the subscripts be interpreted? I am guessing that a pipe indicates a conditional expectation, but I'm not sure what to make of the commas.

For context, the equation concerns classification error. $I$ is an indicator function and $c$ is a classifier. I believe that $I$ and its subscript would be read as "an indicator function that is true when the classifier's prediction $c(X)$ is not equal to $Y$, i.e. when the classifier is wrong."
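To make my reading of the indicator concrete, here is a minimal Python sketch. It assumes a hypothetical one-dimensional threshold classifier and made-up toy data (neither comes from the paper). Averaging $I_{c(X) \neq Y}$ over observed $(X, Y)$ pairs gives the empirical classification error, which is a sample estimate of the expectation of that indicator.

```python
from typing import Callable, Sequence


def misclassified(c: Callable[[float], int], x: float, y: int) -> int:
    """Indicator I_{c(x) != y}: 1 if the classifier is wrong on (x, y), else 0."""
    return 1 if c(x) != y else 0


def empirical_error(c: Callable[[float], int],
                    xs: Sequence[float], ys: Sequence[int]) -> float:
    """Average of the indicator over (x, y) pairs, i.e. the fraction misclassified."""
    if len(xs) != len(ys) or len(xs) == 0:
        raise ValueError("xs and ys must be non-empty and the same length")
    return sum(misclassified(c, x, y) for x, y in zip(xs, ys)) / len(xs)


# Hypothetical classifier and toy data, for illustration only.
def threshold_classifier(x: float) -> int:
    return 1 if x > 0.5 else 0


xs = [0.1, 0.4, 0.6, 0.9]
ys = [0, 1, 1, 0]

# Wrong on (0.4, 1) and (0.9, 0), so 2 of 4 pairs are misclassified.
print(empirical_error(threshold_classifier, xs, ys))  # 0.5
```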

It is taken from the ML paper "Training Highly Multiclass Classifiers" by Gupta, Bengio, and Weston: http://jmlr.org/papers/v15/gupta14a.html (bottom of page 1463).