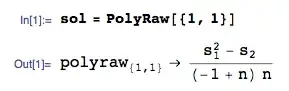

I've seen a few similar threads, but I don't believe any of them specifically answers the following question:

For simple linear or multiple linear regression, if your dependent variable is a percentage, are any assumptions violated? I know that Y should be continuous, but does it also technically have to be unbounded? I've never seen this listed as one of the assumptions, though I understand how a bounded dependent variable can cause specific issues.

In my case, I'm doing a multiple regression project for school where the dependent variable is the percentage of obese schoolchildren. Should I use a logit transformation or beta regression because Y is bounded?
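To make concrete what I mean by the logit option, here is a minimal sketch using only NumPy. The data are synthetic and invented purely for illustration (they are not my school data), and the helper names `logit`, `inv_logit`, `ols_fit` and `ols_predict` are my own. It fits ordinary least squares once on the raw percentage and once on the logit of the proportion, then compares predictions far outside the observed range of the predictor.

```python
import numpy as np

def logit(p):
    """Map a proportion in (0, 1) to the whole real line."""
    p = np.asarray(p, dtype=float)
    return np.log(p / (1.0 - p))

def inv_logit(z):
    """Map a real number back to a proportion in (0, 1)."""
    z = np.asarray(z, dtype=float)
    return 1.0 / (1.0 + np.exp(-z))

def ols_fit(X, y):
    """Least-squares coefficients with an intercept column prepended."""
    X1 = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return beta

def ols_predict(beta, X):
    """Predictions from coefficients returned by ols_fit."""
    X1 = np.column_stack([np.ones(len(X)), X])
    return X1 @ beta

# Synthetic data (an assumption for illustration): one predictor x and a
# percentage outcome centred near 20%, away from the 0 and 100 boundaries.
rng = np.random.default_rng(0)
x = rng.normal(size=200)
pct_obese = 100 * inv_logit(-1.4 + 0.3 * x + rng.normal(scale=0.2, size=200))

# Option 1: ordinary regression on the raw percentage.
beta_raw = ols_fit(x, pct_obese)

# Option 2: regression on the logit of the proportion.
beta_logit = ols_fit(x, logit(pct_obese / 100))

# Compare predictions at extreme predictor values.
x_new = np.array([-10.0, 0.0, 10.0])
pred_raw = ols_predict(beta_raw, x_new)                       # can leave [0, 100]
pred_logit = 100 * inv_logit(ols_predict(beta_logit, x_new))  # stays in (0, 100)

print("raw-scale predictions:  ", pred_raw)
print("logit-scale predictions:", pred_logit)
```

In this sketch the two fits should give similar predictions near the centre of the data and differ only at extreme predictor values, which is part of why I'm unsure whether the transformation is needed in my case.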

In response to a comment: the kernel density plot for Y (`pct_obese`) is shown in the attached image. There doesn't seem to be bunching at the boundaries; rather, the bulk of the data hovers around 20%.