Let $Y_{ij} \stackrel{d}{=} N(\mu_i, \sigma^2)$ for $i\in \{1,\ldots,n\}, j\in\{1,2\}$. Also assume $Y_{i1}$ independant of $Y_{i2}$. The parameter of interest is $\sigma^2$.

Setting up the loglikelihood and deriving the mle's one finds: $$\hat \mu_i =\dfrac{Y_{i1}+Y_{i2}}{2} \qquad \hat{\sigma^2} = \dfrac{1}{4n}\sum_{i}(Y_{i1}-Y_{i2})^2$$

One can easily verify that this estimator is biased, because $$E[\hat{\sigma^2}] = \dfrac{1}{4n}\sum_i E[(Y_{i1}-Y_{i2})^2] = \dfrac{\sigma^2}{2}$$ (since $Y_{i1}-Y_{i2}\stackrel{d}{=}N(0,2\sigma^2)$

I have done all the derivations, but I don't really see what the problem is. This bias is easily resolved by considering a new estimator $\tilde{\sigma^2} = 2\hat{\sigma^2}$.

In all literature I've consulted the bias above is used to consider a transformed variable $V_i = \frac{Y_{i1}-Y_{i2}}{\sqrt{2}}$ which is distributed as $N(0,\sigma^2)$ and removes the nuisance parameters. This results in an unbiased estimator for $\sigma^2$ ($\hat{\sigma^2} = \frac{\sum_i v_i^2}{n}$) with greater variance. (loss of information)

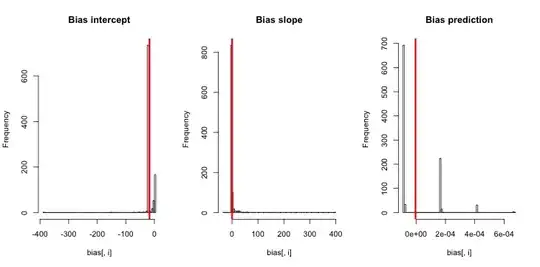

The figure below shows a simulation study using $\mu_i \stackrel{d}{=} N(0,2)$ and $sigma^2 =1$

Question

Could someone explain the 'problem' in the Neyman-Scott problem? The bias doesn't seem like a real problem since $2\cdot \hat{\sigma^2}$ resolves the issue, without having to consider the proposed transformation. This unbiased estimator is exactly the same as the marginal mle.