In linear regression, the degrees of freedom of the residuals is:

$$ \mathit{df} = n - k^*$$

Where $k^*$ is the number of parameters you're estimating, INCLUDING an intercept. (The residual vector lies in an $(n - k^*)$-dimensional linear subspace.)

If you include an intercept term in a regression and $k$ refers to the number of regressors not including the intercept, then $k^* = k + 1$.
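A sketch of why the residual vector lives in an $(n - k^*)$-dimensional space, assuming the $n \times k^*$ design matrix $X$ (intercept column included) has full column rank. The symbols $X$, $H$, $\hat\beta$ and $e$ are my own notation here:

$$ e = y - X\hat\beta = (I - H)\,y, \qquad H = X(X^\top X)^{-1}X^\top $$

$$ \operatorname{tr}(I - H) = n - \operatorname{tr}\!\big((X^\top X)^{-1}X^\top X\big) = n - k^* $$

Since $I - H$ is a projection matrix, its rank equals its trace, so the residuals are confined to a subspace of dimension $n - k^*$.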

Notes:

- How $k$ is defined varies across statistics texts: some count the intercept term in $k$ and some don't.

- My notation of $k^*$ isn't standard.

Examples:

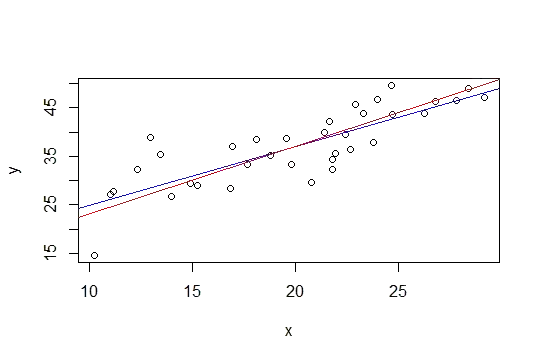

Simple linear regression:

In the simplest model of linear regression you are estimating two parameters:

$$ y_i = b_0 + b_1 x_i + \epsilon_i$$

People often refer to this as $k=1$. Hence we're estimating $k^* = k + 1 = 2$ parameters. The residual degrees of freedom is $n-2$.
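One way to see where the two lost degrees of freedom go, writing $\hat b_0, \hat b_1$ for the least squares estimates and $e_i = y_i - \hat b_0 - \hat b_1 x_i$ for the residuals (my notation): the least squares conditions force the residuals to satisfy two linear constraints,

$$ \sum_{i=1}^{n} e_i = 0, \qquad \sum_{i=1}^{n} x_i e_i = 0 $$

As long as the $x_i$ are not all equal, these constraints are independent, so only $n - 2$ of the $n$ residuals are free to vary.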

Your textbook example:

You have 3 regressors (bp, type, age) and an intercept term. You're estimating 4 parameters and the residual degrees of freedom is $n - 4$.
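If you want to check the count numerically, here is a minimal sketch in Python with NumPy. The data are synthetic random numbers, not your textbook's data, and the helper name `residual_df` is hypothetical. It also assumes each of the three regressors enters as a single numeric column (a categorical `type` with more than two levels would add more columns and change the count).

```python
import numpy as np


def residual_df(X: np.ndarray) -> int:
    """Residual degrees of freedom n - k*, taking k* as the rank of X.

    X is the design matrix with the intercept column already included.
    """
    n = X.shape[0]
    k_star = np.linalg.matrix_rank(X)
    return int(n - k_star)


rng = np.random.default_rng(0)
n = 20

# Synthetic stand-ins for three numeric regressors (assumption, not real data).
regressors = rng.normal(size=(n, 3))
X = np.column_stack([np.ones(n), regressors])  # intercept + 3 regressors
y = rng.normal(size=n)

# Ordinary least squares fit and residuals.
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
residuals = y - X @ beta_hat

df = residual_df(X)
print(f"n = {n}, parameters = {X.shape[1]}, residual df = {df}")

# The residuals are orthogonal to every column of X: one constraint per parameter.
print(np.allclose(X.T @ residuals, 0.0, atol=1e-8))
```

Using the rank of `X` rather than its column count is a deliberate choice in this sketch: the two agree when the columns are linearly independent, which is the case the formula $n - k^*$ assumes.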