I tried to add a new dimension to the discussion and make it more general. Please excuse me if was too rudimentary.

A regression model is a formal means of expressing the two essential ingredients of a statistical relation:

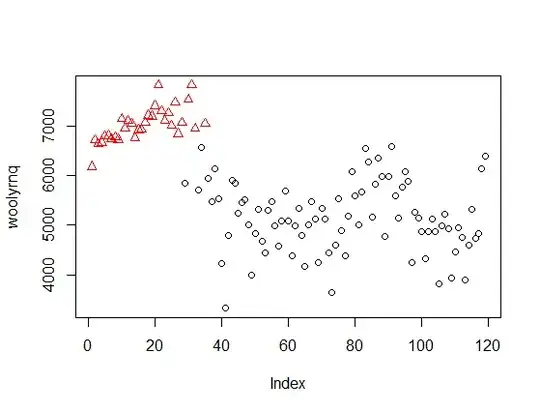

- A tendency of the response variable $Y$ to vary with the predictor variable $X$ in a systematic fashion.

- A scattering of points around the curve of statistical relationship.

How do we get a handle on the response variable $Y$?

By postulating that:

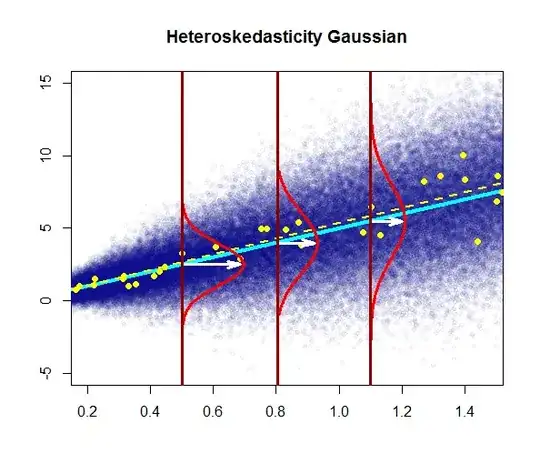

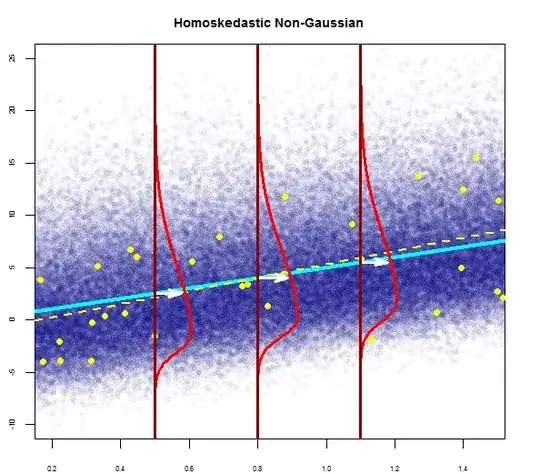

There is a probability distribution of $Y$ for each level of $X$.

The means of these probability distributions vary in some systematic fashion with $X$.

Regression models may differ in the form of the regression function (linear, curvilinear), in the shape of the probability distributions of $Y$ (symmetrical, skewed), and in other ways.

Whatever the variation, the concept of a probability distribution of $Y$ for any given $X$ is the formal counterpart to the empirical scatter in a statistical relation.

Similarly, the regression curve, which describes the relation between the means of the probability distributions of $Y$ and the level of $X$, is the counterpart to the general tendency of $Y$ to vary with $X$ systematically in a statistical relation.

Source : Applied Linear Statistical Models, KNNL

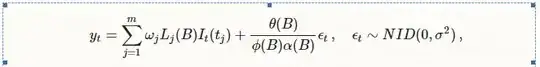

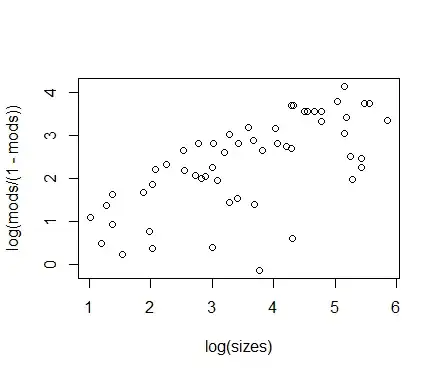

In Normal Error Regression model we try to estimate the conditional distribution of mean of $Y$ given $X$ that is written like below:

$$Y_i = \beta_0\ + \beta_1X_i + \epsilon$$

where:

$Y_i$ is the observed response

$X_i$ is a known constant, the level of the predictor variable

$\beta_0\\$ and $\beta_1\\$ are parameters

$\epsilon\\$ are independent $N(O,\sigma^2)$

$i$ = 1, ... ,n

So, to estimate $E(Y|X)$ we need to estimate the three parameters which are: $\beta_0\\$, $\beta_1\\$ and $\sigma^2$. We can find that by taking the partial derivative of the likelihood function w.r.t. $\beta_0\\$, $\beta_1\\$ and $\sigma^2$ and equating them to zero. This becomes relatively easy under the assumption of normality.

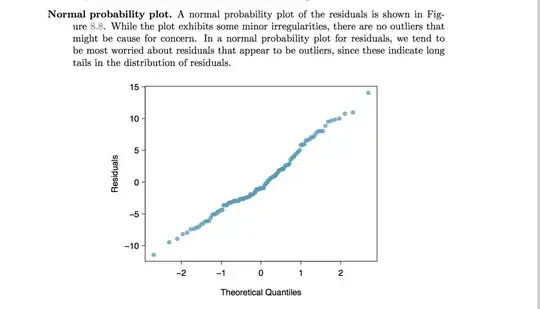

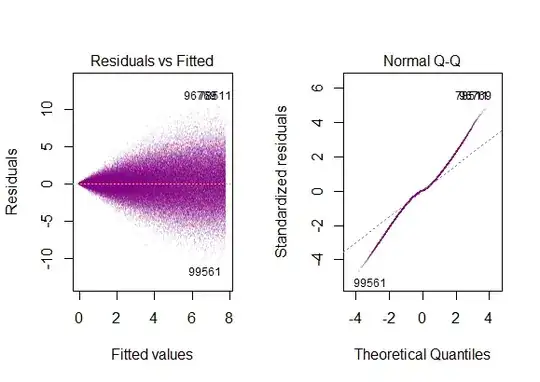

the residuals of the model are nearly normal,

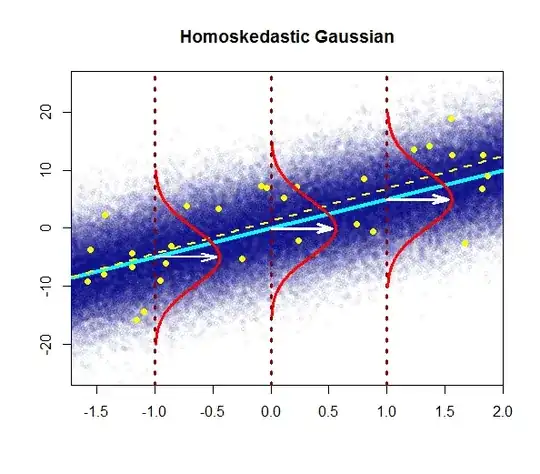

the variability of the residuals is nearly constant

the residuals are independent, and

each variable is linearly related to the outcome.

How are 1 and 2 different?

Coming to the question

The first and second assumptions as stated by you are two parts of the same assumption of normality with zero mean and constant variance. I think the question should be posed as what are the implications of the two assumptions for a normal error regression model rather than the difference between the two assumptions. I say that because it seems like comparing apples to oranges because you are trying to find a difference between assumptions over the distribution of a scatter of points and assumptions over its variability. Variability is a property of a distribution. So I will try to answer more relevant question of the implications of the two assumptions.

Under the assumption of normality the maximum likelihood estimators(MLEs) are the same as the least squares estimators and the MLEs enjoy the property of being UMVUE which means they have minimum variance among all estimators.

Assumption of homoskedasticity lets one set up the interval estimates for the parameters $\beta_0\\$ and $\beta_1\\$and make significance tests. $t$-test is used to check for statistical significance which is robust to minor deviations from normality.