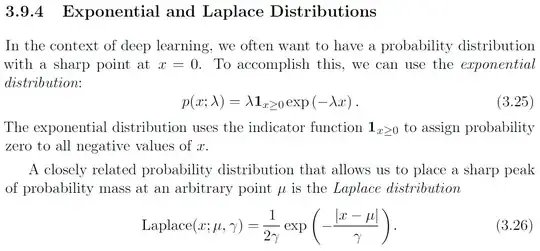

I am reading Ian Goodfellow's book about deep learning, and when it introduces the exponential distribution, it says "In the context of deep learning, we often want to have a probability distribution with a sharp point at x=0." I don't understand why a probability distribution should have this property. Does it help to train the model?

-

Neither density is differentiable at the cusp point ($0$ for the first, $\mu$ for the second). You can see the sharpness from a plot. Why this is useful in deep learning, I have no idea. I can't really help you there. But also, I take issue with the author's use of the word "mass." – Taylor Apr 26 '17 at 03:57

-

@Taylor "mass" is standard terminology in probability. – nth Apr 26 '17 at 04:38

-

@Taylor I think "mass" is a typo. The correct word should be "density", because the Laplace distribution is a continuous probability distribution. – apepkuss Sep 11 '17 at 15:48

-

Cf. the relationship between the lasso and pinning some coefficient estimates at exactly 0. – Sycorax Sep 11 '17 at 15:52

-

In what context is the distribution used? – Miguel Oct 30 '17 at 17:47

1 Answer

These two distributions are connected to deep learning via regularisation. In deep learning we are often concerned with regularising the parameters of a neural network, because neural networks tend to overfit and we want to improve the model's ability to generalise to new data.

From a Bayesian perspective, fitting a regularised model can be interpreted as computing the maximum a posteriori (MAP) estimate given a specific prior distribution over the weights $w_i$. In particular,

- the $L^2$ norm penalty (a.k.a. weight decay) corresponds to a Gaussian prior on the weights $w$, and

- the $L^1$ norm corresponds to an isotropic Laplace prior over the weights $w$.
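To make the correspondence concrete, here is a short derivation. It assumes i.i.d. zero-mean priors on each of the $n$ weights, Gaussian with variance $\sigma^2$ or Laplace with scale $b$ (these symbols are introduced here for illustration):

$$
\begin{aligned}
\hat{w}_{\text{MAP}} &= \arg\max_{w}\; \log p(w \mid X, y) = \arg\min_{w}\; \bigl[-\log p(y \mid X, w) - \log p(w)\bigr],\\[4pt]
p(w_i) = \tfrac{1}{\sqrt{2\pi\sigma^2}}\exp\!\Bigl(-\tfrac{w_i^2}{2\sigma^2}\Bigr) &\;\Longrightarrow\; -\log p(w) = \tfrac{1}{2\sigma^2}\,\lVert w\rVert_2^2 + \tfrac{n}{2}\log(2\pi\sigma^2),\\[4pt]
p(w_i) = \tfrac{1}{2b}\exp\!\Bigl(-\tfrac{|w_i|}{b}\Bigr) &\;\Longrightarrow\; -\log p(w) = \tfrac{1}{b}\,\lVert w\rVert_1 + n\log(2b).
\end{aligned}
$$

The additive constants do not depend on $w$, so they do not change the minimiser; what remains is the usual $L^2$ or $L^1$ penalty. Note also that the gradient of the Laplace penalty, $\operatorname{sign}(w_i)/b$, keeps magnitude $1/b$ however small $w_i$ becomes, whereas the Gaussian penalty's gradient $w_i/\sigma^2$ vanishes as $w_i \to 0$. This is one way to see how the sharp point at zero can push weights all the way to zero.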

The $L^1$ norm (a.k.a. the Laplace prior on weights), by virtue of its sharp peak at zero, encourages sparsity (many exact zeros) in $w$ for reasons explained here: Why L1 norm for sparse models. This type of regularisation can be quite desirable. The connection between the Laplace distribution and the $L^1$ norm is explained in more detail here: Why is Lasso penalty equivalent to the double exponential (Laplace) prior?
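The sparsity effect can be seen in the simplest possible setting. The sketch below assumes a single weight $w$, one observation $z$ with a unit-variance Gaussian likelihood, and a penalty strength `lam`; the function names `map_l1` and `map_l2` are hypothetical. Under these assumptions the MAP estimate with a Laplace prior is the closed-form soft-thresholding solution, while the Gaussian prior gives uniform shrinkage:

```python
import math


def map_l1(z: float, lam: float) -> float:
    """Minimiser of 0.5*(w - z)**2 + lam*|w|
    (Gaussian likelihood + Laplace prior): soft-thresholding."""
    if abs(z) <= lam:
        return 0.0  # inside the threshold the estimate is exactly zero
    return math.copysign(abs(z) - lam, z)  # otherwise shift towards zero by lam


def map_l2(z: float, lam: float) -> float:
    """Minimiser of 0.5*(w - z)**2 + 0.5*lam*w**2
    (Gaussian likelihood + Gaussian prior): uniform shrinkage."""
    return z / (1.0 + lam)  # scaled towards zero, never exactly zero for z != 0


observations = [-2.0, -0.3, 0.05, 0.4, 1.5]
lam = 0.5
l1 = [map_l1(z, lam) for z in observations]
l2 = [map_l2(z, lam) for z in observations]

for z, a, b in zip(observations, l1, l2):
    print(f"z={z:+.2f}  L1/Laplace: {a:+.3f}  L2/Gaussian: {b:+.3f}")
```

With `lam = 0.5`, the Laplace/$L^1$ estimate is exactly zero for every observation with $|z| \le 0.5$, while the Gaussian/$L^2$ estimate only rescales each observation by $1/1.5$. Proximal-gradient methods such as ISTA apply this same soft-thresholding step coordinate-wise in higher dimensions.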

Most of what I have mentioned here is discussed in more detail in the same "Deep Learning" book in section 5.6.1 and section 7.1.2.
