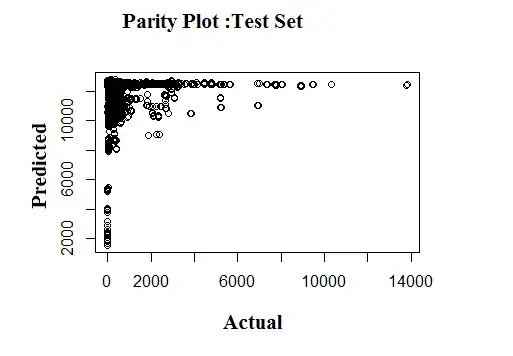

I am using Random Forest to predict a target variable from about 20 independent variables in a database of 40,000 entries. As you can see from the parity plots of the test set, I have severe overfitting and probably other problems. The overestimation of small target values in the training set's parity plot suggests (I think) that there is an imbalance and an outlier effect. The many test-set points lying on a perfectly horizontal line also suggest that my variables do not uniquely characterize the system. How can I tackle these problems? I can extract more and more variables (the 20 independent variables are not fixed). How should I approach variable extraction? Should I change the modeling approach, for example by tuning parameters? Any systematic guideline or technical keywords to look into would be appreciated.

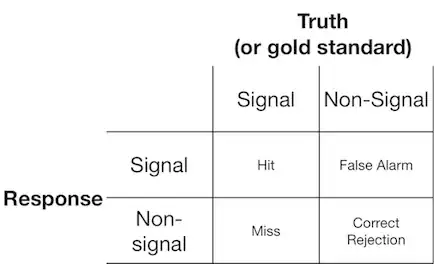

You are likely identifying at least one of the problems correctly: there seems to be an imbalance, although it is hard to see how bad it is. A density plot (or a histogram of the actual data) would be a better visualization here; for all we know, it could be 39.9K zeros ;)

I would try treating it as a classification problem (2- or 3-class if needed), with and without adjustments for class imbalance, to see how much that affects the OOB error rates. This Chen et al. report provides a nice overview of approaches for dealing with unbalanced designs. In my experience with randomForest, `sampsize = c(x, y)`, where x and y are the numbers of cases drawn from each of the two classes (both set to the size of the minority class), works better than class weights.
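The two suggestions above (look at the target's distribution, then treat the task as classification with balanced per-tree sampling) can be sketched in Python. This is an illustration under assumptions, not the answerer's code: the answer refers to R's randomForest, where `sampsize = c(n, n)` draws n cases from each class for every tree. scikit-learn has no per-class `sampsize`, so the sketch hand-rolls the same idea with an ensemble of decision trees. The helper names (`target_histogram`, `to_classes`, `BalancedForest`) and the bin thresholds are hypothetical.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier


def target_histogram(y, bins=20):
    """Counts per bin of the target: a quick check for a pile-up of (near-)zero values."""
    counts, edges = np.histogram(y, bins=bins)
    return counts, edges


def to_classes(y, thresholds):
    """Bin a continuous target into ordered classes 0..len(thresholds).

    `thresholds` is a hypothetical choice; e.g. [0.0] puts y <= 0 in class 0
    and y > 0 in class 1.
    """
    return np.digitize(y, thresholds, right=True)


class BalancedForest:
    """Each tree is grown on a bootstrap with the same number of cases per class,
    mimicking randomForest's sampsize = c(n, n) with n = minority-class size."""

    def __init__(self, n_trees=200, max_features="sqrt", random_state=0):
        self.n_trees = n_trees
        self.max_features = max_features
        self.rng = np.random.default_rng(random_state)
        self.trees = []

    def fit(self, X, y):
        X, y = np.asarray(X), np.asarray(y)
        self.classes_ = np.unique(y)
        idx_by_class = [np.flatnonzero(y == c) for c in self.classes_]
        n_per_class = min(len(ix) for ix in idx_by_class)  # minority-class size
        self.trees = []
        for _ in range(self.n_trees):
            # Draw n_per_class cases with replacement from every class.
            sample = np.concatenate(
                [self.rng.choice(ix, n_per_class, replace=True) for ix in idx_by_class]
            )
            tree = DecisionTreeClassifier(
                max_features=self.max_features,
                random_state=int(self.rng.integers(2**31 - 1)),
            )
            tree.fit(X[sample], y[sample])
            self.trees.append(tree)
        return self

    def predict(self, X):
        X = np.asarray(X)
        votes = np.stack([t.predict(X) for t in self.trees])  # (n_trees, n_samples)
        counts = np.stack([(votes == c).sum(axis=0) for c in self.classes_])
        return self.classes_[counts.argmax(axis=0)]  # majority vote; ties go to the lower class
```

To compare with and without the adjustment, one could fit `BalancedForest` and a plain `sklearn.ensemble.RandomForestClassifier` on the same training split and compare per-class recall on held-out data (this hand-rolled version does not track OOB error). `RandomForestClassifier(class_weight="balanced_subsample")` is the class-weight alternative the answer compares against.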

The effect of outliers is easy to check by removing them, although it does not look like they would affect that OE line. More on testing for outlier importance here.
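A minimal sketch of the remove-and-compare check, in Python with scikit-learn. The answer does not say how to flag outliers; Tukey's IQR fences on the target (k = 1.5) are an assumption here, as are the helper names `iqr_mask` and `outlier_effect`. Both models are scored on the same, unfiltered test set, so any difference reflects only what the training outliers do to the fit.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error


def iqr_mask(y, k=1.5):
    """True for points inside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, q3 = np.percentile(y, [25, 75])
    iqr = q3 - q1
    return (y >= q1 - k * iqr) & (y <= q3 + k * iqr)


def outlier_effect(X_train, y_train, X_test, y_test, k=1.5, n_trees=300, random_state=0):
    """Test-set MAE of a forest trained with and without target outliers."""
    X_train, y_train = np.asarray(X_train), np.asarray(y_train)
    keep = iqr_mask(y_train, k)
    fits = {
        "all": (X_train, y_train),
        "no_outliers": (X_train[keep], y_train[keep]),
    }
    results = {}
    for name, (Xt, yt) in fits.items():
        rf = RandomForestRegressor(n_estimators=n_trees, random_state=random_state)
        rf.fit(Xt, yt)
        # Same test set for both fits, so the two MAEs are directly comparable.
        results[name] = mean_absolute_error(y_test, rf.predict(X_test))
    results["n_removed"] = int((~keep).sum())
    return results
```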

It is unlikely to be an overfitting problem (as long as you use a sufficient ntree), and in any case, extracting more predictors is unlikely to cure overfitting on the test data. Also, the suggested approach to extracting predictors runs somewhat contrary to the point of RF: it seems more reasonable to start with the fullest set of all reasonable predictors and, if need be, weed out those that seem to reduce the fit.
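The ntree caveat and the "start full, then weed out" advice can be checked with a sketch like the one below (Python, scikit-learn; helper names hypothetical). It grows one forest incrementally with `warm_start` and records the OOB R² at each size: once the curve flattens, more trees will not help. The training R² is included only for contrast, because a random forest nearly memorizes its training data, so the training-set fit says little about overfitting; the OOB or test score is the honest estimate. Pruning candidates are flagged with permutation importance on held-out data, using a "mean minus two standard deviations at or below zero" rule that is an arbitrary choice, not something the answer prescribes.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance


def oob_curve(X, y, tree_counts=(100, 200, 400, 800), random_state=0):
    """Grow one forest incrementally; return it and (n_trees, train R^2, OOB R^2) rows."""
    rf = RandomForestRegressor(warm_start=True, oob_score=True, random_state=random_state)
    rows = []
    for n in tree_counts:
        rf.set_params(n_estimators=n)
        rf.fit(X, y)  # with warm_start, only the additional trees are grown
        rows.append((n, rf.score(X, y), rf.oob_score_))
    return rf, rows


def weak_predictors(rf, X_val, y_val, n_repeats=10, random_state=0):
    """Column indices whose permutation does not clearly lower held-out R^2."""
    res = permutation_importance(
        rf, X_val, y_val, n_repeats=n_repeats, random_state=random_state
    )
    # Candidates for removal: importance not clearly above zero (mean - 2*std <= 0).
    return np.flatnonzero(res.importances_mean - 2 * res.importances_std <= 0)
```

Dropping flagged columns one at a time and re-checking the OOB or test score keeps the process consistent with the advice above: remove only what demonstrably does not help.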
