I would like to know how one goes about implementing softmax in a neural network. I know that softmax is the exponential of each element divided by the sum of the exponentials over the whole Y vector, and that it is applied at the output layer. Does this mean I apply the softmax function to the vector after the processing in the hidden layer? If yes, what does softmax actually do? Isn't it just like multiplying the vector by a scale factor?


Check https://stats.stackexchange.com/questions/79454/softmax-layer-in-a-neural-network?rq=1 – luchonacho Apr 17 '17 at 07:42

1 Answer

Softmax is applied to the output layer, and its application introduces a non-linear activation. It is not strictly necessary to apply it: for instance, the logits (the preactivation values, $z_j =\mathbf w_j^\top \mathbf x$) could be used directly to reach a classification decision.

What is the point then? From an interpretative standpoint, softmax yields positive values that add up to one, normalizing the output so that it can be read as a probability mass function over the classes. Softmax provides a way to spread the values of the output neuronal layer.
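To make this concrete for the question of how to implement it, here is a minimal sketch using only the Python standard library. The logits are made-up numbers, and subtracting the maximum before exponentiating is a common numerical-stability trick that I am adding, not something required by the definition.

```python
import math

def softmax(logits):
    """Return the softmax of a list of logits as a list of probabilities."""
    # Subtracting the max leaves the result unchanged but avoids overflow in exp.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, -1.0]  # hypothetical output-layer preactivations
probs = softmax(logits)

print(probs)       # all entries are positive
print(sum(probs))  # they add up to one (up to rounding)

# The ratio of two outputs is exp(z_0 - z_1), not z_0 / z_1,
# so softmax is not a plain rescaling of the input vector.
print(probs[0] / probs[1], logits[0] / logits[1])
```

So in a network you would compute the logits from the last hidden layer and then pass that vector through a function like `softmax` above.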

Softmax has a nice derivative with respect to the preactivation values of the output layer $(z_j)$ (logits): $\small{\frac{\partial}{\partial( \mathbf{w}_i^\top \mathbf x)}}\sigma(j)=\sigma(j)\left(\delta_{ij}-\sigma(i)\right)$.

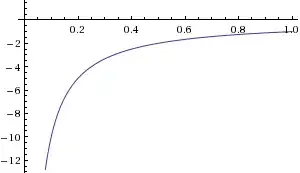
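If you want to convince yourself of the derivative formula above, a quick check against finite differences is one way to do it. This is only a sketch: the helper names (`softmax_jacobian`, `numeric_jacobian`) and the test logits are my own choices for illustration.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_jacobian(logits):
    """jac[j][i] = d sigma(j) / d z_i = sigma(j) * (delta_ij - sigma(i))."""
    s = softmax(logits)
    n = len(s)
    return [[s[j] * ((1.0 if i == j else 0.0) - s[i]) for i in range(n)]
            for j in range(n)]

def numeric_jacobian(logits, eps=1e-6):
    """Central finite differences, used only to check the analytic formula."""
    n = len(logits)
    jac = [[0.0] * n for _ in range(n)]
    for i in range(n):
        up = list(logits)
        up[i] += eps
        down = list(logits)
        down[i] -= eps
        s_up, s_down = softmax(up), softmax(down)
        for j in range(n):
            jac[j][i] = (s_up[j] - s_down[j]) / (2 * eps)
    return jac

z = [2.0, 1.0, -1.0]  # hypothetical logits
analytic = softmax_jacobian(z)
numeric = numeric_jacobian(z)
max_err = max(abs(analytic[j][i] - numeric[j][i])
              for j in range(len(z)) for i in range(len(z)))
print(max_err)  # should be tiny if the formula is right
```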

Further, the natural cost function for softmax is the negative log-likelihood (cross-entropy), $\small C =-\displaystyle \sum_k \delta_{kt} \log \sigma(k)= -\log \sigma(t) = -\log \left(\text{softmax}(t)\right)$, where $t$ is the index of the target class. Its derivative with respect to the activated output values is $\frac{\partial \,C}{\partial\,\sigma(i)}=-\frac{\delta_{it}}{\sigma(t)}$, providing a very steep gradient in cost when the activated output for the target class is very far from $1$. This gradient, which allows the weights to be adjusted throughout the training phase, would simply not be there if we didn't apply the softmax activation to the logits - we would be using the mean squared error cost function.
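To get a feel for how steep this gets, here is a small sketch that evaluates the cost $-\log \sigma(t)$ and its derivative $-1/\sigma(t)$ at a few made-up values of the target-class output (the function names are mine):

```python
import math

def cost(sigma_t):
    """Negative log-likelihood of the target class: C = -log sigma(t)."""
    return -math.log(sigma_t)

def cost_grad(sigma_t):
    """dC / d sigma(t) = -1 / sigma(t)."""
    return -1.0 / sigma_t

# Hypothetical activated outputs for the target class, from good to bad.
for sigma_t in (0.99, 0.5, 0.1, 0.01):
    print(sigma_t, cost(sigma_t), cost_grad(sigma_t))
```

The magnitude of the derivative grows without bound as the output for the correct class approaches zero.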

Combining these two derivatives, and applying the chain rule

$$\small \frac{\partial C}{\partial z_i}=\frac{\partial C}{\partial(\mathbf{w}_i^\top \mathbf x)}=\sum_k \frac{\partial C}{\partial \sigma(k)}\frac{\partial \sigma(k)}{\partial z_i}$$

...results in a very simple and practical derivative, $\frac{\partial}{\partial z_i}\left(-\log \sigma(t)\right) =\sigma(i) - \delta_{it}$, used in backpropagation during training. This derivative is never more than $1$ or less than $-1$, and it gets small when the activated output is close to the right answer.
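As a sanity check on the combined result, the sketch below compares "softmax minus one-hot target" against a finite-difference gradient of the cost. The logits and the target index are made up, and computing the cost via log-sum-exp is just a numerically convenient choice on my part.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target):
    """C = -log sigma(target), computed as log-sum-exp minus the target logit."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def grad_logits(logits, target):
    """dC/dz_i = sigma(i) - delta_it, i.e. softmax minus the one-hot target."""
    s = softmax(logits)
    return [s[i] - (1.0 if i == target else 0.0) for i in range(len(s))]

z = [2.0, 1.0, -1.0]  # hypothetical logits
t = 1                 # hypothetical index of the correct class
g = grad_logits(z, t)

# Central finite differences on the cost, used only as a check.
eps = 1e-6
numeric = []
for i in range(len(z)):
    up = list(z)
    up[i] += eps
    down = list(z)
    down[i] -= eps
    numeric.append((cross_entropy(up, t) - cross_entropy(down, t)) / (2 * eps))

print(g)
print(numeric)
```

Every component of `g` lies between $-1$ and $1$, and only the target component is negative, which is what pushes the target logit up during gradient descent.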

References:

The softmax output function [Neural Networks for Machine Learning] by Geoffrey Hinton

Coursera NN Course by Geoffrey Hinton - assignment exercise

Neural networks [2.2] and [2.3] : Training neural networks - loss function Hugo Larochelle's
