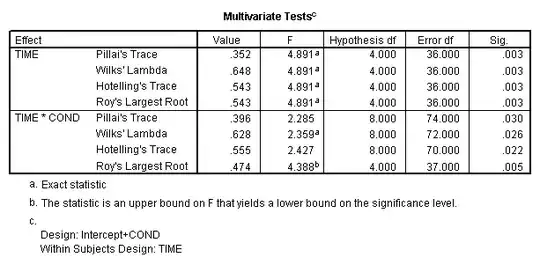

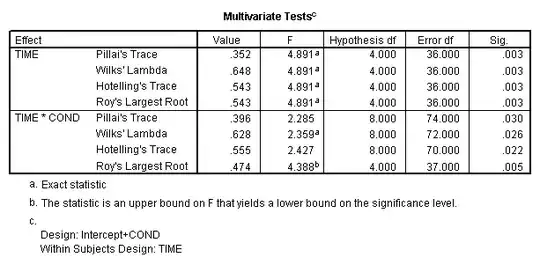

As the direction of the arrows in the image above indicates, independence implies mean independence, which in turn implies zero correlation. The converse statements are not true: zero correlation does not imply mean independence, which in turn does not imply independence.

Intuitively, independence of u and x means that the conditional density function of u given x is the same for every value of x. Mean independence is less restrictive because it constrains only a one-number summary of u at each level of x. To be more exact, mean independence of u from x means that the conditional mean of u, that is, the average of u weighted by the conditional density function of u given x, is the same constant for every value of x. So the conditional density function of u given x may differ across values of x while its mean stays the same at every level of x. That is exactly the case in which u and x are not independent but u is mean independent of x.
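To make this concrete, here is a small simulation sketch. The setup is my own illustration, not part of the question: I assume x is uniform on [1, 3] and build u = x*e with e standard normal and independent of x, so that E(u|x)=0 for every x while Var(u|x)=x^2. The helper `binned_stats` is a hypothetical name for a function that approximates conditional moments by grouping x into bins.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000

# Assumed data-generating process (illustrative only):
# e is independent of x, and u = x * e, so E(u|x) = 0 but Var(u|x) = x^2.
x = rng.uniform(1.0, 3.0, size=n)
e = rng.standard_normal(n)
u = x * e


def binned_stats(x, u, edges):
    """Approximate E(u|x) and Var(u|x) by averaging u within bins of x."""
    idx = np.digitize(x, edges[1:-1])  # bin index 0 .. len(edges) - 2
    n_bins = len(edges) - 1
    means = np.array([u[idx == k].mean() for k in range(n_bins)])
    variances = np.array([u[idx == k].var() for k in range(n_bins)])
    return means, variances


edges = np.linspace(1.0, 3.0, 5)  # four equal-width bins on [1, 3]
means, variances = binned_stats(x, u, edges)

print("bin means of u:    ", np.round(means, 3))
print("bin variances of u:", np.round(variances, 3))
```

The bin means should all sit near zero, while the bin variances grow with x. The conditional distribution of u clearly changes with x, so u and x are not independent, yet the one-number summary E(u|x) is the same everywhere.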

You ask "Why can't we simply say that u is independent of x? Is it possible to have u completely independent of x?" The answers to your questions are 1) Because mean independence suffices for our purposes, and 2) Yes, but we wouldn't want to do that. Let me expand on each of these.

The context of the assumption you reference, E(u|x)=E(u), is that of Assumptions for Simple Regression, and the u stands for the error term, or equivalently the factors that affect our dependent variable y, that are not captured by x. When combined with the assumption E(u)=0, a trivial one as long as an intercept is included in the regression, we obtain that E(u|x)=0. We care about this result because it implies that the population regression line is given by the conditional mean of y:

E(y|x)=β0 + β1*x.
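For completeness, here is the short derivation behind that line. I am assuming the usual simple regression model y = β0 + β1*x + u, which is not written out above, so treat the model equation as my assumption. The second step uses linearity of conditional expectation (x is known once we condition on it), the third uses mean independence, and the last uses E(u)=0.

```latex
\begin{aligned}
E(y \mid x) &= E(\beta_0 + \beta_1 x + u \mid x) \\
            &= \beta_0 + \beta_1 x + E(u \mid x) \\
            &= \beta_0 + \beta_1 x + E(u) \\
            &= \beta_0 + \beta_1 x .
\end{aligned}
```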

If we were conducting a randomized controlled experiment, we would make sure to assign the values of x randomly, which would make x and u independent, which would then imply the weaker condition of mean independence, which would in turn imply zero correlation. In a sense, in a randomized controlled experiment, we get the independence of x and u for free. In contrast, in observational data we must assume how the unobserved factors, u, are related in the population to the factor we control for, x.

We could assume that x and u are independent, but that is too strong an assumption. It would be equivalent to assuming that, for each level of x, the conditional probability density function of u given x is exactly the same and equal to the marginal probability density function of u. By assuming that, we would be assuming that all the moments of the distribution of u are exactly the same for each level of x, and in particular the first and second moments. Given E(u|x)=0, a constant second moment is precisely the assumption of homoscedasticity, which says that the variance of u does not depend on the value of x:

Var(u|x)=σ².

Therefore, if we were to assume that x and u were independent, we'd be assuming homoscedasticity, and a lot more (think of the moments higher than 2). However, homoscedasticity, for one, is not as crucial an assumption, since it has no bearing on the unbiasedness of the OLS coefficient estimators, so we'd want it as a separate assumption that we may or may not want to make. We wouldn't want this "nice to have" assumption mixed in with the most vital assumption of OLS, E(u|x)=E(u).
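A quick Monte Carlo sketch can illustrate that unbiasedness does not need homoscedasticity. Everything here is an assumption of mine for illustration: the true coefficients β0=1 and β1=2, x uniform on [1, 3], and an error u = x*e that is mean independent of x but has variance x^2.

```python
import numpy as np

rng = np.random.default_rng(1)
beta0, beta1 = 1.0, 2.0  # assumed true coefficients (illustrative)
n, reps = 200, 2000      # sample size per replication, number of replications

slopes = np.empty(reps)
for r in range(reps):
    x = rng.uniform(1.0, 3.0, size=n)
    u = x * rng.standard_normal(n)  # E(u|x) = 0, Var(u|x) = x^2
    y = beta0 + beta1 * x + u
    # np.polyfit returns coefficients from highest degree down: [slope, intercept]
    slope, intercept = np.polyfit(x, y, 1)
    slopes[r] = slope

mean_slope = slopes.mean()
print("average OLS slope over replications:", round(mean_slope, 3))
```

The average of the estimated slopes should land close to the assumed β1, even though the error variance changes with x. What heteroscedasticity does affect is the usual formula for the standard errors, which this sketch does not examine.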

At the other extreme, we could assume that x and u are uncorrelated. In fact, together with E(u)=0, that suffices for deriving the OLS estimates. However, it would not be sufficient to conclude that the population regression line is the conditional mean of y, E(y|x)=β0 + β1*x.
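Here is a sketch of that last point, again under assumptions I am making up for illustration: x is standard normal and u = x^2 - 1, so that E(u)=0 and Cov(x,u)=E(x^3)=0, but E(u|x)=x^2 - 1 is far from constant. The coefficients β0=1 and β1=2 are likewise hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 500_000
beta0, beta1 = 1.0, 2.0  # assumed true coefficients (illustrative)

x = rng.standard_normal(n)
u = x**2 - 1.0           # uncorrelated with x, but E(u|x) = x^2 - 1
y = beta0 + beta1 * x + u

# OLS fit of y on x: np.polyfit returns [slope, intercept] for degree 1
slope, intercept = np.polyfit(x, y, 1)
corr_xu = np.corrcoef(x, u)[0, 1]

# Compare the average of y for x close to 2 with the line beta0 + beta1 * 2
near_two = np.abs(x - 2.0) < 0.1
cond_mean_y = y[near_two].mean()
line_at_two = beta0 + beta1 * 2.0

print("sample corr(x, u):", round(corr_xu, 4))
print("OLS slope, intercept:", round(slope, 3), round(intercept, 3))
print("mean of y near x=2:", round(cond_mean_y, 3), "vs line:", line_at_two)
```

OLS still recovers values close to the assumed β0 and β1, because zero correlation is all the estimator needs. But the average of y near x=2 sits well above the line, so β0 + β1*x is not E(y|x) here. Only mean independence closes that gap.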

Good lecture notes that I used for inspiration: http://statweb.stanford.edu/~adembo/math-136/Orthogonality_note.pdf