I need to predict the impact of a set of node failures in a network, based on 2 features: the fraction of failed nodes and a measure of their network centrality.

Failure of less important nodes will have low to no repercussions, while failure of important nodes can bring down the whole network.

The predicted output should be a number in the range [0, 1], where 0 is "no damage", and "1" is "failure of all nodes". 0 is inclusive since I use it to cover the case "no damage" when the fraction of failed nodes is 0.

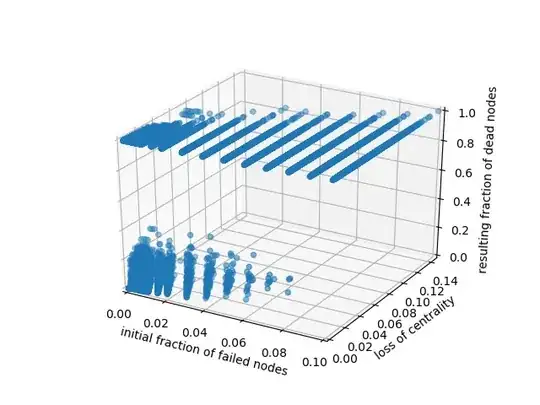

Here's an example mapping 2 features (x and y axes) to the actual simulation result (z axis). I plotted the scatter and the projection of the points. It looks like something that can be learned, right?

I'm using multivariate linear regression in scikit-learn to train a predictor. I take my training set, pick the 2 features I want, use them to make a 4th degree polynomial, which I then standardize and use to train my model.

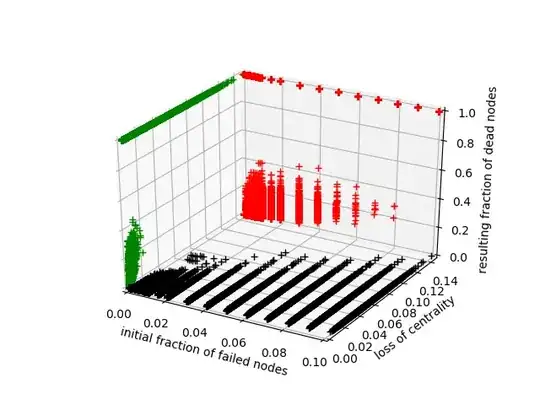

The problem is, for some combinations of my 2 feature values, the predictor outputs negative values or values greater than 1. I can post a picture if needed.

I've tried changing the degree of the polynomial, using regularization (lasso, ridge and ElasticNet) and applying normalization. Nothing completely fixes the problem, especially for predictions >1.

Is linear regression the wrong tool for predicting values in a range?

Any suggestion for a drop-in replacement?

I'm tempted to try logistic regression, but it does not sound like the right tool, since it's for classification, and it outputs probabilities, while my values have a different meaning (not a "chance" but a degree of damage).