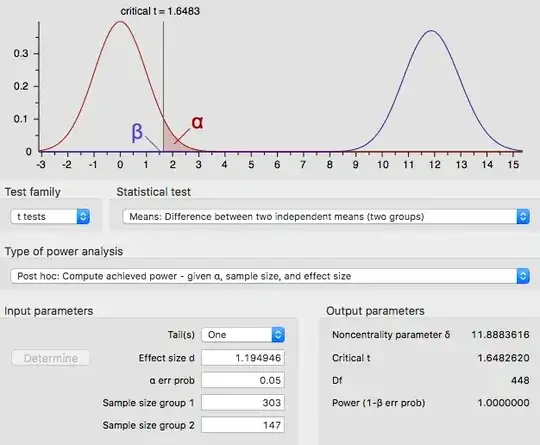

I'm using a program called G*Power to calculate the achieved power of a test I've performed. The screenshot shows my inputs and outputs.

It states that my test achieved a power of 1.0, meaning there is a ZERO percent chance of a Type II error occurring. I've never seen power reported this way before, just as I've never seen a p-value of exactly 0 (although I'm aware this can happen under extreme circumstances). Is this answer correct, and if so, how can a test be so confident in its results?
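To sanity-check the idea outside the tool, here is a minimal sketch of how power relates to the Type II error rate (power = 1 − β). It assumes a two-sided one-sample z-test, which is not necessarily the test in my screenshot, and the effect size (0.5) and sample sizes are hypothetical values I picked for illustration. The function name `z_test_beta` is my own, and this is not how G*Power computes anything internally.

```python
import math
from statistics import NormalDist


def normal_cdf(x):
    """Standard normal CDF via erfc, which keeps precision in the far lower tail."""
    return 0.5 * math.erfc(-x / math.sqrt(2))


def z_test_beta(effect_size, n, alpha=0.05):
    """Type II error rate (beta) of a two-sided one-sample z-test.

    Assumption: known unit variance, so the test statistic is shifted by
    effect_size * sqrt(n) under the alternative.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    shift = effect_size * math.sqrt(n)
    # beta = P(statistic lands inside the acceptance region | alternative is true)
    return normal_cdf(z_crit - shift) - normal_cdf(-z_crit - shift)


# Hypothetical inputs: a medium effect size and increasing sample sizes.
for n in (10, 50, 200, 1000):
    beta = z_test_beta(0.5, n)
    print(f"n={n:5d}  beta={beta:.3e}  power shown to 4 decimals={1 - beta:.4f}")
```

If β comes out tiny but still positive for the larger sample sizes, then a displayed power of 1.0 would presumably just be the true value rounded to the number of decimals shown, rather than a literal zero chance of a Type II error.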