I have a program that I profile with a test bench like this:

    for (size_t ii = 0U; ii < LOOP; ++ii) {
        clock_gettime(CLOCK_MONOTONIC, &time_start[ii]);
        do_work();
        clock_gettime(CLOCK_MONOTONIC, &time_finish[ii]);
    }

However, for microbenchmarking purposes the overhead of `clock_gettime` itself starts to interfere with the measurement, and the x86 `rdtsc` counter has oddities that make it annoying to use.
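To put a rough number on that interference, one option is to time two back-to-back `clock_gettime` calls with nothing in between. The sketch below is only an illustration: the helper name `timer_overhead_ns` and the `trials` parameter are hypothetical, and it assumes a POSIX system where `CLOCK_MONOTONIC` is available.

```c
#define _POSIX_C_SOURCE 199309L
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <time.h>

/* Hypothetical helper: smallest observed gap, in nanoseconds, between two
 * back-to-back clock_gettime calls. This approximates the fixed cost that
 * the timer adds to every measured interval. */
static int64_t timer_overhead_ns(size_t trials)
{
    assert(trials > 0);
    int64_t best = INT64_MAX;
    for (size_t i = 0; i < trials; ++i) {
        struct timespec a, b;
        clock_gettime(CLOCK_MONOTONIC, &a);
        clock_gettime(CLOCK_MONOTONIC, &b);
        int64_t d = (int64_t)(b.tv_sec - a.tv_sec) * 1000000000LL
                  + (int64_t)(b.tv_nsec - a.tv_nsec);
        if (d < best) {
            best = d; /* keep the least-disturbed reading */
        }
    }
    return best;
}
```

If the value returned is not small compared with the duration of `do_work()`, the timer is a significant part of each sample.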

Is it valid to instead profile:

    for (size_t ii = 0U; ii < LOOP; ++ii) {
        clock_gettime(CLOCK_MONOTONIC, &time_start[ii]);
        for (size_t jj = 0U; jj < INNER_LOOP; ++jj) {
            do_work();
        }
        clock_gettime(CLOCK_MONOTONIC, &time_finish[ii]);
    }

and then divide each measured interval by INNER_LOOP to get the average time per call?
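For concreteness, here is a minimal sketch of the per-call figure I mean. The helper names `elapsed_ns` and `per_call_ns` are hypothetical and not part of the test bench above. Note that each resulting sample is already the mean of INNER_LOOP calls, so a single slow call is spread across the whole batch rather than showing up on its own.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <time.h>

/* Hypothetical helper: elapsed nanoseconds between two readings.
 * Assumes finish was taken after start on a monotonic clock. */
static int64_t elapsed_ns(struct timespec start, struct timespec finish)
{
    return (int64_t)(finish.tv_sec - start.tv_sec) * 1000000000LL
         + (int64_t)(finish.tv_nsec - start.tv_nsec);
}

/* Hypothetical helper: mean time per do_work() call for one batch
 * of inner_loop calls timed as a single interval. */
static double per_call_ns(struct timespec start, struct timespec finish,
                          size_t inner_loop)
{
    assert(inner_loop > 0);
    return (double)elapsed_ns(start, finish) / (double)inner_loop;
}
```

With the arrays from the loop above, this would be called as `per_call_ns(time_start[ii], time_finish[ii], INNER_LOOP)` for each `ii`.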

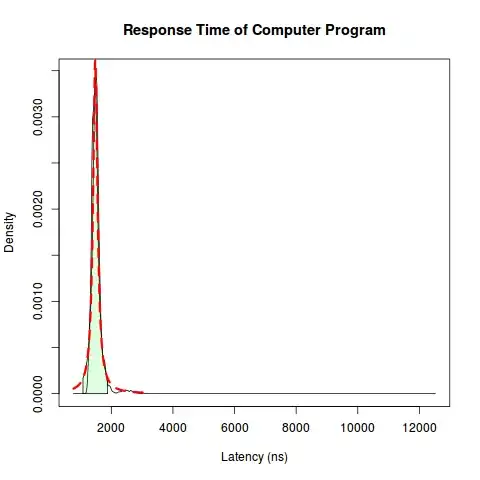

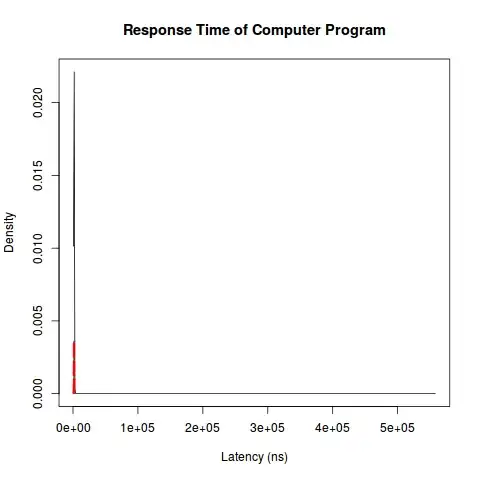

The measured latencies have a long-tailed distribution that, near its peak, looks like a Cauchy distribution.

Far out in the tail, though, the latencies are very large.

The distribution does not seem to follow a power law.

It seems to me that taking the average is inappropriate for a long-tailed distribution of this sort. I might need to take the median, maximum, minimum or some other quantity instead. However, I am not sure of the maths behind why this should be so, or what my alternatives are.
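To make the candidate statistics concrete, here is a rough sketch of how I would compute them from the per-iteration latencies. The `latency_summary` struct and `summarize` function are hypothetical names, the samples are assumed to be nanosecond values already extracted into an array, and the percentile uses a simple rounded-down rank rather than any particular standard definition.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical container for the summary statistics under consideration. */
struct latency_summary {
    int64_t min, median, p99, max;
    double mean;
};

/* Three-way comparison for qsort that avoids overflow from subtraction. */
static int cmp_i64(const void *a, const void *b)
{
    int64_t x = *(const int64_t *)a;
    int64_t y = *(const int64_t *)b;
    return (x > y) - (x < y);
}

/* Sorts samples in place, then reads order statistics from the sorted array. */
static struct latency_summary summarize(int64_t *samples, size_t n)
{
    assert(n > 0);
    qsort(samples, n, sizeof samples[0], cmp_i64);

    double sum = 0.0;
    for (size_t i = 0; i < n; ++i) {
        sum += (double)samples[i];
    }

    struct latency_summary s;
    s.min = samples[0];
    s.median = samples[n / 2];           /* upper median when n is even */
    s.p99 = samples[(n - 1) * 99 / 100]; /* rank rounded down */
    s.max = samples[n - 1];
    s.mean = sum / (double)n;
    return s;
}
```

For example, with the samples 100, 102, 101, 99 and 100000, the median is 101 while the mean is pulled up to 20080.4 by the single outlier, which is the effect I am worried about.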