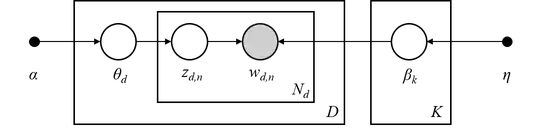

I have been playing with the hyper-parameters of the latent Dirichlet allocation (LDA) model and am wondering how sparsity of topic priors play a role in inference.

I have not performed these experiments on real data, but on simulated data. I started with a fixed vocabulary of fifteen words $(W = 15)$ and generated three dummy topics $(K = 3)$. Then using the generative process of LDA model, generated words $(N = 100)$ and documents $(D = 10)$.

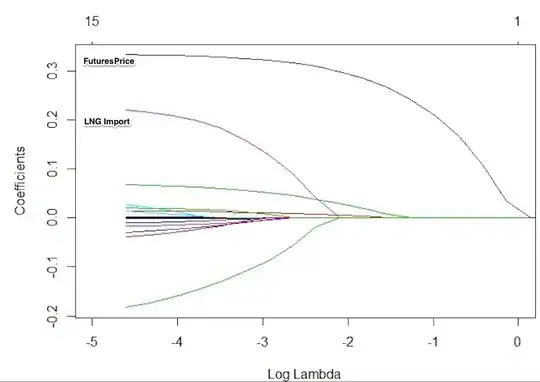

For inference, I am using the collapsed Gibbs sampler by Griffiths et al., 2004. I kept the hyper-parameter $\alpha$ the same, but used a range of values for $\eta$, which is the Dirichlet hyper-parameter for the prior on the topics. Here are some results:

I know that the figures are busy, so let me explain what they are. The first column (blue) in both figures refers to the "True" topics which were used to generate the documents. In Figure 1, the topics are chosen to be sparse, whereas in Figure 2 they are not sparse. Each of the red columns following the blue column are inferred topics for following values of $\eta = [0.1, 0.3, 0.5, 0.7, 0.9, 1, 3, 5, 7, 9]$

Here are my observations from the figures:

- When underlying topics are sparse, the LDA model does a pretty good job of inferring topics as long as the prior is chosen to be sparse, i.e. $\eta < 1$.

- When the underlying topics are not sparse, for $\eta < 1$, the model infers words with high probability pretty well, but the words with less probability are not so well represented in the topics, even with non-sparse priors, i.e. $\eta > 1$.

I think I follow that a sparsity assumption on topics is a fair one, especially for large text corpora which have a lot of words. But if the underlying topics are not so sparse, will the LDA model not be able to infer topics correctly?

EDIT 1:

I changed the figure so that the inferred topics are now arranged based on the best matching true topics using KL-Divergence between the true topics and the inferred topics. Any edit suggestions to help improve my question are welcome.