What is the feature_log_prob_ attribute of sklearn.naive_bayes.MultinomialNB() and how do I read it?


http://scikit-learn.org/stable/modules/generated/sklearn.naive_bayes.MultinomialNB.html – Taylor Mar 07 '17 at 21:07

3 Answers

Models like logistic regression or the Naive Bayes algorithm predict the probabilities of observing some outcomes. In the standard binary regression scenario, the model gives you the probability of observing the "success" category. In the multinomial case, the model returns the probability of observing each of the outcomes. Log probabilities are simply the natural logarithms of the predicted probabilities.
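A minimal sketch of the "natural logarithm" point, assuming small made-up count data (the arrays `X` and `y` below are hypothetical): exponentiating `predict_log_proba` recovers `predict_proba`, and exponentiating each row of `feature_log_prob_` gives an ordinary probability distribution over the features for one class.

```python
import numpy as np
from sklearn.naive_bayes import MultinomialNB

# Hypothetical toy data: 4 documents, 3 word-count features, 2 classes
X = np.array([[2, 1, 0],
              [3, 0, 1],
              [0, 2, 4],
              [1, 0, 5]])
y = np.array([0, 0, 1, 1])

clf = MultinomialNB().fit(X, y)

# Log probabilities use base e, so np.exp undoes them
log_post = clf.predict_log_proba(X)
post = clf.predict_proba(X)
print(np.allclose(np.exp(log_post), post))  # True

# Each row of feature_log_prob_ is log P(feature | class);
# exponentiating a row gives probabilities that sum to 1 (up to rounding)
feat_prob = np.exp(clf.feature_log_prob_)
print(feat_prob.sum(axis=1))
```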


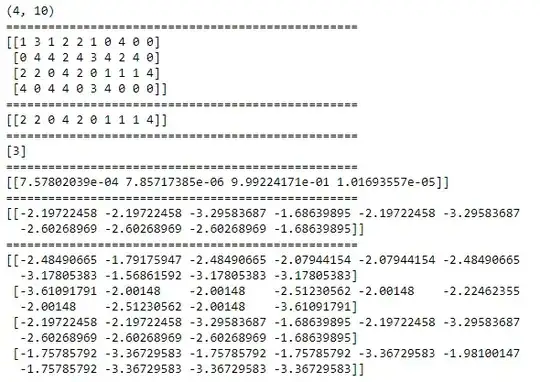

Print the shape of the result (`feature_log_prob_`): it is (number of classes, number of features). If y is the number of classes and x is the number of features, printing the whole array gives y rows and x columns. Each row holds the empirical log probability of the features given one class, P(x_i|y), where x_i is the i-th column (feature) and y is the row (class). See the sample code and output for a clearer picture.
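A small sketch of the rows/columns reading, assuming random hypothetical count data with 3 classes and 5 features. Rows follow the order of `clf.classes_` (the sorted class labels), so row `j` belongs to `clf.classes_[j]`; the indices `j` and `i` are arbitrary picks for illustration.

```python
import numpy as np
from sklearn.naive_bayes import MultinomialNB

rng = np.random.default_rng(0)
n_classes, n_features = 3, 5

# Hypothetical count data: 9 samples, 5 features, 3 classes (3 samples each)
X = rng.integers(0, 5, size=(9, n_features))
y = np.repeat([0, 1, 2], 3)

clf = MultinomialNB().fit(X, y)
flp = clf.feature_log_prob_

# Rows are classes, columns are features
print(flp.shape)  # (3, 5)

# Entry [j, i] is log P(x_i | y = clf.classes_[j]); np.exp turns it into a probability
j, i = 1, 4
print(clf.classes_[j], flp[j, i], np.exp(flp[j, i]))
```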

It is $P(x_i|y)$ where $x_i$ is the $i$th feature and $y$ is the class.
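For reference, the scikit-learn user guide describes the estimate behind this attribute as a smoothed relative frequency, where `alpha` is the smoothing parameter (default 1.0 in `MultinomialNB`):

$$
\hat{\theta}_{yi} = \hat{P}(x_i \mid y) = \frac{N_{yi} + \alpha}{N_y + \alpha n}
$$

Here $N_{yi}$ is the total count of feature $i$ over the training samples of class $y$, $N_y = \sum_{i=1}^{n} N_{yi}$ is the total count of all features for class $y$, and $n$ is the number of features. The entry in the row for class $y$ and column $i$ of `feature_log_prob_` is $\ln \hat{\theta}_{yi}$.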

This example shows its use:

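```python
import numpy as np
from sklearn.naive_bayes import MultinomialNB

# 6 samples (rows) x 100 count features (columns), values in 0..4
X = np.random.randint(5, size=(6, 100))
# one distinct class label per sample
y = np.array([1, 2, 3, 4, 5, 6])

clf = MultinomialNB()
nb = clf.fit(X, y)  # fit() returns the fitted estimator, so nb is clf

print('X ->\n', X)
print('y ->\n', y)

print('TEST -')
print(X[2:3])                     # the third training sample
print(clf.predict(X[2:3]))        # predicted class label
print(clf.predict_proba(X[2:3]))  # probability of each class
print(nb.feature_log_prob_[2:3])  # log P(x_i | y) for the third class (label 3)
```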
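To see how the rows are actually used, the sketch below (assuming the default `alpha=1.0`; the names `smoothed`, `manual` and `jll` are just illustrative) recomputes `feature_log_prob_` from the fitted `feature_count_` attribute and then scores each class the way `MultinomialNB` does at prediction time: the class log prior plus the count-weighted sum of that class's row. The resulting argmax should agree with `predict`.

```python
import numpy as np
from sklearn.naive_bayes import MultinomialNB

# Same kind of data as the example above: 6 samples, 100 count features, 6 classes
X = np.random.randint(5, size=(6, 100))
y = np.array([1, 2, 3, 4, 5, 6])

clf = MultinomialNB()  # alpha=1.0 by default
clf.fit(X, y)

# feature_count_[j, i]: summed count of feature i over samples of class clf.classes_[j]
smoothed = clf.feature_count_ + clf.alpha
manual = np.log(smoothed / smoothed.sum(axis=1, keepdims=True))
print(np.allclose(manual, clf.feature_log_prob_))  # True

# Row 2 of feature_log_prob_ belongs to clf.classes_[2], i.e. label 3
print(clf.classes_[2])  # 3

# Class score: log P(y) + sum_i x_i * log P(x_i | y); the highest score wins
jll = X @ clf.feature_log_prob_.T + clf.class_log_prior_
print(np.array_equal(clf.classes_[jll.argmax(axis=1)], clf.predict(X)))  # True
```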
