Anchors Explained

Anchors

For the time being, ignore the fancy term "pyramids of reference boxes": anchors are nothing but fixed-size rectangles that are fed to the Region Proposal Network (RPN). Anchors are defined over the last convolutional feature map, $k$ per spatial location, so there are $H_{featuremap} \times W_{featuremap} \times k$ of them, but their coordinates refer to the input image. For each anchor, the RPN predicts the probability that it contains an object of any class, plus four correction coordinates that move and resize the anchor to the right position. But what does the geometry of the anchors have to do with the RPN?
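To make the anchor count concrete, here is a minimal sketch of how such a grid of anchors could be generated. The stride of 16, the three scales, and the three aspect ratios are assumptions taken from the common Faster R-CNN configuration, and `generate_anchors` as well as the cell-centre convention are illustrative choices rather than anything fixed by the text above.

```python
from itertools import product

def generate_anchors(feat_h, feat_w, stride=16,
                     scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return anchors as (x1, y1, x2, y2) tuples in image coordinates."""
    anchors = []
    for row, col in product(range(feat_h), range(feat_w)):
        # Centre of this feature-map cell, projected back onto the image
        # (assumed convention: the middle of the stride x stride patch).
        cx = (col + 0.5) * stride
        cy = (row + 0.5) * stride
        for scale, ratio in product(scales, ratios):
            # ratio = height / width; the area w * h stays at scale ** 2.
            w = scale / ratio ** 0.5
            h = scale * ratio ** 0.5
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors

# A 38 x 50 feature map with k = 9 anchors per location.
anchors = generate_anchors(feat_h=38, feat_w=50)
print(len(anchors))  # 38 * 50 * 9 = 17100
```

The anchors live on the feature-map grid (one group of $k$ per cell), yet every coordinate is expressed in image pixels, which is what allows them to be compared directly with ground-truth boxes.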

Anchors Actually Appear in the Loss Function

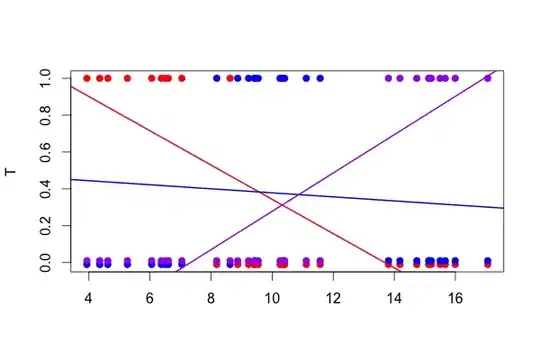

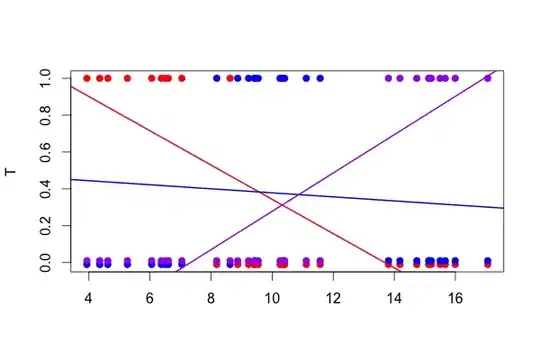

When training the RPN, a binary class label is first assigned to each anchor. Anchors whose Intersection-over-Union (IoU) overlap with a ground-truth box is higher than a certain threshold are assigned a positive label; likewise, anchors whose IoU is below a given lower threshold are labeled negative. These labels are then used to compute the loss function:
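The labeling rule can be sketched in a few lines. The thresholds 0.7 and 0.3 are assumptions borrowed from the usual Faster R-CNN setup (the text only speaks of "a certain threshold"), the helper names `iou` and `label_anchors` are hypothetical, and the sketch leaves out the additional rule that marks the best-matching anchor of each ground-truth box as positive.

```python
def iou(a, b):
    """Intersection-over-Union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def label_anchors(anchors, gt_boxes, pos_thresh=0.7, neg_thresh=0.3):
    """Return one label per anchor: 1 positive, 0 negative, -1 unlabeled."""
    labels = []
    for anchor in anchors:
        # Best overlap of this anchor with any ground-truth box.
        best = max((iou(anchor, gt) for gt in gt_boxes), default=0.0)
        if best > pos_thresh:
            labels.append(1)
        elif best < neg_thresh:
            labels.append(0)
        else:
            labels.append(-1)  # in between: takes no part in training
    return labels

gt_boxes = [(0, 0, 100, 100)]
example_anchors = [(0, 0, 100, 100), (0, 0, 100, 50), (200, 200, 300, 300)]
labels = label_anchors(example_anchors, gt_boxes)
print(labels)  # [1, -1, 0]
```

The middle anchor overlaps the ground-truth box with an IoU of 0.5, which falls between the two thresholds, so it receives no label.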

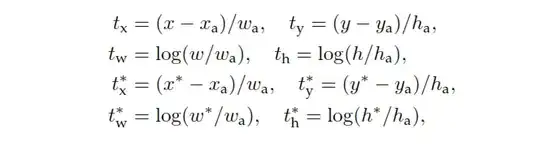

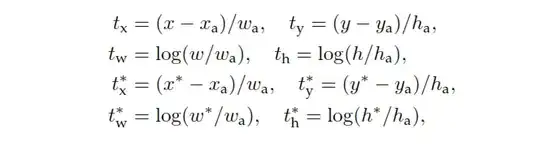

$p$ is the output of the RPN's classification head: the predicted probability that the anchor contains an object. For anchors labeled negative, the regression term contributes no loss, because the ground-truth label $p^*$ is zero. In other words, the network doesn't care about the coordinates it outputs for negative anchors and is happy as long as it classifies them correctly. For positive anchors, the regression loss is taken into account. $t$ is the output of the RPN's regression head, a vector of the 4 parameterized coordinates of the predicted bounding box. The parameterization depends on the anchor geometry and is as follows:
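Assuming the standard parameterization from the Faster R-CNN paper, the predicted offsets $t$ and their ground-truth targets $t^*$ read:

$$
\begin{aligned}
t_x &= (x - x_a)/w_a, & t_y &= (y - y_a)/h_a, & t_w &= \log(w/w_a), & t_h &= \log(h/h_a), \\
t_x^* &= (x^* - x_a)/w_a, & t_y^* &= (y^* - y_a)/h_a, & t_w^* &= \log(w^*/w_a), & t_h^* &= \log(h^*/h_a),
\end{aligned}
$$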

where $x$, $y$, $w$, and $h$ denote the box’s center coordinates and its width and height. The variables $x$, $x_a$, and $x^*$ refer to the predicted box, the anchor box, and the ground-truth box respectively (likewise for $y$, $w$, $h$).
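As an illustration of how the anchor geometry enters the regression targets, the sketch below encodes a box relative to an anchor and decodes it back. It assumes the standard Faster R-CNN form, in which centre offsets are divided by the anchor's width and height and the size ratios are log-scaled. The function names `to_center`, `encode`, and `decode` are hypothetical.

```python
import math

def to_center(box):
    """Convert (x1, y1, x2, y2) to (center_x, center_y, width, height)."""
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    return x1 + w / 2, y1 + h / 2, w, h

def encode(box, anchor):
    """Offsets (t_x, t_y, t_w, t_h) of `box` measured relative to `anchor`."""
    x, y, w, h = to_center(box)
    xa, ya, wa, ha = to_center(anchor)
    return ((x - xa) / wa, (y - ya) / ha, math.log(w / wa), math.log(h / ha))

def decode(t, anchor):
    """Invert `encode`: apply offsets `t` to `anchor` to recover a box."""
    xa, ya, wa, ha = to_center(anchor)
    x, y = t[0] * wa + xa, t[1] * ha + ya
    w, h = wa * math.exp(t[2]), ha * math.exp(t[3])
    return (x - w / 2, y - h / 2, x + w / 2, y + h / 2)

anchor = (0.0, 0.0, 100.0, 100.0)
gt_box = (10.0, 20.0, 110.0, 220.0)
t_star = encode(gt_box, anchor)    # ground-truth targets for this anchor
recovered = decode(t_star, anchor)  # approximately equal to gt_box
```

Because the offsets are normalized by the anchor's own size, the same numeric target means "shift by 10% of the anchor width" whether the anchor is small or large.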

Also notice that anchors with no label are neither classified nor reshaped; the RPN simply leaves them out of the computation. Once the RPN's job is done and the proposals are generated, the rest is very similar to Fast R-CNN.
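The three cases (positive, negative, unlabeled) can be put together in a small sketch of the loss. This is a simplified illustration, not the exact formulation: it assumes log loss for classification and smooth L1 for regression, normalizes by the number of labeled and positive anchors instead of the paper's constants, and uses the hypothetical names `smooth_l1` and `rpn_loss` with a weight `lam` set to 1.

```python
import math

def smooth_l1(x):
    """Smooth L1 penalty: quadratic near zero, linear elsewhere."""
    x = abs(x)
    return 0.5 * x * x if x < 1.0 else x - 0.5

def rpn_loss(probs, deltas, labels, targets, lam=1.0):
    """probs: predicted p per anchor; labels: 1, 0, or -1 (unlabeled)."""
    kept = [i for i, lab in enumerate(labels) if lab != -1]
    positives = [i for i in kept if labels[i] == 1]
    cls = 0.0
    for i in kept:
        # Clamp p to avoid log(0) in this toy implementation.
        p = min(max(probs[i], 1e-12), 1.0 - 1e-12)
        cls += -math.log(p) if labels[i] == 1 else -math.log(1.0 - p)
    # Regression is summed over positive anchors only (p* = 1).
    reg = sum(smooth_l1(d - t)
              for i in positives
              for d, t in zip(deltas[i], targets[i]))
    return cls / max(len(kept), 1) + lam * reg / max(len(positives), 1)

labels = [1, 0, -1]
probs = [0.9, 0.2, 0.5]
targets = [[0.0] * 4, [0.0] * 4, [0.0] * 4]
deltas = [[0.1, 0.0, 0.0, 0.0], [5.0] * 4, [9.0] * 4]
loss = rpn_loss(probs, deltas, labels, targets)

# Changing the boxes predicted for the negative and unlabeled anchors
# leaves the loss untouched.
other_deltas = [[0.1, 0.0, 0.0, 0.0], [0.0] * 4, [0.0] * 4]
same_loss = rpn_loss(probs, other_deltas, labels, targets)
```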