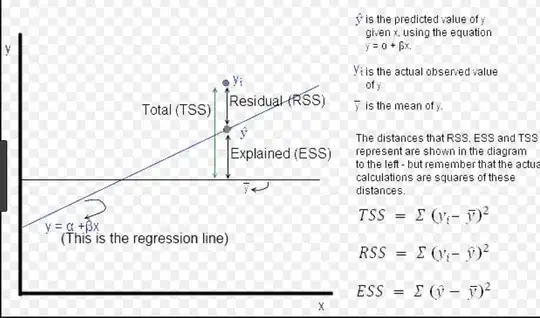

From Wikipedia (https://en.wikipedia.org/wiki/Residual_sum_of_squares), the RSS is the sum of the squared differences between the true values $y$ and the predicted values $\hat y$.
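Written out for $n$ observations indexed by $i$ (this indexing is added here for clarity), the standard definition is:

$$
\mathrm{RSS} = \sum_{i=1}^{n} \left(y_i - \hat y_i\right)^2
$$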

Then, according to https://en.wikipedia.org/wiki/Total_sum_of_squares, the TSS is the sum of the squared differences between the true values $y$ and the mean of all $y$.
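With the same indexing and $\bar y = \frac{1}{n}\sum_{i=1}^{n} y_i$ denoting the mean, this is:

$$
\mathrm{TSS} = \sum_{i=1}^{n} \left(y_i - \bar y\right)^2
$$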

However, I don't understand this line under the explanation for TSS:

> [...] the total sum of squares equals the explained sum of squares plus the residual sum of squares.
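A quick numerical check of this identity, written as a sketch that assumes an ordinary least-squares line with an intercept fitted to made-up data. The helper names `ols_fit` and `sums_of_squares` are hypothetical, and the explained sum of squares is taken as $\mathrm{ESS} = \sum_{i} (\hat y_i - \bar y)^2$:

```python
import math


def ols_fit(x, y):
    """Fit y_hat = a + b * x by ordinary least squares (with intercept)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sxy / sxx          # slope
    a = y_bar - b * x_bar  # intercept
    return a, b


def sums_of_squares(y, y_hat):
    """Return (TSS, ESS, RSS) for true values y and fitted values y_hat."""
    y_bar = sum(y) / len(y)
    tss = sum((yi - y_bar) ** 2 for yi in y)                # total
    ess = sum((yh - y_bar) ** 2 for yh in y_hat)            # explained
    rss = sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat))  # residual
    return tss, ess, rss


# Illustrative data only, not taken from the plots in this question.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

a, b = ols_fit(x, y)
y_hat = [a + b * xi for xi in x]
tss, ess, rss = sums_of_squares(y, y_hat)

print(tss, ess + rss, math.isclose(tss, ess + rss))
```

The decomposition depends on the fitted values coming from such a least-squares fit: expanding $\sum_i (y_i - \bar y)^2$ leaves a cross term $2\sum_i (y_i - \hat y_i)(\hat y_i - \bar y)$, which is zero for OLS with an intercept but not for an arbitrary line.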

If we plot the RSS on the graph, it looks like this:

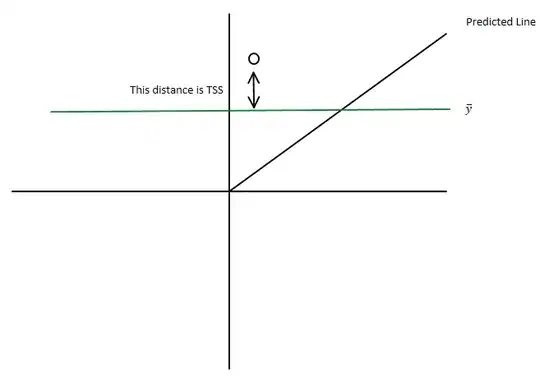

TSS Plot:

ESS Plot:

According to these images, the residual (unexplained) sum of squares is actually larger than the TSS. Is there something I'm not following?