I am comparing two categories (A and B) of social media posts with the corresponding number of likes for each one. I am taking samples of equal size from each category at random and performing a Mann-Whitney U-test using scipy.stats module in Python.

I have chosen U-test since taking the mean of A or B data does not make a lot of sense in my case and I have been relying on medians so far for the comparisons.

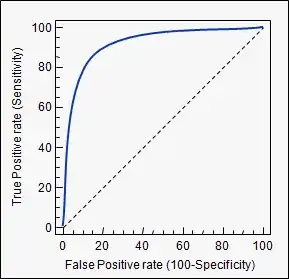

I was performing the test with sample sizes in range of 20-100 which gave the expected results that the two categories were similar. So I decided to try larger samples. With sample sizes >= 200, the p-value of the U-statistic was < .05, which could indicate that the distributions of the two samples might be different (at alpha = 5%). However, the visual analysis of the samples (n = 200) shows otherwise, i.e. the difference in the two distributions is very minuscule (see below). Is there something I'm not getting/doing wrong/misinterpreting? Thanks a bunch in advance.