You can think of the cluster assigned to each point as a class label. This lets you approach the question the same way you would a classification problem: "what are the features that distinguish these classes?" This perspective was also mentioned by ttnphns in the comments. Here are a few things that come to mind.

Look at the marginal distributions

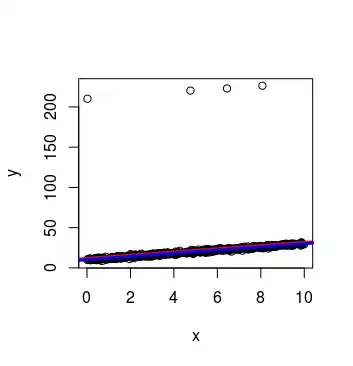

Plot the conditional distribution of each feature, given that the class label takes a particular value. You can also plot the probability that the class takes a particular value as a function of the value of each feature. My post here shows some examples and how to generate these plots. This will show the relationship between the class label and each feature individually.
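As a rough sketch of the quantities behind such plots, the snippet below estimates both $p(x \mid \text{class})$ and $p(\text{class} \mid x)$ for a single feature using shared histogram bins. The data are synthetic and purely illustrative, and the variable names are my own; the resulting arrays can be passed to whatever plotting library you prefer.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: one feature, two clusters with different means.
x = np.concatenate([rng.normal(0.0, 1.0, 500), rng.normal(3.0, 1.0, 500)])
labels = np.repeat([0, 1], 500)

# Shared bin edges so the per-class histograms are directly comparable.
edges = np.histogram_bin_edges(x, bins=20)
classes = np.unique(labels)

# counts[k, b] = number of points of class k that fall in bin b.
counts = np.stack([np.histogram(x[labels == k], bins=edges)[0] for k in classes])

# p(x in bin | class): normalize each row by the class size.
p_x_given_class = counts / counts.sum(axis=1, keepdims=True)

# p(class | x in bin): normalize each column by the bin total.
# Empty bins are left as NaN rather than dividing by zero.
bin_totals = counts.sum(axis=0, keepdims=True)
p_class_given_x = np.divide(
    counts, bin_totals,
    out=np.full(counts.shape, np.nan),
    where=bin_totals > 0,
)
```

Each row of `p_x_given_class` is one class-conditional histogram, and each column of `p_class_given_x` gives the class proportions within one bin of the feature.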

You could also construct the same type of plot for pairs of features (e.g. using 2d contour plots). Or, make 2d/3d scatterplots for pairs/triplets of features, with points colored by class label. The problem is that the number of pairs/triplets of features can be quite large.

To summarize everything, calculate a statistic measuring the strength of association between the class label and each individual feature (e.g. mutual information, or the F statistic from a one-way ANOVA). Plot a bar chart showing the statistic for each feature (possibly with error bars, hypothesis tests, etc.).
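One possible choice of statistic, shown here only as an illustration, is the correlation ratio $\eta^2$: the fraction of each feature's variance that is explained by the class label. The function name and the synthetic data below are my own assumptions, not something from the original question.

```python
import numpy as np

def correlation_ratio(X, labels):
    """Eta squared per feature: between-class sum of squares / total sum of squares.

    Assumes no feature is constant (otherwise the total sum of squares is zero).
    """
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    grand_mean = X.mean(axis=0)
    ss_total = ((X - grand_mean) ** 2).sum(axis=0)
    ss_between = np.zeros(X.shape[1])
    for k in np.unique(labels):
        Xk = X[labels == k]
        ss_between += len(Xk) * (Xk.mean(axis=0) - grand_mean) ** 2
    return ss_between / ss_total

rng = np.random.default_rng(0)

# Hypothetical data: three clusters, three features.
labels = np.repeat([0, 1, 2], 200)
X = rng.normal(size=(600, 3))
X[:, 0] += 3.0 * labels  # only feature 0 differs between clusters

eta2 = correlation_ratio(X, labels)
for j, value in enumerate(eta2):
    print(f"feature {j}: eta^2 = {value:.3f}")
```

Values near 1 mean the feature's variation is almost entirely between clusters; values near 0 mean the feature looks the same in every cluster. These are exactly the numbers you would put in the bar chart.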

The marginal distributions are nice and easy to interpret, with the caveat that they ignore dependencies between features (e.g. a feature on its own might contain no information about the class, but be highly informative in combination with other features).
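A small synthetic example of this caveat, assuming an XOR-like setup of my own construction: the label depends only on whether two features share the same sign. No threshold on either feature alone should do much better than chance, even though the pair determines the label completely.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical XOR-like data: the label depends only on whether the two
# features have the same sign.
X = rng.uniform(-1.0, 1.0, size=(2000, 2))
labels = (X[:, 0] * X[:, 1] > 0).astype(int)

def best_threshold_accuracy(x, y, thresholds=np.linspace(-1.0, 1.0, 41)):
    """Best accuracy of any rule 'x > t' (or its negation) using one feature."""
    best = 0.0
    for t in thresholds:
        acc = np.mean((x > t) == y)
        best = max(best, acc, 1.0 - acc)
    return best

# Marginal view: each feature on its own.
single_feature_acc = [best_threshold_accuracy(X[:, j], labels) for j in range(2)]

# Joint view: the sign of the product reproduces the label (by construction).
joint_acc = np.mean((X[:, 0] * X[:, 1] > 0) == labels)

print("single-feature accuracies:", np.round(single_feature_acc, 3))
print("joint-rule accuracy:", joint_acc)
```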

Fit an easily interpretable classifier

Fit a classifier to the cluster labels. Use a classifier whose parameters are easy to interpret in terms of the original input features. For example, kernelized SVMs and neural nets would be bad choices. But something like linear discriminant analysis would work well because it's a linear method, and the weight vectors tell you something about the features that are relevant to the classification. Logistic regression seems like a good choice for the same reason. $\ell_1$-penalized logistic regression could be even better, because the sparse weights would let you explain the class in terms of a small number of features. Linear methods are easiest to interpret, but may not be able to fit the class labels well enough if the 'true' decision boundary is strongly nonlinear. Unfortunately, many nonlinear classifiers don't provide an easy interpretation in terms of the input features. However, decision trees could be a good option.
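Here is a minimal sketch of the decision tree option using scikit-learn. The data are synthetic, k-means stands in for whatever clustering you actually used, and the feature names `f0` to `f3` are placeholders.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.tree import DecisionTreeClassifier, export_text

rng = np.random.default_rng(0)

# Hypothetical data: three groups separated in f0 and f1; f2 and f3 are noise.
centers = np.array([[0.0, 0.0], [6.0, 0.0], [0.0, 6.0]])
informative = np.vstack([c + rng.normal(size=(200, 2)) for c in centers])
noise = rng.normal(size=(600, 2))
X = np.hstack([informative, noise])
feature_names = ["f0", "f1", "f2", "f3"]

# Step 1: cluster (k-means is only a stand-in for the real clustering).
cluster_labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X)

# Step 2: fit a shallow tree that predicts the cluster label from the features.
tree = DecisionTreeClassifier(max_depth=2, random_state=0).fit(X, cluster_labels)

# Step 3: read off the rules and check how faithfully they reproduce the clusters.
rules = export_text(tree, feature_names=feature_names)
fidelity = tree.score(X, cluster_labels)

print(rules)
print(f"fraction of cluster labels reproduced: {fidelity:.3f}")
```

The printed rules describe each cluster as a short sequence of thresholds on the original features. Keeping `max_depth` small favors readability; if the fidelity is poor, the rules are not a trustworthy description of the clusters.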

With this general approach, you may have to decide how much you're willing to trade off between goodness of fit and ease of interpretation.

Fit any classifier and compute variable importance measures

Fit any classifier to the cluster labels, including one without easily interpretable parameters. This can give a good fit, even in the case where the 'true' decision boundary is very complex. Using the classifier, compute some 'variable importance measure' for each feature. This quantifies how strongly the classifier's output depends on each feature. Various such measures exist. Some are based on permuting or dropping each feature, possibly retraining the model, then measuring how classification accuracy degrades. A well-known variant of this technique (permutation importance) is often described in the context of random forests. For differentiable models like neural nets, one can compute things like the average magnitude of the gradient of the output w.r.t. the input. Each of these measures is subject to important caveats and pitfalls in how they're computed and interpreted, so caution is advised.
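As one concrete instance of the permutation-based measures, the sketch below fits a random forest to cluster labels and calls scikit-learn's `permutation_importance` on held-out data. The data are synthetic, k-means again stands in for the real clustering, and the feature names are placeholders.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Hypothetical data: f0 and f1 separate the groups; f2 and f3 are noise.
centers = np.array([[0.0, 0.0], [6.0, 0.0], [0.0, 6.0]])
informative = np.vstack([c + rng.normal(size=(200, 2)) for c in centers])
X = np.hstack([informative, rng.normal(size=(600, 2))])
feature_names = ["f0", "f1", "f2", "f3"]

# Cluster labels play the role of class labels.
cluster_labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X)

# Hold out data so importance reflects generalization, not memorization.
X_train, X_test, y_train, y_test = train_test_split(
    X, cluster_labels, test_size=0.3, random_state=0, stratify=cluster_labels
)

forest = RandomForestClassifier(n_estimators=100, random_state=0)
forest.fit(X_train, y_train)

# Shuffle one feature at a time and record the drop in held-out accuracy.
result = permutation_importance(
    forest, X_test, y_test, n_repeats=20, random_state=0
)

for name, mean, std in zip(
    feature_names, result.importances_mean, result.importances_std
):
    print(f"{name}: {mean:.3f} +/- {std:.3f}")
```

One pitfall worth keeping in mind: when features are strongly correlated, permuting one of them can understate its importance, because the model can still recover the information from the others.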

This approach measures how much each feature matters. But, unlike the methods above, it doesn't tell you how it matters.