With assume that you know the exactly reason for using PCA ,First of all assume that we have a our X matrix contains our data ( we have d features):

$$

X =

\begin{array}{cc}

x_1^1 & x_2^1 & ... & x_d^1 \\

x_2^1 & x_2^2 & ... & x_d^2 \\

...\\

x_n^1 & x_n^2 & ... & x_d^n\\

\end{array}

$$

We should compute a vector of all feature Mean :

$$

E[X] = \mu = [\mu_1,...,\mu_d]

$$

and compute matrix of all feature Covariance :

$$

Cov(x) = E[(x-\mu)(x-\mu)^T]

$$

now we have Covariance matrix we should compute eigenvectors and eigenvalues of Covariance Matrix , for this d*d matrix we have d eigenvalues , sort them from highest to lowest , now here is the PCA trick , we can ignore some of eigenvalues that are below a threshold because the eigenvectors belongs to them represents the feature that have lowest variance between data , and that feature is not very handy for classify objects :

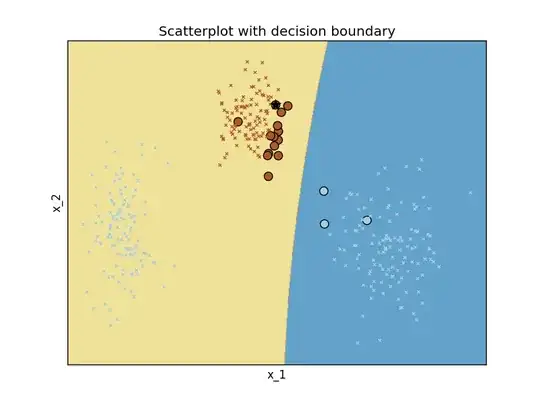

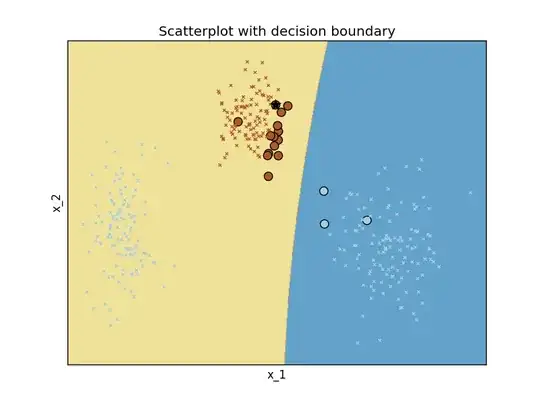

for example here PC1 is more useful for classify objects than PC2 with respect to data distribution towards that axis.now you have 4 data with 3 feature you can apply PCA to it to clarify which feature is not very helping you for classifying objects , so you can ignore it , in PCA you lose some of accuracy to obtain performance and simplifying computation.