I have just started Andrew Ng's Machine Learning course, a legacy version of which is online. Here are the notes I am using; they go with lecture two of the series, which is also on YouTube:

https://see.stanford.edu/materials/aimlcs229/cs229-notes1.pdf (around page 5)

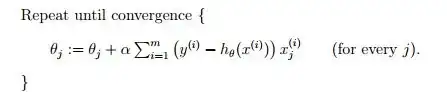

He derives an equation for LMS / gradient descent. I thought I understood the derivation until I reached $x^{(i)}_j$ (superscript $i$, subscript $j$). I do not understand what this means! I thought it might be a matrix index, but then both indices would be subscripts. I believe the $i$ and the $j$ relate separately to the summation and to the iteration, but I do not understand what the term represents. Does the last $x$ on the right mean $x_1, x_2, \dots, x_n$ summed? If so, what is the $j$? A simple example would help! Any help would be much appreciated. Thanks
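To make the question concrete, here is a minimal Python sketch of the batch LMS update $\theta_j := \theta_j + \alpha \sum_{i=1}^{m} \left(y^{(i)} - h_\theta(x^{(i)})\right) x^{(i)}_j$ under one reading of the notation, which is an assumption on my part: $x^{(i)}$ is the $i$-th training example (a whole vector of features, with $x_0 = 1$ as the intercept term), and the subscript $j$ picks out feature $j$ of that example. The toy data, the learning rate, and the names `h` and `batch_gd_step` are made up for illustration.

```python
# ASSUMED reading: row i of X is the training example x^(i), and column j is
# feature j, so X[i][j] plays the role of x_j^(i).
# Python counts rows from 0, so X[0] is x^(1); column 0 holds x_0 = 1.
X = [
    [1.0, 2.0, 3.0],  # x^(1): x_0 = 1, x_1 = 2, x_2 = 3
    [1.0, 1.0, 0.0],  # x^(2): x_0 = 1, x_1 = 1, x_2 = 0
    [1.0, 4.0, 1.0],  # x^(3): x_0 = 1, x_1 = 4, x_2 = 1
]
y = [10.0, 3.0, 9.0]  # made-up targets y^(1), y^(2), y^(3)


def h(theta, x):
    """Linear hypothesis h_theta(x) = sum_j theta_j * x_j for one example x."""
    return sum(t * xj for t, xj in zip(theta, x))


def batch_gd_step(theta, X, y, alpha):
    """One batch LMS step: theta_j += alpha * sum_i (y^(i) - h(x^(i))) * x_j^(i)."""
    m, n = len(X), len(theta)
    # One prediction error per training example i.
    errors = [y[i] - h(theta, X[i]) for i in range(m)]
    new_theta = []
    for j in range(n):
        # Sum over the examples i, taking feature j of each: x_j^(i) = X[i][j].
        grad_j = sum(errors[i] * X[i][j] for i in range(m))
        new_theta.append(theta[j] + alpha * grad_j)
    return new_theta


theta = batch_gd_step([0.0, 0.0, 0.0], X, y, alpha=0.01)
print(theta)  # approximately [0.22, 0.59, 0.39]
```

Under this reading, $j$ is fixed for each parameter being updated, while $i$ runs over the training examples inside the sum. For $\theta_1$ starting from zero, the sum is $10 \cdot 2 + 3 \cdot 1 + 9 \cdot 4 = 59$, which uses $x^{(1)}_1 = 2$, $x^{(2)}_1 = 1$ and $x^{(3)}_1 = 4$.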