I'm working with a dataset of bank loans, trying to predict which loans are going to default based on some pre-loan-subscription features (for instance, what's the credit grade of the borrower, or the amount of the loan, or the borrower's annual income...).

There are roughly 800.000 data points (one for each loan) and about $7\%$ of them are in default (which is a boolean True/False value).

I'm using regression algorithms to output a probability of default for each loan. For instance, given its features values, a particular loan will be assigned a probability of $p = 0.234$ to default. This works pretty well because, for instance, of all loans whose predictions satisfy $p \in [0.20, 0.30[$ in the test set, roughly $25\%$ of them are effectively in default, and so on.

The problem I'm facing is to find a suitable error function to estimate the algorithms' accuracy. Currently I'm using mean absolute error (MAE) which has the following issue: as most loans were not defaulted, if I arbitrarily assign a probability of $p = 0.0$ for every loan, then the mean absolute error will end up being very low, because obviously $p = 0.0$ will be the perfect prediction value for $93\%$ of loans.

What would then be a suitable error function so that its lowest is not when all predictions are arbitrarily set as low as possible, but accurately reflects the quality of the predictions?

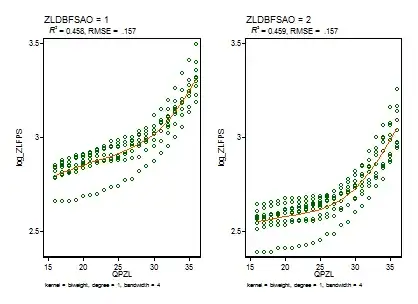

To give an illustration of the algorithms output, here are some results, showing the amount of loans predicted for each estimated probability range, and the corresponding proportion of those who have effectively defaulted.