The math needed to obtain an exact result is messy, but we can derive an exact value for the expected squared correlation coefficient relatively painlessly. It helps explain why a value near $1/2$ keeps showing up and why increasing the length $n$ of the random walk won't change things.

There is potential for confusion about standard terms. The absolute correlation referred to in the question, along with the statistics that make it up--variances and covariances--are formulas that one can apply to any pair of realizations of random walks. The question concern what happens when we look at many independent realizations. For that, we need to take expectations over the random walk process.

(Edit)

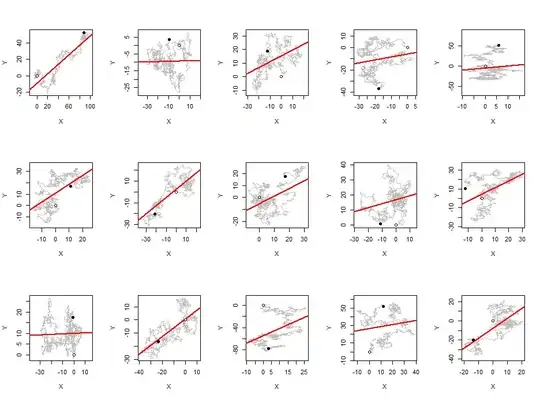

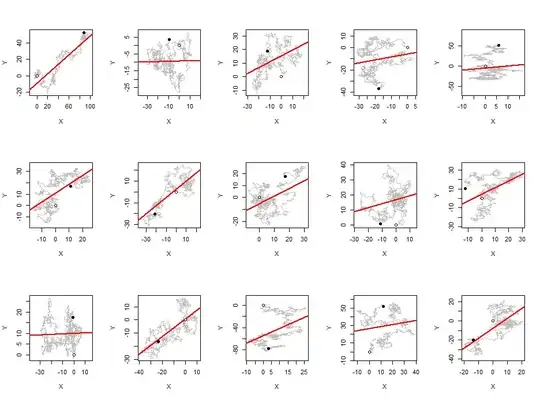

Before we proceed, I want to share some graphical insights with you. A pair of independent random walks $(X,Y)$ is a random walk in two dimensions. We can plot the path that steps from each $(X_t,Y_t)$ to $X_{t+1},Y_{t+1}$. If this path tends downwards (from left to right, plotted on the usual X-Y axes) then in order to study the absolute value of the correlation, let's negate all the $Y$ values. Plot the walks on axes sized to give the $X$ and $Y$ values equal standard deviations and superimpose the least-squares fit of $Y$ to $X$. The slopes of these lines will be the absolute values of the correlation coefficients, lying always between $0$ and $1$.

This figure shows $15$ such walks, each of length $960$ (with standard Normal differences). Little open circles mark their starting points. Dark circles mark their final locations.

These slopes tend to be pretty large. Perfectly random scatterplots of this many points would always have slopes very close to zero. If we had to describe the patterns emerging here, we might say that most 2D random walks gradually migrate from one location to another. (These aren't necessarily their starting and endpoint locations, however!) About half the time, then, that migration occurs in a diagonal direction--and the slope is accordingly high.

The rest of this post sketches an analysis of this situation.

A random walk $(X_i)$ is a sequence of partial sums of $(W_1, W_2, \ldots, W_n)$ where the $W_i$ are independent identically distributed zero-mean variables. Let their common variance be $\sigma^2$.

In a realization $x = (x_1, \ldots, x_n)$ of such a walk, the "variance" would be computed as if this were any dataset:

$$\operatorname{V}(x) = \frac{1}{n}\sum (x_i-\bar x)^2.$$

A nice way to compute this value is to take half the average of all the squared differences:

$$\operatorname{V}(x) = \frac{1}{n(n-1)}\sum_{j \gt i} (x_j-x_i)^2.$$

When $x$ is viewed as the outcome of a random walk $X$ of $n$ steps, the expectation of this is

$$\mathbb{E}(\operatorname{V}(X)) = \frac{1}{n(n-1)}\sum_{j \gt i} \mathbb{E}(X_j-X_i)^2.$$

The differences are sums of iid variables,

$$X_j - X_i = W_{i+1} + W_{i+2} + \cdots + W_j.$$

Expand the square and take expectations. Because the $W_k$ are independent and have zero means, the expectations of all cross terms are zero. That leaves only terms like $W_k$, whose expectation is $\sigma^2$. Thus

$$\mathbb{E}\left((X_j - X_i)^2\right) =\mathbb{E}\left((W_{i+1} + W_{i+2} + \cdots + W_j)^2\right)= (j-i)\sigma^2.$$

It easily follows that

$$\mathbb{E}(\operatorname{V}(X)) = \frac{1}{n(n-1)}\sum_{j \gt i} (j-i)\sigma^2 = \frac{n+1}{6}\sigma^2.$$

The covariance between two independent realizations $x$ and $y$--again in the sense of datasets, not random variables--can be computed with the same technique (but it requires more algebraic work; a quadruple sum is involved). The result is that the expected square of the covariance is

$$\mathbb{E}(\operatorname{C}(X,Y)^2) = \frac{3n^6-2n^5-3n^2+2n}{480n^2(n-1)^2}\sigma^4.$$

Consequently the expectation of the squared correlation coefficient between $X$ and $Y$, taken out to $n$ steps, is

$$\rho^2(n) = \frac{\mathbb{E}(\operatorname{C}(X,Y)^2)}{\mathbb{E}(\operatorname{V}(X))^2} = \frac{3}{40}\frac{3n^3-2n^2+3n-2}{n^3-n} = \frac{9}{40}\left(1+O\left(\frac{1}{n}\right)\right).$$

Although this is not constant, it rapidly approaches a limiting value of $9/40$. Its square root, approximately $0.47$, therefore approximates the expected absolute value of $\rho(n)$ (and underestimates it).

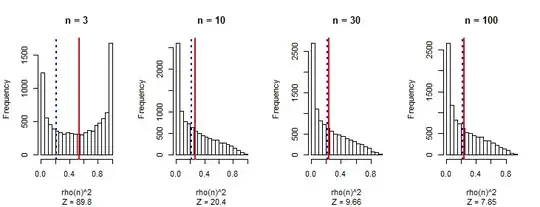

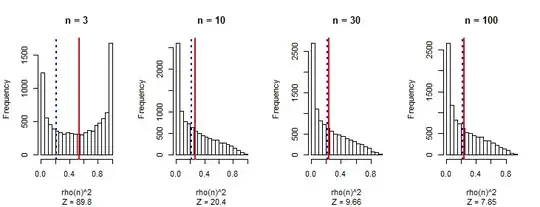

I am sure I have made computational errors, but simulations bear out the asymptotic accuracy. In the following results showing the histograms of $\rho^2(n)$ for $1000$ simulations each, the vertical red lines show the means while the dashed blue lines show the formula's value. Clearly it's incorrect, but asymptotically it is right. Evidently the entire distribution of $\rho^2(n)$ is approaching a limit as $n$ increases. Similarly, the distribution of $|\rho(n)|$ (which is the quantity of interest) will approach a limit.

This is the R code to produce the figure.

f <- function(n){

m <- (2 - 3* n + 2* n^2 -3 * n^3)/(n - n^3) * 3/40

}

n.sim <- 1e4

par(mfrow=c(1,4))

for (n in c(3, 10, 30, 100)) {

u <- matrix(rnorm(n*n.sim), nrow=n)

v <- matrix(rnorm(n*n.sim), nrow=n)

x <- apply(u, 2, cumsum)

y <- apply(v, 2, cumsum)

sim <- rep(NA_real_, n.sim)

for (i in 1:n.sim)

sim[i] <- cor(x[,i], y[,i])^2

z <- signif(sqrt(n.sim)*(mean(sim) - f(n)) / sd(sim), 3)

hist(sim,xlab="rho(n)^2", main=paste("n =", n), sub=paste("Z =", z))

abline(v=mean(sim), lwd=2, col="Red")

abline(v=f(n), col="Blue", lwd=2, lty=3)

}