Objective

To confirm whether my understanding of why the Lagrangian dual is used in SVMs is correct.

Background

While studying machine learning, I have seen gradient descent used for regression, logistic regression, backpropagation, etc. For SVMs, however, the Lagrangian dual suddenly pops up, and I am trying to understand why.

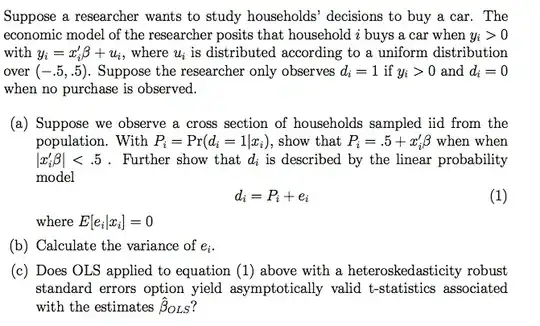

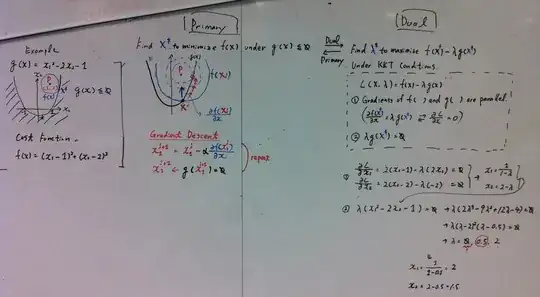

It looks to me as though gradient descent could solve the "primal" problem of the SVM, shown on the left side of the image above (please correct me if this is wrong). However, I have never seen an article or book that mentions using gradient descent for it. Instead, the Lagrangian dual and the KKT conditions always appear out of the blue, without a good explanation of why.
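For reference, here is a minimal sketch of what gradient descent on a primal SVM objective could look like. Plain gradient descent does not handle the hard constraints y_i(w·x_i + b) ≥ 1 directly, so this sketch assumes the soft-margin form, which folds the constraints into a hinge-loss penalty and makes the problem unconstrained. That form may differ from the one in the image. The hinge loss has a kink at margin 1, so the update uses a subgradient. The function names `train_linear_svm` and `predict`, the parameters `reg`, `lr` and `epochs`, and the toy data are all hypothetical, chosen only for illustration.

```python
def train_linear_svm(X, y, reg=0.01, lr=0.1, epochs=200):
    """Full-batch subgradient descent on the soft-margin SVM primal:

        reg / 2 * ||w||^2 + (1 / n) * sum_i max(0, 1 - y_i * (w . x_i + b))

    X is a list of feature tuples and y a list of labels in {-1, +1}.
    reg, lr and epochs are illustrative defaults, not tuned values.
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        # Gradient of the L2 regularizer (the bias b is not regularized)
        grad_w = [reg * wj for wj in w]
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:
                # Hinge term is active: its subgradient is -y_i * x_i (and -y_i for b)
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        # Plain (sub)gradient step; no explicit constraints remain to be handled
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Tiny linearly separable toy data set (made up for illustration)
X = [(2.0, 2.0), (3.0, 3.0), (2.0, 3.0), (-2.0, -2.0), (-3.0, -3.0), (-2.0, -3.0)]
y = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(X, y)
print(w, b, [predict(w, b, xi) for xi in X])
```

The update only ever touches w and b, so the number of variables equals the feature dimension plus one, which is the point of comparison with the dual formulation.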

Question

I suppose that using the dual reduces the number of variables, e.g. from (x1, x2) to λ in the image, and results in a formula that produces a definite solution.

- Is this the only reason to use the Lagrangian dual, or are there other reasons?

- Is there any reason why gradient descent cannot be used?
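To make the "fewer variables, definite solution" idea concrete, here is a sketch on a hypothetical toy problem (assumed for illustration, not necessarily the one in the image): minimize x1² + x2² subject to x1 + x2 ≥ 1. Minimizing the Lagrangian over (x1, x2) leaves a dual function of the single variable λ, and setting its derivative to zero gives λ in closed form. The function names below are hypothetical.

```python
# Hypothetical toy problem, chosen only for illustration:
#   minimize   f(x1, x2) = x1**2 + x2**2
#   subject to x1 + x2 >= 1
# Lagrangian (lam >= 0): L(x1, x2, lam) = x1**2 + x2**2 - lam * (x1 + x2 - 1)


def primal_objective(x1, x2):
    return x1**2 + x2**2


def minimizer_of_lagrangian(lam):
    # dL/dx1 = 2*x1 - lam = 0 and dL/dx2 = 2*x2 - lam = 0  =>  x1 = x2 = lam / 2
    return lam / 2, lam / 2


def dual_function(lam):
    # g(lam) = min over (x1, x2) of L; substituting x1 = x2 = lam / 2 gives
    # g(lam) = -lam**2 / 2 + lam, a function of the single variable lam
    x1, x2 = minimizer_of_lagrangian(lam)
    return primal_objective(x1, x2) - lam * (x1 + x2 - 1)


# g'(lam) = -lam + 1 = 0 gives lam* = 1, which satisfies lam >= 0,
# so the dual has a closed-form solution
lam_star = 1.0
x1_star, x2_star = minimizer_of_lagrangian(lam_star)

print(lam_star, (x1_star, x2_star), dual_function(lam_star), primal_objective(x1_star, x2_star))
```

In this toy case the dual maximum (0.5) equals the primal minimum at x1 = x2 = 0.5, because the problem is convex and has a strictly feasible point (strong duality).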