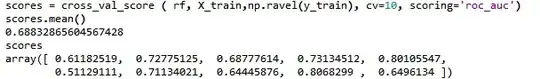

I used cross-validation to find the best set of parameters for a random forest on my dataset. I then used the best model to fit my training set and got an average AUC of 0.6883. However, the AUC scores varied considerably, from 0.5113 to 0.8068. Can I claim that my model's performance is 0.8068 (its best result) and use the corresponding model estimator on the test set for prediction?

Apply the best model to a new data set (not used before) and see how it performs. – user9292 Jan 09 '17 at 23:25

I am a bit confused about what you are trying to achieve. The model performance on the training set is *effectively useless* to report/claim/showcase. One should use the test set to "conclude a model's performance". One gets the best parameters from a CV procedure on the training set, refits an RF model with those parameters on the training set, and then evaluates it on the test set. That's all there is to it. If $0.806...$ is the optimal AUC (assuming we want to judge things using AUC), we take the corresponding parameters and run with those. The actual value $0.806...$ is irrelevant though; it is the test-set AUC we care about. – usεr11852 Jan 10 '17 at 00:03
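The workflow of tuning by CV on the training set only, refitting, and then scoring once on a held-out test set might look like the following minimal sketch. It assumes scikit-learn (the thread does not name a library), uses synthetic `make_classification` data as a stand-in for the asker's dataset, and the `param_grid` is hypothetical.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in for the real data (assumption: binary target).
X, y = make_classification(n_samples=500, n_features=20, random_state=0)

# Hold out a test set that plays no part in tuning.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Hypothetical grid; substitute whatever was actually searched.
param_grid = {"max_depth": [3, None], "max_features": ["sqrt", 0.5]}

search = GridSearchCV(
    RandomForestClassifier(n_estimators=100, random_state=0),
    param_grid,
    scoring="roc_auc",
    cv=5,
)
# CV on the training set only selects the parameters; with refit=True (the default)
# the winning configuration is then refit on the whole training set.
search.fit(X_train, y_train)

# The number worth reporting: AUC of the refit model on the untouched test set.
test_auc = roc_auc_score(y_test, search.predict_proba(X_test)[:, 1])
print("best params:", search.best_params_)
print("mean CV AUC of best params:", round(search.best_score_, 4))
print("test AUC:", round(test_auc, 4))
```

Note that `best_score_` is the *mean* over the CV folds for the chosen parameters, not the best single fold.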

@usεr11852 OK, so you mean cross-validation is only used to identify the best set of parameters? If so, the same problem arises here: as we saw during cross-validation, different training sets led to different AUCs. If I apply the best model to the test set and get an AUC of 0.5, can I conclude that this model fits the data poorly? If I changed my train/test split, the same model might reach a higher AUC and lead to a different conclusion. – LUSAQX Jan 10 '17 at 00:28

Yes, you are correct. The CV procedure is only used to identify the best set of parameters. Yes, an AUC value of ~0.5 is probably weak. Yes, that is an inherent issue with CV. Side comment: I *almost always* use repeated cross-validation; I do not put much trust in single runs of a CV routine. – usεr11852 Jan 10 '17 at 10:05
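Repeated cross-validation reruns k-fold CV several times with different shuffles and pools all fold scores, so the summary depends less on one particular partition. A minimal sketch, assuming scikit-learn and synthetic data in place of the real dataset:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score

# Synthetic stand-in data (assumption).
X, y = make_classification(n_samples=300, n_features=20, random_state=0)

# 5-fold CV repeated 3 times with different shuffles: 15 AUC values instead of 5.
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=3, random_state=0)
scores = cross_val_score(
    RandomForestClassifier(n_estimators=100, random_state=0),
    X, y, scoring="roc_auc", cv=cv,
)

# Summarize the whole distribution; the best single fold is an optimistic outlier.
print(f"AUC {scores.mean():.4f} +/- {scores.std():.4f} "
      f"(min {scores.min():.4f}, max {scores.max():.4f}, n={len(scores)})")
```

Reporting the mean together with the spread, rather than the maximum, is what addresses the original question about the 0.8068 fold.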

I think it is reasonable to assume that the variance of the AUC on the test set (or out-of-sample) will be roughly similar to the variance in AUC you saw during cross-validation (I think this is what you were actually trying to ask in your question). – rinspy Jul 24 '17 at 08:53
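One rough way to check this on a given dataset is to compare the fold-to-fold spread of a single CV run with the spread of test-set AUC across many different train/test splits, keeping the hyperparameters fixed. The sketch below assumes scikit-learn and synthetic data; `make_model` is a hypothetical helper, and 4 folds are used so each CV fold holds out the same 25% as the test splits. With only 4 folds the CV standard deviation is itself a noisy estimate, so treat the comparison as indicative only.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split

# Synthetic stand-in data (assumption); hyperparameters stay fixed throughout.
X, y = make_classification(n_samples=300, n_features=20, random_state=0)

def make_model():
    # Hypothetical helper: a fresh, identically configured forest each time.
    return RandomForestClassifier(n_estimators=100, random_state=0)

# Spread of AUC across the folds of one 4-fold CV run (each fold holds out 25%).
cv_aucs = cross_val_score(
    make_model(), X, y, scoring="roc_auc",
    cv=StratifiedKFold(n_splits=4, shuffle=True, random_state=0),
)

# Spread of test-set AUC across 10 different 75/25 train/test splits.
test_aucs = []
for seed in range(10):
    X_tr, X_te, y_tr, y_te = train_test_split(
        X, y, test_size=0.25, stratify=y, random_state=seed
    )
    model = make_model().fit(X_tr, y_tr)
    test_aucs.append(roc_auc_score(y_te, model.predict_proba(X_te)[:, 1]))
test_aucs = np.array(test_aucs)

print(f"CV fold AUCs: {cv_aucs.mean():.4f} +/- {cv_aucs.std():.4f}")
print(f"holdout AUCs: {test_aucs.mean():.4f} +/- {test_aucs.std():.4f}")
```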

1 Answer

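As mentioned in the comments, you cannot use the scores obtained on your training set to claim anything about your model's performance. If you want a performance estimate while still tuning hyperparameters by cross-validation, you need an outer cross-validation loop around the tuning procedure as well. This is called nested cross-validation.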
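Nested cross-validation can be sketched by passing a hyperparameter search as the estimator to an outer CV loop, so each outer test fold is scored by a model whose parameters were chosen without seeing it. This assumes scikit-learn; the data are synthetic and the `param_grid` is hypothetical.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold, cross_val_score

# Synthetic stand-in data (assumption).
X, y = make_classification(n_samples=300, n_features=20, random_state=0)

# Hypothetical grid; substitute the one actually searched.
param_grid = {"max_depth": [3, None], "max_features": ["sqrt", 0.5]}
inner_cv = StratifiedKFold(n_splits=3, shuffle=True, random_state=1)
outer_cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=2)

# Inner loop: the search is re-run from scratch inside every outer training fold.
tuner = GridSearchCV(
    RandomForestClassifier(n_estimators=100, random_state=0),
    param_grid, scoring="roc_auc", cv=inner_cv,
)

# Outer loop: each outer test fold is scored by a model tuned without it.
nested_scores = cross_val_score(tuner, X, y, scoring="roc_auc", cv=outer_cv)
print(f"nested CV AUC: {nested_scores.mean():.4f} +/- {nested_scores.std():.4f}")
```

The outer scores estimate the performance of the whole "tune, then fit" procedure rather than of one fixed parameter set, which is why they are suitable for reporting.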

See the following great question and answers for more details: Nested cross validation for model selection