The general intuition is that you can relate these moments using the Pythagorean Theorem (PT) in a suitably defined vector space, by showing that two of the moments are perpendicular and the third is the hypotenuse. The only algebra needed is to show that the two legs are indeed orthogonal.

For the sake of the following I'll assume you meant sample means and variances for computation purposes rather than moments for full distributions. That is:

$$
\begin{array}{rcll}
E[X] &=& \frac{1}{n}\sum x_i,& \rm{mean,\ first\ sample\ moment}\\
E[X^2] &=& \frac{1}{n}\sum x^2_i,& \rm{second\ sample\ moment\ (non\text{-}central)}\\
Var(X) &=& \frac{1}{n}\sum (x_i - E[X])^2,& \rm{variance,\ second\ central\ sample\ moment}
\end{array}
$$

(where all sums are over $n$ items).
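The three definitions translate directly into code. This is a minimal sketch in Python. It uses `fractions.Fraction` so the arithmetic is exact, and it divides by $n$ (not $n-1$), matching the definitions above. The sample values are arbitrary.

```python
from fractions import Fraction

def sample_moments(xs):
    """Return (E[X], E[X^2], Var(X)), each normalized by 1/n."""
    n = len(xs)
    mean = Fraction(sum(xs), n)                     # E[X]
    mean_sq = Fraction(sum(x * x for x in xs), n)   # E[X^2], non-central
    var = sum((x - mean) ** 2 for x in xs) / n      # Var(X), central; divides by n, not n - 1
    return mean, mean_sq, var

mean, mean_sq, var = sample_moments([2, 4, 4, 4, 5, 5, 7, 9])
print(mean, mean_sq, var)  # 5 29 4
```

Here $E[X^2] - E[X]^2 = 29 - 25 = 4 = Var(X)$, which anticipates the identity below.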

For reference, the elementary proof of $Var(X) = E[X^2] - E[X]^2$ is just symbol pushing:

$$
\begin{eqnarray}
Var(X) &=& \frac{1}{n}\sum (x_i - E[X])^2\\
&=& \frac{1}{n}\sum (x^2_i - 2 E[X]x_i + E[X]^2)\\
&=& \frac{1}{n}\sum x^2_i - \frac{2}{n} E[X] \sum x_i + \frac{1}{n}\sum E[X]^2\\
&=& E[X^2] - 2 E[X]^2 + \frac{1}{n} n E[X]^2\\
&=& E[X^2] - E[X]^2
\end{eqnarray}
$$

There's little insight here, just elementary algebra. One might notice that $E[X]$ is a constant inside the summation, so it can be pulled out of the sum, but that is about it.
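To make the symbol pushing concrete, the sketch below evaluates each line of the derivation on one arbitrary sample and confirms that all five lines give the same value. It is only a numeric spot check, not a proof. `Fraction` keeps the comparison exact.

```python
from fractions import Fraction

xs = [Fraction(v) for v in (1, 3, 3, 6, 12)]   # arbitrary example sample
n = len(xs)
m = sum(xs) / n                                 # E[X]
e_x2 = sum(x ** 2 for x in xs) / n              # E[X^2]

# One value per line of the derivation; they should all be equal.
steps = [
    sum((x - m) ** 2 for x in xs) / n,                                  # definition of Var(X)
    sum(x ** 2 - 2 * m * x + m ** 2 for x in xs) / n,                   # expand the square
    e_x2 - 2 * m * sum(xs) / n + sum(m ** 2 for _ in xs) / n,           # split the sum
    e_x2 - 2 * m ** 2 + n * m ** 2 / n,                                 # use sum x_i = n E[X]
    e_x2 - m ** 2,                                                      # E[X^2] - E[X]^2
]
print(steps)
```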

Now, in the geometric (vector-space) interpretation, we'll show the slightly rearranged equation that corresponds to the PT:

$$
\begin{eqnarray}
Var(X) + E[X]^2 &=& E[X^2]
\end{eqnarray}
$$

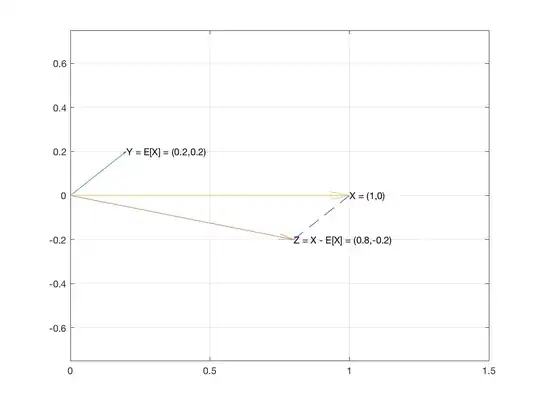

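So consider $X$, the sample of $n$ items, as a vector $\langle x_1, \dots, x_n\rangle$ in $\mathbb{R}^n$, and let ${\bf 1} = \langle 1, \dots, 1\rangle$ be the all-ones vector in $\mathbb{R}^n$. Now create the two vectors $E[X]{\bf 1}$ and $X-E[X]{\bf 1}$.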

The vector $E[X]{\bf 1}$ has the mean of the sample as every one of its coordinates.

The vector $X-E[X]{\bf 1}$ is $\langle x_1-E[X], \dots, x_n-E[X]\rangle$.

These two vectors are perpendicular because the dot product of the two vectors turns out to be 0:

$$
\begin{eqnarray}
E[X]{\bf 1}\cdot(X-E[X]{\bf 1}) &=& \sum E[X](x_i-E[X])\\
&=& \sum (E[X]x_i-E[X]^2)\\
&=& E[X]\sum x_i - \sum E[X]^2\\
&=& n E[X]E[X] - n E[X]^2\\
&=& 0
\end{eqnarray}
$$
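The orthogonality can be checked numerically on any sample. The sketch below is a minimal Python version with a hand-written `dot` helper (a hypothetical name introduced here, not from any library). Exact `Fraction` arithmetic makes the dot product come out as exactly 0; with floats you would only get a value close to 0 because of rounding.

```python
from fractions import Fraction

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

xs = [Fraction(v) for v in (2, 7, 1, 8, 2, 8)]   # arbitrary example sample
n = len(xs)
m = sum(xs) / n                                  # E[X] = 14/3
mean_vec = [m] * n                               # E[X] 1
dev_vec = [x - m for x in xs]                    # X - E[X] 1
print(dot(mean_vec, dev_vec))                    # 0
```

The result is 0 for every sample because the deviations $x_i - E[X]$ always sum to 0.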

So the two vectors are perpendicular, which means they are the two legs of a right triangle.

Then by PT (which holds in $\mathbb{R}^n$), the sum of the squares of the lengths of the two legs equals the square of the hypotenuse.

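The hypotenuse is $X$ itself, because the two legs add up to it: $E[X]{\bf 1} + (X - E[X]{\bf 1}) = X$. Writing $\|v\|^2 = v \cdot v$ for the squared length of a vector, the PT says

$$
\|X - E[X]{\bf 1}\|^2 + \|E[X]{\bf 1}\|^2 = \|X\|^2.
$$

Dividing every term by $n$ turns each squared length into one of our moments: $\frac{1}{n}\|X - E[X]{\bf 1}\|^2 = \frac{1}{n}\sum (x_i - E[X])^2 = Var(X)$, $\frac{1}{n}\|E[X]{\bf 1}\|^2 = \frac{1}{n} n E[X]^2 = E[X]^2$, and $\frac{1}{n}\|X\|^2 = \frac{1}{n}\sum x^2_i = E[X^2]$. So $Var(X) + E[X]^2 = E[X^2]$, with $E[X^2]$ coming from the hypotenuse.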
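The whole right-triangle picture fits in a few lines of Python. This is a minimal sketch on an arbitrary sample: it builds the two legs, confirms that they sum to $X$, checks the PT on squared lengths, and then divides by $n$ to recover the three moments. `sq_norm` is a hypothetical helper defined here.

```python
from fractions import Fraction

def sq_norm(v):
    """Squared length ||v||^2 = v . v."""
    return sum(a * a for a in v)

xs = [Fraction(v) for v in (3, 1, 4, 1, 5, 9, 2, 6)]   # arbitrary example sample
n = len(xs)
m = sum(xs) / n                                        # E[X]
leg_mean = [m] * n                                     # E[X] 1
leg_dev = [x - m for x in xs]                          # X - E[X] 1
hyp = [a + b for a, b in zip(leg_mean, leg_dev)]       # the legs sum to X

assert hyp == xs
assert sq_norm(leg_dev) + sq_norm(leg_mean) == sq_norm(hyp)   # PT in R^n

# Dividing each squared length by n gives Var(X), E[X]^2 and E[X^2].
var_x, mean_x_sq, e_x2 = sq_norm(leg_dev) / n, sq_norm(leg_mean) / n, sq_norm(hyp) / n
print(var_x, mean_x_sq, e_x2)  # 423/64 961/64 173/8
```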

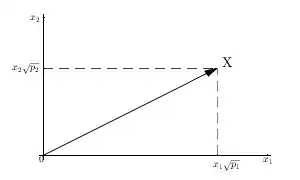

The interesting part about this interpretation is the conversion from a sample of $n$ items from a univariate distribution to a single vector in an $n$-dimensional space. This is similar to interpreting $n$ bivariate observations $(x_i, y_i)$ as really two vectors in $\mathbb{R}^n$.

In one sense that is enough: the vectors form a right triangle, and $E[X^2]$ pops out as the hypotenuse. We gave an interpretation (vectors) for these values and showed that they correspond. That's cool enough, but unenlightening, either statistically or geometrically. It doesn't really say why, and it uses a lot of extra conceptual machinery only to reproduce, in the end, mostly the purely algebraic proof we already had at the beginning.

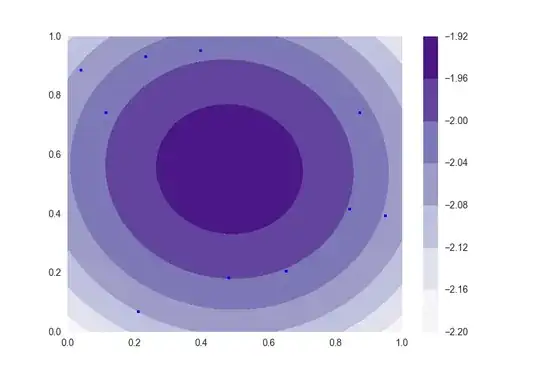

Another interesting part is that the mean and variance, though they intuitively measure center and spread in one dimension, correspond to orthogonal vectors in $n$ dimensions: $E[X]{\bf 1}$ and $X - E[X]{\bf 1}$. What does it mean that they're orthogonal? I don't know! Are there other moments that are orthogonal? Is there a larger system of relations that includes this orthogonality, such as central moments vs. non-central moments? I don't know!