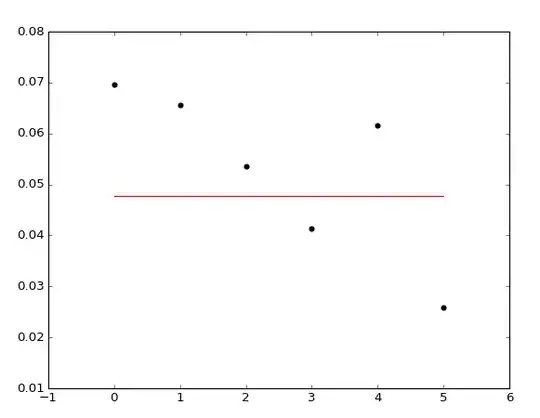

I am trying to make a regression with SVR and I found a problem in the process, the regression with random data is ok, but I tried it with my data, and with all of these three kernels the prediction's output is constant (see the plot). Here is a piece of my data, maybe the problem is here, but I cant'see why.

data.csv

2006,46,97,97,0.04124

2006,47,97,97,0.06957

2006,48,115,97,0.06569

2006,49,137,115,0.05357

2006,50,112,137,0.04132

2006,51,121,112,0.06154

2006,52,130,121,0.02586

And here is the code I'm using.

import pandas as pd

from sklearn.svm import SVR

import matplotlib.pyplot as plt

import numpy as np

#Importing data

data = pd.read_csv('data.csv')

data = data.as_matrix()

#Random data generator

#datar = np.random.random_sample((7,21))

#inputdatar = datar[:,0:4]

inputdata = data[:,0:4]

output1 = data[:,4]

svr_rbf = SVR(kernel='rbf',gamma=1)

svr_rbf.fit(inputdata,output1)

pre = svr_rbf.predict(inputdata)

axis = range(0,data.shape[0])

plt.scatter(axis, output1, color='black', label='Data')

plt.plot(axis, pre, color='red', label='Regression')

plt.show()

I think maybe it's hyperparameter tuning problem, but I'm not sure if the data would cause a problem as well. Any lights?