When I train an ANN model, the training set sometimes has a high R-squared while the testing set has a low R-squared. How can this situation be explained?

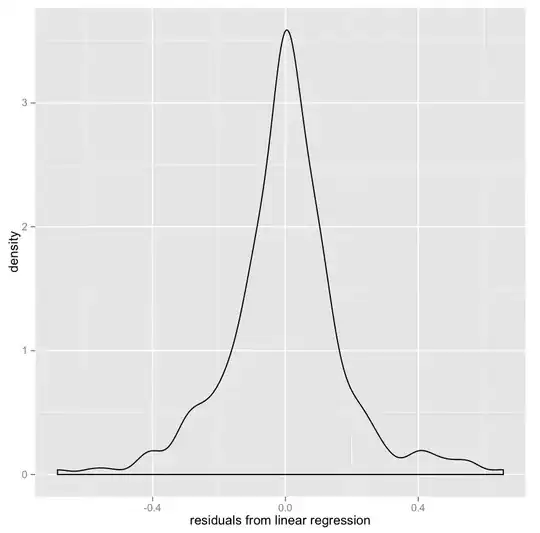

Is this an over-fitting problem? (See the figure.)
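For reference, R-squared has to be computed separately on each split, using that split's own mean in the denominator. A minimal NumPy sketch of the standard definition (1 - SS_res / SS_tot) follows; note that on a test set it is not bounded below by 0, so a model that predicts worse than the test-set mean gets a negative value.

```python
import numpy as np

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)         # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # spread around this split's mean
    return 1.0 - ss_res / ss_tot

print(r_squared([1, 2, 3], [1, 2, 3]))  # perfect fit
print(r_squared([1, 2, 3], [2, 2, 2]))  # same as predicting the mean
print(r_squared([1, 2, 3], [3, 2, 1]))  # worse than the mean: negative
```

The three calls give 1.0, 0.0 and -3.0 respectively.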


2 Answers

In addition to what @enricoanderlini wrote, I would also point out that there don't seem to be many data points in your test set. Having a small number of points exposes you even more to the impact of leverage / influential points (you can also read about it here). In this regard, @enricoanderlini is right to suggest cross-validation.

Interestingly, while the focus is often on model capacity (degrees of freedom), your set-up highlights that calibrating an ANN in a train-validate-test pipeline is "hungrier" for data points than, say, a plain OLS fit with a train-test split.
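A quick way to see the capacity side of this is to count free parameters. The sketch below assumes a fully connected network with one hidden layer (weights plus biases) and compares it with OLS (coefficients plus intercept); the 5 inputs and 10 hidden neurons are hypothetical numbers, not taken from the question.

```python
def mlp_params(n_inputs, n_hidden, n_outputs=1):
    """Weights + biases of a fully connected network with one hidden layer."""
    return (n_inputs + 1) * n_hidden + (n_hidden + 1) * n_outputs

def ols_params(n_inputs):
    """Coefficients + intercept of an ordinary least-squares fit."""
    return n_inputs + 1

print(mlp_params(5, 10), ols_params(5))  # 71 6
```

With, e.g., 100 samples and a 70/15/15 split, the network would be fitted on 70 points with 71 parameters, whereas OLS with an 80/20 split has 80 points for 6 parameters.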


The results seem to suggest overfitting is a problem at the moment.

Have you tried selecting the training, validation and test sets differently? What I mean is: draw a number of different splits, say 5-10, from the whole data set. If you observe the same behaviour with all of them, you know you have to redesign the NN.
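One way to generate such repeated splits is repeated random sub-sampling (sometimes called Monte Carlo cross-validation; k-fold cross-validation is the usual alternative). A minimal sketch, assuming a 70/15/15 train/validation/test split and 5 repeats:

```python
import numpy as np

def random_splits(n_samples, n_repeats=5, train=0.7, val=0.15, seed=0):
    """Yield (train_idx, val_idx, test_idx) for several random shuffles.
    The test set gets whatever remains after train and validation."""
    rng = np.random.default_rng(seed)
    n_train = int(round(train * n_samples))
    n_val = int(round(val * n_samples))
    for _ in range(n_repeats):
        idx = rng.permutation(n_samples)  # fresh random order each repeat
        yield idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

for k, (tr, va, te) in enumerate(random_splits(100)):
    # Train on tr (early stopping on va), then record R^2 on tr, va and te.
    print(k, len(tr), len(va), len(te))
```

If the training R-squared is consistently far above the validation and test R-squared across all repeats, the gap is a property of the model rather than of one unlucky split.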

If overfitting is a problem, you may want to decrease the number of neurons.
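To see how the number of hidden neurons drives the train/test gap, here is a sketch that swaps in a cheaper model than a backprop-trained ANN: a random-feature network, where the tanh hidden layer is frozen at random values and only the output layer is fitted by least squares. The data (sin(3x) plus noise, 20 training points), the weight scales and the pool of 100 hidden units are all assumptions for illustration.

```python
import numpy as np

def r2(y, y_hat):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    return 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)

# Fixed pool of random hidden units; the first n_hidden are used, so smaller
# networks are nested inside larger ones (1 input, tanh activation).
_pool = np.random.default_rng(0)
W_POOL = _pool.normal(0.0, 3.0, 100)  # input-to-hidden weights
B_POOL = _pool.normal(0.0, 1.0, 100)  # hidden biases

def random_feature_net(x_train, y_train, n_hidden):
    """Hidden layer frozen at random values; only the output weights (plus an
    output bias) are fitted by least squares. A stand-in for backprop training."""
    w, b = W_POOL[:n_hidden], B_POOL[:n_hidden]

    def design(x):
        # tanh hidden activations plus a column of ones for the output bias
        return np.column_stack([np.tanh(np.outer(x, w) + b), np.ones_like(x)])

    beta, *_ = np.linalg.lstsq(design(x_train), y_train, rcond=None)
    return lambda x: design(x) @ beta

# Synthetic data (assumption): y = sin(3x) + noise, only 20 training points.
rng = np.random.default_rng(1)
x_tr, x_te = rng.uniform(-1, 1, 20), rng.uniform(-1, 1, 200)
y_tr = np.sin(3 * x_tr) + rng.normal(0, 0.2, 20)
y_te = np.sin(3 * x_te) + rng.normal(0, 0.2, 200)

results = {}
for n_hidden in (3, 10, 50):
    model = random_feature_net(x_tr, y_tr, n_hidden)
    results[n_hidden] = (r2(y_tr, model(x_tr)), r2(y_te, model(x_te)))
    print(f"{n_hidden:>3} hidden: train R^2 = {results[n_hidden][0]:.3f}, "
          f"test R^2 = {results[n_hidden][1]:.3f}")
```

With 50 hidden units the output layer alone has 51 free parameters for 20 training points, so it can pass through every training point, noise included: the training R-squared goes to about 1 while the test R-squared cannot follow, because the test noise is not predictable.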


- Can you give me an example? What do you mean by "choose a number of different sets"? I don't get it. And how do you recognize the overfitting from the figure? Thank you, I appreciate it. – Jeffrey Dec 29 '16 at 14:18

- Overfitting occurs when the statistical model describes the noise in the data as well as the general relationship. Put simply, this happens when you fit the training data "too well", so that the validation data shows a poorer fit. This is more or less what you observe: the fit on the training data is excellent, while it degrades on the validation and test data sets. – Enrico Anderlini Dec 29 '16 at 15:01

- So, what I suggest you do is: take your data set, shuffle it, and select approximately 70-80% of the points as the training set, 10-20% as the validation set and 10% as the test set (you may use other values based on what you find in the literature). Then repeat this process another ~4 times. – Enrico Anderlini Dec 29 '16 at 15:04

- If you observe the same behaviour (i.e. the fit on the validation and test sets is worse than on the training set) in all repeats, then there is clearly a problem with your model as it stands, and you need to change it. – Enrico Anderlini Dec 29 '16 at 15:06

- Some people also average the models from all repeats to produce the final model. This is a way to make full use of a possibly limited data set. I hope this helps. – Enrico Anderlini Dec 29 '16 at 15:07

- I know you probably have not used Matlab, but I find their documentation neat and concise: https://uk.mathworks.com/help/nnet/ug/improve-neural-network-generalization-and-avoid-overfitting.html – Enrico Anderlini Dec 29 '16 at 15:11

- Do I need to normalize my data first, e.g. scale each X and Y variable to [0,1] or [-1,1]? Can I use nntool, or is there anything else you would suggest? – Jeffrey Jan 01 '17 at 03:13

- If you intend to use the Matlab toolboxes (and have access to them; they can be very expensive), then nntool is a very clear and simple introduction. If you use the toolbox, the data is normalized automatically (the toolbox does it itself). However, it is good practice to do it yourself as well. – Enrico Anderlini Jan 01 '17 at 17:32

- The toolbox is very powerful and gives you access to a lot of resources. The GUI is particularly helpful. I would start with feedforward networks for simple applications. However, once you have the appropriate design, I would drop the GUI in order to speed up the program. – Enrico Anderlini Jan 01 '17 at 17:34

- There are some very good resources for Python as well. – Enrico Anderlini Jan 01 '17 at 17:34
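On the normalization question, a minimal min-max scaling sketch in Python (roughly the kind of [-1, 1] mapping Matlab's `mapminmax` preprocessing performs, though this is not that function). The key assumption is that the minimum and maximum are learned on the training split only and then reused for validation and test data, so no information from those sets leaks into training. The inverse is needed to map scaled Y predictions back before computing R-squared in the original units.

```python
import numpy as np

def fit_minmax(x_train, low=-1.0, high=1.0):
    """Learn per-column min/max on the training split only and return
    (transform, inverse) functions mapping each column to [low, high]."""
    x_train = np.asarray(x_train, dtype=float)
    x_min, x_max = x_train.min(axis=0), x_train.max(axis=0)
    span = np.where(x_max > x_min, x_max - x_min, 1.0)  # guard constant columns

    def transform(x):
        return low + (np.asarray(x, dtype=float) - x_min) * (high - low) / span

    def inverse(z):
        return x_min + (np.asarray(z, dtype=float) - low) * span / (high - low)

    return transform, inverse

to_scaled, from_scaled = fit_minmax([[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]])
print(to_scaled([[0.0, 10.0], [10.0, 30.0]]))  # [[-1. -1.] [ 1.  1.]]
```

Validation or test values outside the training range will map slightly outside [-1, 1]; that is expected and is not a reason to refit the scaler on them.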