Q1: Show quantitatively that OLS regression can be applied inconsistently for linear parameter estimation.

OLS in y returns a minimum-error regression line for estimating y-values given a fixed x-value, and is most simply derived for equidistant x-axis values. When the x-values are not equidistant, the line with least error in the y estimate is generally not the line corresponding to the best functional relationship between x and y; it remains a least-error estimator of y given x. That is fine if our regression goal is to estimate y given x. It is not good if we want to see, for example, how method A relates to method B: establishing the functional interrelationship (codependency) of two methods requires a regression treatment that accounts for the variance of both.
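One well-known regression treatment that accounts for error in both variables is Deming regression; it is named here only as an illustration of that class of methods, not as the method this question necessarily has in mind. The sketch below assumes the ratio `delta` of the y-error variance to the x-error variance is known (`delta=1.0` gives orthogonal regression); the function names `deming_fit` and `ols_fit` are hypothetical helpers, and the small demo uses an arbitrary seed with unit-SD noise in both x and y.

```python
import math
import random
from statistics import fmean


def ols_fit(x, y):
    """Ordinary least squares of y on x: returns (intercept, slope)."""
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def deming_fit(x, y, delta=1.0):
    """Deming regression: returns (intercept, slope).

    delta is the assumed ratio var(error in y) / var(error in x).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length >= 2")
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x) / (n - 1)
    syy = sum((yi - my) ** 2 for yi in y) / (n - 1)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / (n - 1)
    diff = syy - delta * sxx
    slope = (diff + math.sqrt(diff ** 2 + 4 * delta * sxy ** 2)) / (2 * sxy)
    return my - slope * mx, slope


if __name__ == "__main__":
    rng = random.Random(1)  # arbitrary seed for the demo
    true_x = [0.01 * i for i in range(1000)]
    # Unit-SD noise in both variables around the line y = x.
    xs = [t + rng.gauss(0, 1) for t in true_x]
    ys = [t + rng.gauss(0, 1) for t in true_x]
    print("OLS    (intercept, slope):", ols_fit(xs, ys))
    print("Deming (intercept, slope):", deming_fit(xs, ys, delta=1.0))
```

For positively correlated data the Deming slope lies between the OLS slope of y on x and the inverse of the OLS slope of x on y, so it cannot be smaller than the OLS y-on-x slope.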

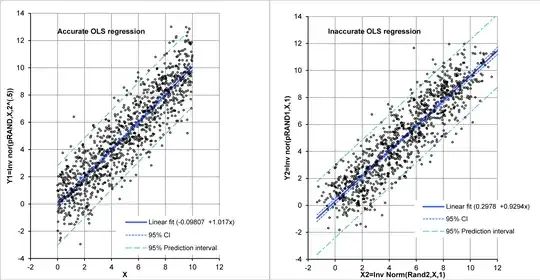

We show an example of how linear OLS does not echo the generating slope and intercept in the bivariate case, using a Monte Carlo simulation. This is an example, not a proof; the question asks for a proof. Note that for low $\text{R}^2$ values the effect is easy to show with small $n$, whereas for higher $\text{R}^2$ values $n$ has to be larger. Here, $\text{R}^2\approx 0.8$. To keep the same $\text{R}^2$ value while increasing $n$, one option among others is to keep the same $X$-axis range; for example, for $n=10,000$ rather than $n=1,000$, we could make $\Delta X1=0.001$.
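As a check on the quoted $\text{R}^2\approx 0.8$, the population values implied by the simulation design can be worked out. This sketch treats the $X1$ grid as having the variance of an evenly spaced grid of $n$ points with spacing $\Delta X1$ (ignoring the negligible $n$ versus $n-1$ denominator difference), and uses the noise SDs stated in the setup: $\sqrt{2}$ in $y$ only for $\{X1,Y1\}$, and 1 in each of $x$ and $y$ for $\{X2,Y2\}$.

$$
\begin{aligned}
\sigma_X^2 &= \frac{(n^2-1)\,(\Delta X1)^2}{12} = \frac{(1000^2-1)(0.01)^2}{12} \approx 8.333\\
\text{R}^2_{\{X1,Y1\}} &\approx \frac{\sigma_X^2}{\sigma_X^2+2} \approx \frac{8.333}{10.333} \approx 0.806\\
\text{R}^2_{\{X2,Y2\}} &\approx \left(\frac{\sigma_X^2}{\sigma_X^2+1}\right)^2 \approx \left(\frac{8.333}{9.333}\right)^2 \approx 0.797
\end{aligned}
$$

With $n=10,000$ and $\Delta X1=0.001$, $\sigma_X^2=(10^8-1)(10^{-6})/12\approx 8.333$ as well, so both $\text{R}^2$ values are unchanged.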

Code: Excel 2007 or higher

| Row | A | B | C | D | E | F |
|---|---|---|---|---|---|---|
| 1 | X1 | RAND1 | RAND2 | Y1=NORM.INV(RAND1,X1,SQRT(2)) | Y2=NORM.INV(RAND1,X1,1) | X2=NORM.INV(RAND2,X1,1) |
| 2 | 0 | =RAND() | =RAND() | =NORM.INV(B2,A2,SQRT(2)) | =NORM.INV(B2,A2,1) | =NORM.INV(C2,A2,1) |
| 3 | =A2+0.01 | =RAND() | =RAND() | =NORM.INV(B3,A3,SQRT(2)) | =NORM.INV(B3,A3,1) | =NORM.INV(C3,A3,1) |
| 4 | =A3+0.01 | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| 5 | =A4+0.01 | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ |
| 1001 | 9.99 | 0.391435454 | 0.466473036 | 9.60027146 | 9.714420306 | 9.905861194 |

First we construct a regression that is consistent with least squares in y both as a least-error-in-y estimator and as a functional estimate with the correct line parameters. We use the line $y=X1$ with $X1=\{0,0.01,0.02,0.03,\ldots,9.97,9.98,9.99\}$ and randomized $Y1$ values whose mean increases with $X1$ ($\{X1,Y1\}$ in code): for each of the $n=1000$ values $X_i$, $Y_i$ = NORM.INV(RAND1, mean=$X_i$, SD=$\sqrt{2}$). Since the generating model is $y=X1$, fitting these data returns our generating line to within the expected confidence intervals. For our second model, keeping $y=x$, we randomize both $X_i$ and $Y_i$ ($\{X2,Y2\}$ in code), reducing the standard deviations of $X2$ and $Y2$ to 1 each so that the vector-sum standard deviation stays at $\sqrt{1^2+1^2}=\sqrt{2}$, and refit. That gives us the following regression plots.
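For readers without Excel, the spreadsheet design can be mirrored in a few lines of Python. This is a sketch under stated assumptions, not the author's code: `NORM.INV(RAND(), mu, sd)` is replaced by the distributionally equivalent `mu + sd * z` with `z` drawn by `random.gauss`, the X1 grid is computed as `i * dx` rather than by chained `+0.01` additions (to avoid floating-point drift), and the helper names `ols` and `simulate` and the seed are hypothetical. A run produces one random realization, so its numbers will not reproduce the tables below.

```python
import math
import random
from statistics import fmean


def ols(x, y):
    """Ordinary least squares of y on x; returns (intercept, slope)."""
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def simulate(n=1000, dx=0.01, seed=None):
    """One realization of the spreadsheet columns A and D-F.

    z1 plays the role of RAND1 (shared by Y1 and Y2, as in the sheet)
    and z2 the role of RAND2 (used only by X2).
    """
    rng = random.Random(seed)
    x1, y1, x2, y2 = [], [], [], []
    for i in range(n):
        xi = i * dx                           # column A: X1 = 0, 0.01, ..., 9.99
        z1 = rng.gauss(0.0, 1.0)
        z2 = rng.gauss(0.0, 1.0)
        x1.append(xi)
        y1.append(xi + math.sqrt(2) * z1)     # column D: all noise in y, SD sqrt(2)
        y2.append(xi + z1)                    # column E: noise in y, SD 1
        x2.append(xi + z2)                    # column F: noise in x, SD 1
    return x1, y1, x2, y2


if __name__ == "__main__":
    x1, y1, x2, y2 = simulate(seed=2024)
    print("monovariate {X1,Y1}: intercept=%.4f slope=%.4f" % ols(x1, y1))
    print("bivariate   {X2,Y2}: intercept=%.4f slope=%.4f" % ols(x2, y2))
```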

This gives us the following regression parameters for the monovariate regression case ($\{X1,Y1\}$), in which all of the variability is in the y-axis variable and the least-error estimate line for y given x is also the functional relationship between x and y.

| Term | Coefficient | 95% CI | SE | t statistic | DF | p |
|---|---|---|---|---|---|---|
| Intercept | -0.09807 | -0.28222 to 0.08608 | 0.093842 | -1.05 | 998 | 0.2962 |
| Slope | 1.017 | 0.985 to 1.048 | 0.0163 | 62.50 | 998 | <0.0001 |

For the bivariate regression line ($\{X2,Y2\}$) we obtain:

| Term | Coefficient | 95% CI | SE | t statistic | DF | p |
|---|---|---|---|---|---|---|
| Intercept | 0.2978 | 0.1313 to 0.4643 | 0.08486 | 3.51 | 998 | 0.0005 |
| Slope | 0.9294 | 0.9010 to 0.9578 | 0.01447 | 64.23 | 998 | <0.0001 |
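The columns of these tables follow from the classical fixed-x OLS formulas, sketched below for reference. The function name `ols_table` is hypothetical, and the confidence interval uses a normal critical value as a large-df stand-in for Student's t (for DF = 998, $t_{0.975}\approx 1.962$ versus $z_{0.975}\approx 1.960$), since the Python standard library has no t quantile; p-values are omitted for the same reason. Note that these standard errors assume x is measured without error, which is exactly the assumption the bivariate case violates.

```python
import math
from statistics import NormalDist, fmean


def ols_table(x, y, conf=0.95):
    """OLS of y on x with classical (fixed-x) standard errors.

    Returns ({"Intercept": row, "Slope": row}, df), where each row is
    (coefficient, SE, t statistic, CI low, CI high). The CI uses a normal
    critical value, which approximates Student's t only for large df.
    """
    n = len(x)
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    df = n - 2
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s2 = sse / df                                   # residual variance
    se_slope = math.sqrt(s2 / sxx)
    se_int = math.sqrt(s2 * (1.0 / n + mx ** 2 / sxx))
    crit = NormalDist().inv_cdf(0.5 + conf / 2)
    rows = {}
    for name, coef, se in (("Intercept", intercept, se_int),
                           ("Slope", slope, se_slope)):
        rows[name] = (coef, se, coef / se, coef - crit * se, coef + crit * se)
    return rows, df


if __name__ == "__main__":
    rows, df = ols_table([0, 1, 2, 3, 4], [1, 3, 2, 5, 4])
    for name, (coef, se, t, lo, hi) in rows.items():
        print(f"{name}: {coef:.4f} SE={se:.4f} t={t:.2f} CI=({lo:.3f}, {hi:.3f}) DF={df}")
```

The five-point demo only checks the coefficient and SE arithmetic; with DF = 3 its normal-based interval would be too narrow.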

From this, we see that the OLS fit returns neither a slope of 1 nor an intercept of 0, the values of the generating function; both lie outside the respective 95% confidence intervals. Thus, the values returned are the least-error-in-y estimators, and the slope of that line is reduced in magnitude compared to the generating function.
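For comparison, the standard errors-in-variables (regression dilution) result gives the large-sample limit of the OLS fit under this design. It is added here as a reference point, assuming, as in the spreadsheet, that the error in $X2$ (variance $\sigma_u^2=1$, from RAND2) is independent of $X1$ and of the error in $Y2$ (from RAND1), with generating values $\beta=1$, $\alpha=0$, $\sigma_X^2\approx 8.333$ and $\mu_X=\bar{X1}=4.995$:

$$
\begin{aligned}
\operatorname{plim}\hat\beta_{\text{OLS}} &= \beta\,\lambda,\qquad \lambda=\frac{\sigma_X^2}{\sigma_X^2+\sigma_u^2}\approx\frac{8.333}{9.333}\approx 0.893\\
\operatorname{plim}\hat\alpha_{\text{OLS}} &= \alpha+\beta\,(1-\lambda)\,\mu_X \approx 0.107\times 4.995 \approx 0.535
\end{aligned}
$$

A single realization scatters around these limits; the sign of the effect (slope below 1, intercept above 0) is what the simulation shows.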

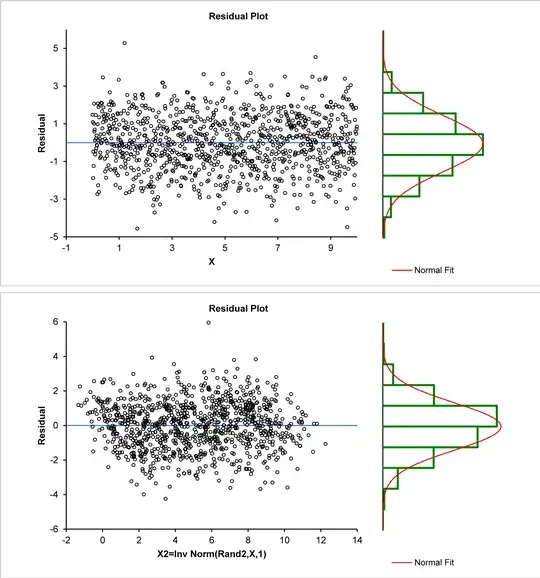

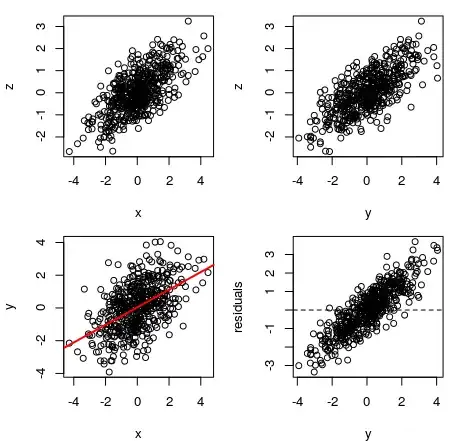

Next, let us examine the residual structure to see the effect of monovariate randomness in y versus bivariate randomness in x and y.

The first image above shows a rectangular residual band with a normal distribution pattern, suggesting an appropriate regression. The lower image shows a parallelogram structure and a skewed, non-normal residual pattern; this is what I call latent information suggesting inaccuracy. Numerically, both mean residuals are near zero ($-2.33924\times10^{-16}$ and $-3.37952\times10^{-16}$), but when normal distributions are fit to these residuals (selected by BIC), the first remains accurate, with mean $-2.33924\times10^{-16}$ and standard deviation $1.4834$, whereas the second is a shifted, more borderline normal, with mean $0.0879176$ and standard deviation $1.38753$.
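A simpler, moment-based check of the residuals can be sketched as follows. It does not reproduce the BIC-selected normal fits quoted above; it only reports the mean, the sample standard deviation, and the moment skewness $g_1$ of the OLS residuals, and the helper names `ols_residuals` and `residual_summary` are hypothetical. Applied to the $\{X1,Y1\}$ and $\{X2,Y2\}$ columns, it gives a numerical counterpart to the residual plots.

```python
import math
from statistics import fmean, stdev


def ols_residuals(x, y):
    """Residuals y - (a + b x) of the OLS fit of y on x."""
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    return [yi - intercept - slope * xi for xi, yi in zip(x, y)]


def residual_summary(res):
    """Mean, sample SD (n - 1 denominator) and moment skewness g1."""
    m = fmean(res)
    m2 = fmean([(r - m) ** 2 for r in res])
    m3 = fmean([(r - m) ** 3 for r in res])
    return {"mean": m, "sd": stdev(res), "skewness": m3 / m2 ** 1.5}


if __name__ == "__main__":
    res = ols_residuals([0, 1, 2, 3, 4], [1, 3, 2, 5, 4])
    print(residual_summary(res))
```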

Q1: How do we quantify, in mathematical form, the systematic inaccuracy shown in this example when OLS regression in y is applied to bivariate data to provide not a least-error estimate line for y, but a functional relationship between x and y? For example, when comparing method A with method B (e.g., cardiac ejection fraction by method A versus by method B), we seldom care what the least-error estimate of a method B value is given a method A value. We might want to convert between methods or to find the functional relationship between them, but often we would not care to have one method predict the results of the other.

@Tim below spent a long time discussing what is and is not bias, whether or not there is a problem, whether OLS is wrong (it is the wrong tool for bivariate data), etc. His efforts are appreciated; however, that material is extraneous to the original intent of the question and has been deleted.