I saw an example on a blog where a k nearest neighbors algorithm was run to predict responses on a test data set.The MSE(mean squared error) of the predictions was larger than the variance of the response variable in the test set. Shouldn't the MSE always be less than the variance of the response variable as SSTO(total sum of squares) = RSS(model sum of squares) + SSE(error sum of squares).

-

1Your question is full of acronyms, please state what's MSE, SSTO, RSS, and SSE, so everyone can understand it, and perhaps it might increase its visibility. – Firebug Dec 13 '16 at 17:07

-

1To the question, I don't even see the reason why *test* mean-squared-error would have to be lesser than *training* data response variance. – Firebug Dec 13 '16 at 17:18

-

@Firebug I am comparing the test mean square error with test data response variance – zorny Dec 13 '16 at 17:21

-

1Okay, but it doesn't change anything. I still can't grasp the premise of this question, why would test MSE have to be smaller than variance? – Firebug Dec 13 '16 at 18:50

-

2In linear regression this makes sense on training data only. – Firebug Dec 13 '16 at 18:51

2 Answers

Case 1:

SST (total) = RSS (model) + SSE (error)

This is only true in general if the model includes an intercept (and, in relation to case 3, if the mean of the errors is 0).

(more precisely, you do not need an explicit intercept, for instance for linear regression it is sufficient that the intercept is in the column space of the regressors)

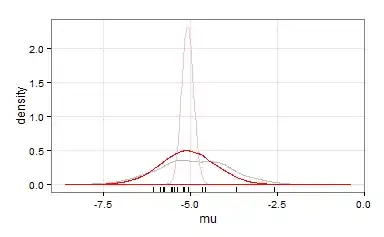

See for instance the following y=bx fit:

Clearly the error is much larger than the total variance in the data.

Case 2:

Other issues may be when you are comparing training vs test, but it is unclear whether this is the case in this question. (there is an explicit 'in the test set' mentioned, but is this the same for all terms?)

(see for instance https://stats.stackexchange.com/a/299523/164061 and the four other links in that post)

Case 3:

In the test set a similar effect may occur as with the lack of the intercept. If the model (which you fitted to the training set, and not to the test set) is not a good fit for the test set, then you may get a larger error than the total variance. (this is what Firebug meant with, 'makes sense to the training data only)

This bad fitting, for which the SSE becomes larger than the SST, is especially likely when you are over-fitting.

Case 4:

If you are fitting a Bayesian model, or some model that has bias (e.g. regularisation) then there is no guarantee that SST (total) > RSS (model).

- 43,080

- 1

- 72

- 161

The MSE is defined as $MSE = N^{-1} \sum_{i=1}^N (y_i - \hat{y}_i)^2$, where $\hat{y}_i$ is the fitted, or predicted, value.

The variance is $V = N^{-1} \sum_{i=1}^N (y_i - \bar{y})^2$, where $ \bar{y} = \sum_{i=1}^N y_i$.

It is true that in most cases, MSE will tend to be smaller than $V$, but it depends inherently on how the model fits the values $\hat{y}_i$ to the data. Given that there is some noise and some signal in the data, it will often be the case that the MSE is smaller, but not always.

- 722

- 5

- 6

-

I understand that the MSR and MSE and be different but since the MSR + MSE = MST shouldn't the variance always be larger? – zorny Dec 13 '16 at 16:56

-

I totally see where the confusion is coming form and I agree with you. But what are MSR and MST? I guess that one of those must be a definition term (the remainder), which must therefore be what can become negative. Perhaps your intuition comes from the linear regression case? – Superpronker Dec 13 '16 at 17:03

-

@Superproner. Yes my intuition comes from linear regression. Please see this link: https://onlinecourses.science.psu.edu/stat501/node/266. MSR is "regression mean square (MSR)" and MST is the mean total sum of squares. Isn't this applicable for any model. Total variance = explained variance + unexplained variance so unexplained variance cannot be greater than total variance – zorny Dec 13 '16 at 17:17

-

I believe that for the linear regression, it is a result (as you say). But for other models, it need not hold. – Superpronker Dec 13 '16 at 18:15

-

-

As I understand it, you are decomposing the variance in $y$, which is essentially $d(y,\bar{y}) = || y - \bar{y}||$, into a distance between $y$ and $\hat{y}$ and a distance between $\hat{y}$ and $\bar{y}$. By the triangle inequality, we know that the difference between two vectors is not greater than the distance where we insert a third point. So $d(y,\bar{y}) \le d(y,\hat{y}) + d(\hat{y},\bar{y})$. And sometimes we have equality here (as for OLS) but not always. – Superpronker Dec 13 '16 at 19:52