You might have a moderator on your hands. See this blog post for information.

To better understand what this means, think of this second variable as masking some of the actual relationship between the original coefficient and your response variable. Lets try a simple example:

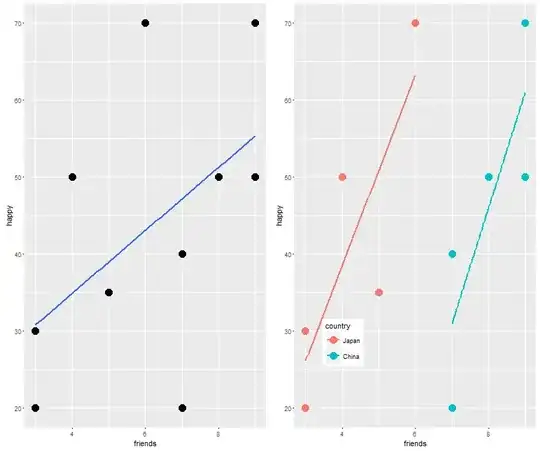

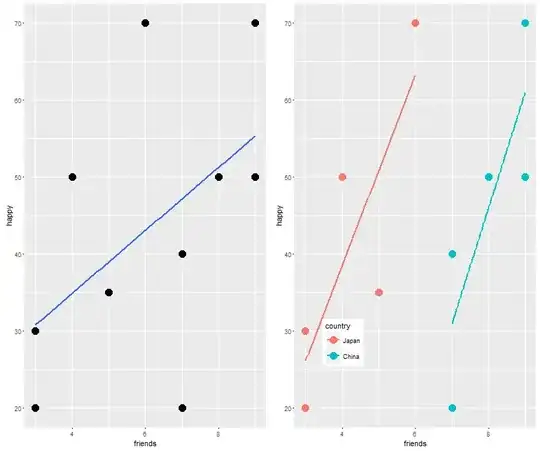

Suppose we have a simple dataset of data trying to explain happiness (presume an index of 0-100) by measuring the amount of friends and country of origin (0=Japan, 1=China).

moderator <- data.frame(friends=c(3,3,4,5,7,7,7,8,9,9), happy = c(20,30,50,35,70,20,40,50,50,70), country = c(0,0,0,0,0,1,1,1,1,1))

moderator$country <- factor(moderator$country, levels = c(0,1), labels = c("Japan", "China"))

Now we will fit a model with just friends and with both friends and country:

Call:

lm(formula = happy ~ friends, data = moderator)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 15.105 14.697 1.028 0.3341

friends 4.580 2.236 2.048 0.0747 .

And

Call:

lm(formula = happy ~ friends + country, data = moderator)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -9.079 13.541 -0.670 0.52406

friends 11.382 2.861 3.978 0.00534 **

country -35.974 12.484 -2.881 0.02360 *

Here too one can see that the coefficient for friends has increased significantly after controlling for country. This makes theoretical sense to call country a moderator. But this isn't very clear yet. so let us do something that is often overlooked - lets plot it (using the ggplot2 package):

p.1 <- ggplot(moderator, aes(x = friends, y = happy)) +

geom_point(size = 5) + geom_smooth(method=lm, se = FALSE)

p.2 <- ggplot(moderator, aes(x = friends, y = happy, colour = country)) +

geom_point(size = 5) + geom_smooth(method=lm, se = FALSE) +

theme(legend.position = c(.2, .2))

grid.arrange(p.1, p.2, ncol=2)

It is clearer now what happened. The original coefficient was small because a second factor - country, moderated the relationship. When we considered it, the coefficient became larger.