Can the Dickey-Fuller unit root test be used even when the residuals are not normally distributed?


-

To make this a more interesting thought process, let me ask a couple of simpler questions: can we use OLS to estimate a simple bivariate regression when the variables are not normal? What does the Gauss-Markov Theorem say about OLS, and what assumptions does one make? – Math-fun Jan 09 '17 at 16:06

2 Answers

Yes, that is not a necessary condition. Recall that all we know about the null distribution of the Dickey-Fuller test is its asymptotic representation (although the literature of course considers many refinements).

As is often the case, we do not need distributional assumptions on the error terms when considering asymptotic distributions thanks to (in this case: functional) central limit theory arguments.

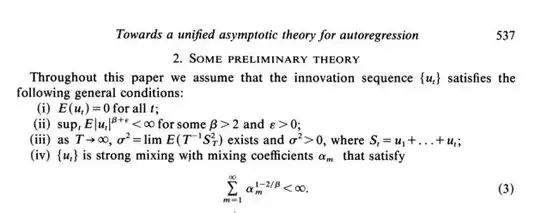

Here is a screenshot from Phillips (Biometrika 1987) stating the assumptions on the errors; as you can see, these are much broader than requiring normality.

That said, the asymptotic distribution does not have a closed-form solution, so you need to simulate from it to get critical values (the existing infinite-series representations are not practical for generating critical values either). To perform that simulation, you must draw errors from some distribution, and the conventional choice is to simulate normal errors.

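But, as Phillips shows, if you were to draw the errors from some other distribution satisfying the above requirements, you would asymptotically get the same distribution. You could replace the line with `rnorm(T)` in my answer [here](https://stats.stackexchange.com/questions/213551/how-is-the-augmented-dickey-fuller-test-adf-table-of-critical-values-calculate/213589#213589) with draws from some such distribution to verify this.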

That said, the finite-sample distribution will of course be affected by the error distribution, so the error distribution will play a role in shorter time series. (Indeed, I did replace `rnorm(T)` with `rt(T, df=8)`, and the differences are still relevant for $T$ as large as 20,000.)
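The experiment described in the last two paragraphs can be sketched in Python. This is a minimal illustration in the same spirit, not the R code from the linked answer: it assumes the simplest DF regression (no intercept, no trend), generates data under the unit-root null, and uses the standard-library `random` module in place of R's `rnorm` and `rt`. All function names are hypothetical.

```python
import math
import random


def df_tau(u):
    """DF t-statistic for rho = 0 in dy_t = rho * y_{t-1} + e_t
    (no intercept, no trend), with y generated under the unit-root
    null as y_t = y_{t-1} + u_t, y_0 = 0."""
    y_prev, sxx, sxy = 0.0, 0.0, 0.0
    pairs = []  # (y_{t-1}, dy_t), kept to compute residuals
    for dy in u:  # under the null, dy_t = u_t
        sxx += y_prev * y_prev
        sxy += y_prev * dy
        pairs.append((y_prev, dy))
        y_prev += dy
    rho_hat = sxy / sxx  # OLS slope without intercept
    ssr = sum((dy - rho_hat * x) ** 2 for x, dy in pairs)
    s2 = ssr / (len(u) - 1)  # one estimated parameter
    return rho_hat / math.sqrt(s2 / sxx)


def draw_normal(rng, n):
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def draw_t(rng, n, df=8):
    # Student t(df) as Z / sqrt(chi2_df / df), chi2_df ~ Gamma(shape df/2, scale 2).
    # tau is scale-invariant, so the t(8) variance of 8/6 needs no rescaling.
    return [rng.gauss(0.0, 1.0) / math.sqrt(rng.gammavariate(df / 2, 2.0) / df)
            for _ in range(n)]


def simulate(T, reps, draw, seed):
    """Sorted simulated tau statistics for sample size T."""
    rng = random.Random(seed)
    return sorted(df_tau(draw(rng, T)) for _ in range(reps))


def quantile(sorted_xs, p):
    # simple empirical quantile of an already sorted list
    return sorted_xs[int(p * (len(sorted_xs) - 1))]


if __name__ == "__main__":
    for name, draw in [("normal", draw_normal), ("t(8)", draw_t)]:
        taus = simulate(T=100, reps=2000, draw=draw, seed=1)
        print(name, [round(quantile(taus, p), 2) for p in (0.01, 0.05, 0.10)])
```

Increasing `T` (and `reps`) is where the two sets of quantiles should come together; at small `T` they may differ, which is the finite-sample caveat above.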


-

I am looking for practical guidelines regarding the use of Dickey-Fuller test, and @mlofton suggests ([here](https://stats.stackexchange.com/questions/474387/whats-the-relationship-between-cointegration-and-linear-regression?noredirect=1#comment890638_474387) and above) that (roughly speaking) normality cannot be assumed away. That seems to clash with your answer. How do I reconcile the two? Is one of these incorrect? – Richard Hardy Aug 12 '20 at 11:20

-

I do not think there is a clash. My answer cites the asymptotic distribution, which does not assume normal errors, as Phillips' theorems unequivocally show. That said, the asymptotic distribution does not have a closed-form solution, so you need to simulate from it to get critical values (the existing infinite-series representations are not practical for generating critical values either). To perform that simulation, you must draw errors from some distribution, and the conventional choice is to simulate normal errors. But, as Phillips shows, if you were to draw the errors from... – Christoph Hanck Aug 12 '20 at 14:30

-

...some other distribution satisfying the above requirements, you would asymptotically get the same distribution. You could replace the line with `rnorm(T)` with some such distribution in my answer here https://stats.stackexchange.com/questions/213551/how-is-the-augmented-dickey-fuller-test-adf-table-of-critical-values-calculate/213589#213589 to verify. That said, the finite-sample distribution will of course be affected by the error distribution, so that the error distribution will play a role in shorter time series. – Christoph Hanck Aug 12 '20 at 14:32

-

Thank you for a very clear explanation! Including it as an update to your answer might be a good idea. – Richard Hardy Aug 12 '20 at 14:53

-

Thanks Christoph: I never disagreed with your answer but thanks for clarification. Generally speaking, as you know, no one would ever use Phillips result because simulating analytically from the derived distribution is not terribly practical. People generally use the DF tables and those have nothing to do with asymptotic representation. They use the normality of the error term and allow the practitioner to obtain DF statistics for sample sizes as low as 20. Thanks though for clarification. – mlofton Aug 13 '20 at 14:26

Yes, the innovations need not be normal, not at all.

The underlying mathematical fact that gives rise to the asymptotic null distribution of the DF statistic is the Functional Central Limit Theorem (FCLT), also known as the Invariance Principle.

The FCLT is, if you like, an infinite-dimensional generalization of the CLT. The CLT holds for dependent, non-normal sequences, and a similar statement can be made about the FCLT.

(Conversely, the FCLT implies the CLT, since the finite-dimensional distributions of Brownian motion are normal. So any general condition that gives you an FCLT immediately implies a CLT.)

Functional Central Limit Theorem

Let $u_i$, $i = 1, 2, \dots$, be a sequence of random variables, and consider the sequence of random functions $\phi_n$, $n = 1, 2, \dots$, defined by $$ \phi_n(t) = \frac{1}{\sqrt{n}}\sum_{i = 1}^{[nt]} u_i, \; t \in [0,1], $$ where $[nt]$ denotes the integer part of $nt$. Each $\phi_n$ is a stochastic process on $[0,1]$ with sample paths in the Skorohod space $D[0,1]$.
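A minimal Python sketch of this definition, building $\phi_n$ from a given sequence $u_1, \dots, u_n$ (the helper name `partial_sum_process` is hypothetical). Evaluating at $t = 1$ gives the ordinary CLT-normalized sum, which is the sense in which the FCLT contains the CLT.

```python
import math
import random


def partial_sum_process(u):
    """Return phi_n with phi_n(t) = n**-0.5 * sum_{i=1}^{[nt]} u_i on [0, 1].

    phi_n is a right-continuous step function, i.e. a path in D[0, 1]."""
    n = len(u)
    cums = [0.0]  # cums[k] = u_1 + ... + u_k, with cums[0] = 0
    for x in u:
        cums.append(cums[-1] + x)

    def phi(t):
        if not 0.0 <= t <= 1.0:
            raise ValueError("t must lie in [0, 1]")
        k = math.floor(n * t)  # [nt], the integer part of n*t
        return cums[k] / math.sqrt(n)

    return phi


if __name__ == "__main__":
    rng = random.Random(0)
    # Non-normal, mean-zero, unit-variance errors: uniform on [-sqrt(3), sqrt(3)]
    u = [rng.uniform(-math.sqrt(3), math.sqrt(3)) for _ in range(1000)]
    phi = partial_sum_process(u)
    # phi(1) is the usual CLT-normalized sum; phi(t) traces the whole path
    print([round(phi(t), 3) for t in (0.25, 0.5, 0.75, 1.0)])
```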

The generic form of the FCLT provides sufficient conditions under which $\{ \phi_n \}$ converges weakly on $D[0,1]$ to (a scalar multiple of) the standard Brownian motion $B$.

Sufficient conditions more general than those of Phillips and Perron (1987) quoted above were known earlier, if not in the time series literature. See, for example, McLeish (1975).

Under some additional conditions, the strong mixing condition (iv) of Phillips and Perron implies McLeish's mixingale condition.

Condition (ii) of Phillips and Perron, which requires a uniform bound on the $(2 + \epsilon)$-th moments of $\{ u_i \}$, is relaxed in McLeish to uniform integrability of $\{ u_i^2 \}$.

Condition (iii) of Phillips and Perron is actually not quite correct, i.e., not sufficient as intended.

For a further milestone in the time series literature in this direction, see Elliott, Rothenberg, and Stock (1996), where they apply a Neyman-Pearson-like approach to benchmark the asymptotic power envelope of unit root tests. The normality assumption is long gone by then.

DF Statistic

It follows immediately from the FCLT and the Continuous Mapping Theorem that, in the DF regression without intercept or trend and with serially uncorrelated errors (so that their short-run and long-run variances coincide), the DF $\tau$-statistic has the asymptotic distribution $$ \tau \stackrel{d}{\rightarrow} \frac{\frac12 (B(1)^2 - 1)}{ \sqrt{ \int_0^1 B(t)^2 \, dt} }. $$ The 5th percentile of this distribution is the critical value for the (left-tailed) DF test with nominal size 5%.
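Since this limit has no closed form, one way to read off the critical value is to simulate the functional directly. A rough Python sketch, assuming a Gaussian random-walk approximation of $B$ on a grid of `m` steps (by the FCLT, other mean-zero, unit-variance increments would serve equally well for large `m`); the function names are hypothetical. The 5% quantile should land near the commonly tabulated asymptotic value of about -1.95 for the no-intercept case, up to simulation and discretization error.

```python
import math
import random


def df_limit_draw(rng, m):
    """One approximate draw of (1/2)(B(1)^2 - 1) / sqrt(int_0^1 B(t)^2 dt),
    with B approximated on an m-step grid by scaled Gaussian partial sums."""
    b, integral = 0.0, 0.0
    for _ in range(m):
        integral += b * b / m  # left Riemann sum of B(t)^2 dt
        b += rng.gauss(0.0, 1.0) / math.sqrt(m)  # increment ~ N(0, 1/m)
    return 0.5 * (b * b - 1.0) / math.sqrt(integral)


def simulate_limit(reps=2000, m=200, seed=0):
    """Sorted draws from the approximate limit distribution."""
    rng = random.Random(seed)
    return sorted(df_limit_draw(rng, m) for _ in range(reps))


def lower_quantile(sorted_xs, p):
    return sorted_xs[int(p * (len(sorted_xs) - 1))]


if __name__ == "__main__":
    draws = simulate_limit()
    for p in (0.01, 0.05, 0.10):
        print(f"{p:.0%} quantile: {lower_quantile(draws, p):.2f}")
```

Larger `reps` reduce simulation noise and larger `m` reduce discretization error; the trade-off is purely computational.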

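Simulating $\tau$ with an i.i.d. normal error term and with another error term that follows, say, a time series specification would lead to the same distribution as the sample size gets large, provided the serial correlation is accounted for (e.g., via the Phillips-Perron correction or the lagged differences in the augmented DF regression).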

Comment

I am going to disagree with @mlofton's comment that

Generally speaking, no one would ever use Phillips result because simulating analytically from the derived distribution is not terribly practical. People generally use the DF tables and those have nothing to do with asymptotic representation. They use the normality of the error term and allow the practitioner to obtain DF statistics for sample sizes as low as 20...

It's a major contribution of Phillips to point out that an "assumption-free" asymptotic distribution is possible. This is one of the reasons, along with contemporary developments in economic theory, that convinced empirical practitioners (in particular, macro-econometricians) that unit root tests belonged in their everyday toolbox. A statistic (more specifically, a null distribution) that requires normality of the data generating process is not useful at all: imagine, for example, if the $t$-statistic were only valid for i.i.d. normal data. That was the limitation of the early unit root literature.


-

Hi Michael: I've already had a long discussion with Richard on this. My point is that, to use Phillips' result to claim that the DF tables don't assume normality, is not the correct thing to do. I'm pretty certain that the DF tables do assume normality (see the link in Greene that I point to), but the best thing to do would be to do EXACTLY what DF do, except with non-normal errors, and see if the same critical values are obtained. Note that the limitation of the early unit-root literature was that, under the unit root null, the t-statistic is not t-distributed when the errors are normal. – mlofton Aug 23 '20 at 13:40

-

@mlofton "...to use Phillips' result to claim that the DF tables don't assume normality, is not the correct thing to do"---well, that is simply not true or correct. – Michael Aug 23 '20 at 13:45

-

I think it is, but we can agree to disagree. And I'm sorry, the previous link I pointed to was about the DW test rather than DF. When I have time, I'll try to ask someone (who can point me to somewhere that says yes or no) whether DF assumes normality of the error term. I'm pretty certain it does, but the only place where it says so is Greene. As I said, the best way to know would be through simulation. – mlofton Aug 23 '20 at 13:55

-

@mlofton The statements in Phillips-Perron clearly say that normality is not required at all; that's a basic fact (those results are plainly true, if not exactly under the conditions they claim). "...DF tables do assume normality...": yes, in Dickey and Fuller (JASA 1979 and Econometrica 1981). That's why unit root tests were not widely considered by empirical folks prior to Phillips: it's not reasonable to have to always assume i.i.d. N(0,sigma^2) errors for a time series. The assumptions and derivations in those papers are very primitive by today's standards. – Michael Aug 23 '20 at 14:15

-

@mlofton For something more "recent", see Elliott, Rothenberg, and Stock *Efficient Tests for an Autoregressive Unit Root* (Econometrica 1996), where they apply a Neyman-Pearson-like approach to benchmark the *asymptotic* power envelope of unit root tests. The normality assumption is long gone by then. – Michael Aug 23 '20 at 18:29

-

Hi Michael and Richard: I just emailed James Hamilton, and he said the following, so my apologies to Michael and Richard Hardy. I'm more confused, but at least I know for sure. Again, my sincere apologies for causing confusion. "DF does not assume normality. It is based on the asymptotic distribution that holds under a variety of settings. An example of conditions under which it is valid are included in my text. Alternative versions of sufficient conditions exist in a number of sources." – mlofton Aug 23 '20 at 19:01

-

@mlofton No problem. The Elliott, Rothenberg, and Stock (1996) citation has been added to the answer. – Michael Aug 23 '20 at 19:32

-

Since this uses the CLT, is this impacted by how many data points you have (I often have 50 or so)? If I understood the comment above, you could have as few as 20 points and it would be OK. I assume, since I did not see it mentioned, that the lag structure (the number of lags in the ARDL) does not matter. – user54285 Aug 23 '20 at 19:49

-

@user54285 "...since this uses the CLT...": it's much more than a CLT. "Is this impacted by how many data points you have...": yes, indeed. Unit root tests all make use of the FCLT for their asymptotics and are generally known for small-sample distortions in size and power. This is a caveat I didn't mention. In the theory literature, when a Monte Carlo exercise is performed for a newly proposed unit root test, one rarely sees the sample size chosen to be below 100. If "you...have as few as 20 points", my null hypothesis would be that any unit root test results are meaningless. – Michael Aug 23 '20 at 20:01

-

In this respect, unit root asymptotics is not unlike, say, that of the t-test. Also, the FCLT is a much stronger result than the CLT. So, informally speaking, you would expect the convergence to be slower. If you really believe the error is i.i.d. normal, then the finite-sample DF tables can be used, as @mlofton says (or just do a simulation for your given sample size). – Michael Aug 23 '20 at 20:01

-

@user54285 "...lag structure...does not matter": that's correct, asymptotically. For example, an ARMA error term with continuously distributed innovations, which you can simulate, would fall under the Phillips-Perron assumption (more precisely, the corrected formulation of their assumption). – Michael Aug 23 '20 at 20:06

-

Thanks, Michael. I am not a statistician [I just run statistical models, relying on the experts I read, such as this forum], and I have yet to learn how to simulate data the way you describe. I had never heard of the FCLT before. You mention a hundred points. I commonly have sixty; is that too few to rely on the FCLT? And if you don't have more than 60, how do you test for non-stationarity? If it matters, I use both the ADF and KPSS tests and look to see if they agree (as I have found suggested, given power issues). – user54285 Aug 23 '20 at 20:52

-

@user54285 "I commonly have sixty..."---60 is pushing the lower end of the range where you could be fine (but I, for one, cannot say for sure without knowing more). However, if you look at Nelson and Plosser (1982 JME)---a (very) important early application of unit root testing in the empirical economics literature, they applied the DF test with asymptotic critical values and sample size ranging from 60 to 100. This precedent is in your favor. – Michael Aug 23 '20 at 21:39

-

Thanks very much, Michael. Now all I have to worry about is whether there are structural breaks in my data, which, as I understand it, create problems for unit root tests [although few authors bring this up, so maybe it's not true]. – user54285 Aug 23 '20 at 22:46

-

@user54285 "...structural breaks in my data...": structural breaks are an issue. A one-time structural break in otherwise stationary data may lead to a false non-rejection of the unit root null. This was first noted and addressed in Perron (1989, Econometrica), where he proposed a unit root test that accounts for a possible structural break under the alternative and applied it to the Nelson-Plosser dataset. Any statistical software that implements the ADF test should also include the Perron test. – Michael Aug 23 '20 at 22:57

-

Is this the Phillips-Perron unit root test (sometimes people have more than one test associated with them)? The test for this that I know of is Lee and Strazicich; well, I have heard of it, but I am still not sure whether R runs it :) – user54285 Aug 23 '20 at 23:08

-

@user54285 I believe this particular test is just called the Perron test but you should check documentation for a specific software. It's also pretty easy to compute the Perron statistic yourself---mechanically it's a slight modification of the ADF regression. So you can always compute the statistic yourself and use Perron's tables for critical value. For structural breaks, there's also the more recent Zivot-Andrews test (and others). – Michael Aug 23 '20 at 23:13

-

I found 4 methods in my research, but am going to stick with Zivot-Andrews, assuming R does this. Thanks again, Michael. This is one of my greatest concerns about statistics. Structural breaks obviously matter for unit root tests, but I never heard about them until a week ago, despite a lot of reading on unit root tests. Given that there are limits on the time available for reading, I sometimes wonder whether anyone except a handful of experts can ever run these tests correctly. – user54285 Aug 23 '20 at 23:37

-

@user54285 Empirical knowledge of your particular context helps a lot and should probably enter into your prior as much as purely statistical considerations. In Nelson-Plosser's case, Perron pointed out that the 1929 Great Crash is a possible structural break (and, for postwar quarterly GNP, the 1973 oil price shock) and used his test to provide statistical evidence. Zivot-Andrews doesn't assume a known break date; a possible trade-off there is statistical vs. empirical evidence (if you're lucky, they point in the same direction). – Michael Aug 24 '20 at 00:07

-

I was reminded by the software that there are not supposed to be long discussions in comments. If you ever want to talk about time series in chat (assuming I am allowed there), I would be delighted to continue this conversation. – user54285 Aug 24 '20 at 00:13

-

Hi Michael: Thanks for your edited answer, and again my apologies. I'm definitely confused now (given what Professor Hamilton said), but based on what you said in your reply, it sounds like the 1979 paper was assuming normality of the error term and then the later papers made improvements to what they did and led to the less restrictive assumptions? Thanks for clarifying and for all of the references. When I get some time, I'll check them out. – mlofton Aug 24 '20 at 12:15

-

@mlofton "...the [Dickey-Fuller JASA] 1979 paper was assuming normality of the error term and then the later papers made improvements...and led to the less restrictive assumptions?" Yes, significant improvements. Phillips et al. noted that the asymptotic distribution is given by certain continuous functionals of the Brownian sample paths, whereas DF 1979 assumed i.i.d. normal errors and, even under this restrictive assumption, only showed the existence of a limit distribution without any closed-form characterization, and did the rest by simulation. – Michael Aug 24 '20 at 17:13

-

Michael: Gotcha. Thanks for the clarification. So, when one looks up the DF tables in, say, the Hamilton text, is one looking up the ones that DF 1979 constructed? On the other hand, when one refers to "DF tables" in such a text, maybe they are updated ones that are no longer based on DF 1979? Either way, I understand the issue a lot more clearly now. Thanks a lot, and I'm sorry for confusing the issue and causing so much of your time to be spent explaining it. Maybe you can tell that I'm old since I'm stuck on DF 1979. – mlofton Aug 25 '20 at 18:29

-

@mlofton The difference between those tables would be numerical, the distribution being simulated is the same. Consider the following analogous situation. You proved that, when data is i.i.d. normal, the t-stat has a limit distribution---just existence, no characterization of any kind. This existence then implies it makes sense to simulate, which you do and provide a table. This is DF. Now someone else comes along and points out the connection to CLT and drops the i.i.d. normality assumption completely---CLT holds under very general conditions. – Michael Aug 26 '20 at 17:53

-

@mlofton (Cont'd) So they give a characterization of the limit distribution: in this case, N(0,1). That's Phillips et al. The difference in the FCLT case is that even after you characterize it, the explicit density is not known and you still have to simulate. But the point is that the i.i.d. normal assumption is dropped completely. – Michael Aug 26 '20 at 17:55

-

Michael: That was a beautiful analogy. I get it now. Thanks so much, and apologies. Also, my apologies to Peter Phillips, since what he did was a bigger deal than I ever realized. For those reading this, Hamilton's chapter on this topic is also quite good, although he doesn't explain the relation between Dickey-Fuller (1979) and Phillips' results the way Michael did. – mlofton Aug 27 '20 at 13:32

-

@mlofton Incidentally, I believe that the sufficient condition for the FCLT stated in Hamilton is that the innovations $u_t$ are of the MA form $u_t = \sum_h \theta_h \epsilon_{t-h}$, where $\epsilon_t$ is i.i.d. with finite fourth moments and $(\theta_h) \in \ell^2$. No proof is given, and I don't believe it actually suffices. The i.i.d. with finite fourth moment assumption would imply $\sup_t E[u_t^2] < \infty$, which by itself does not guarantee McLeish's UI condition, much less Phillips and Perron's more restrictive Condition (ii). – Michael Aug 27 '20 at 23:29

-

Similarly, the relationship between an MA process and a strong mixing-type condition such as Phillips and Perron's Condition (iv) is not automatic. E.g., if $\epsilon_t$ has a Bernoulli distribution, then $u_t$ need not be strong mixing. Usually, to show that an ARMA process satisfies a mixing condition, one needs assumptions on the marginal distribution, in addition to other properties. (Cf. https://stats.stackexchange.com/questions/484767/stationarity-and-ergodicity-links/484844#484844) – Michael Aug 27 '20 at 23:31

-

Thanks, Michael. I'll have to look at what you said more carefully when I have more time. At the moment it's not clear, but it has some potential to become clearer with effort on my part. Thanks. – mlofton Aug 29 '20 at 00:02