Reinforcement learning is fairly new to me, but hopefully this is helpful. Firstly, some general remarks.

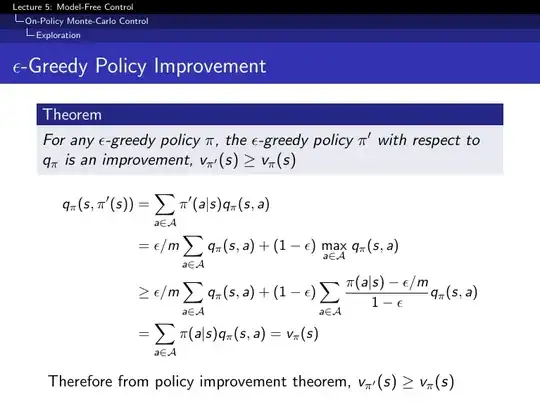

The theorem states that the new policy will not be worse than the previous policy, not that it has to be better.

There is a slight difference in the formulation of the theorem between the book and the slides. The book specifies the first policy to be $\epsilon$-soft, whereas the slides specify $\epsilon$-greedy.

An $\epsilon$-greedy policy is $\epsilon$-greedy with respect to an action-value function, it's useful to think about which action-value function a policy is greedy/$\epsilon$-greedy with respect to.

The $\epsilon$-Greedy policy improvement theorem is the stochastic extension of the policy improvement theorem discussed earlier in Sutton (section 4.2) and in David Silver's lecture. The motivation for the theorem is that we want to find a way of improving policies while ensuring that we explore the environment. Deterministic policies are no good now as there may be state-action pairs that are never encountered under them. One way to ensure exploration is via so called $\epsilon$-soft policies. A policy is $\epsilon$-soft if all actions $a$ have probability of being taken $p(a) \ge \epsilon/N_{a}$, where $\epsilon > 0$ and $N_{a}$ is the number of possible actions.

In both cases of policy improvement the aim is to find a policy $\pi'$ that satisfies $q(s,\pi'(s)) \ge q(s,\pi(s)) = v^{\pi}(s)$, as this implies that $v^{\pi'}(s) \ge v^{\pi}(s)$ and thus we have a better policy. In the deterministic case it was straightforward to arrive at such a policy by construction so it was not necessary to demonstrate that the condition was satisfied. In the stochastic case it is less obvious, and the solution to constructing such a new

policy needs to be shown to satisfy the required condition.

In the deterministic limit ($\epsilon = 0$) of a full greedy policy, we are just back at the case of deterministic policy improvement. Our new policy $\pi'$ is the greedy policy with respect to the action value function of $\pi$, this is exactly the policy constructed to meet the condition in the deterministic case. You're right to say the theorem doesn't say much in this case, it says exactly as much as we already know from the deterministic case, which didn't require proof. We already know how things work in this case and we want to generalise to non-deterministic policies. Hopefully that resolves your second area of confusion.

How do we find a better stochastic policy?

The theorem (according to the book) tells us that given an $\epsilon$-soft policy, $\pi$, we can find a better policy, $\pi'$, by taking the $\epsilon$-greedy policy with respect to the action-value function of $\pi$. Note that $\pi'$ is $\epsilon$-greedy with respect to the action-value function of the previous policy $\pi$, not (in general) its own action-value function. Any policy that is $\epsilon$-greedy with respect to any action-value function is $\epsilon$-soft, i.e. the new policy is still $\epsilon$-soft. Hence we can find another new policy, $\pi''$, this time taking it to be $\epsilon$-greedy with respect to the action-value function of $\pi'$, and this will be better again. We are aiming at the optimal $\epsilon$-soft policy. When we arrive at a policy that is $\epsilon$-greedy with respect to its own action-value function then we are done.

Clearly if a policy is e-greedy, then it is $\epsilon$-soft, giving the formulation on the slide. I think the discussion of $\epsilon$-soft policies in the book helps to illuminate the theorem. Potentially they have avoided introducing $\epsilon$-soft in the slides to reduce the amount of new terminology being presented.

In the case that $\epsilon$ $\approx$ 1, remember that the original policy is also $\epsilon$-greedy so will be almost random. In this case we're essentially saying that we can find an almost random policy that is (occasionally) greedy in a better way than a previous almost random policy. Specifically, we make it "greedy in a better way" by making it $\epsilon$-greedy with respect to the action-value function of the previous policy.