The first case mentioned in the question can be solved with a very simple method (non-iterative, no initial guess needed) thanks to the piecewise linear regression given on page 12 of this paper: https://fr.scribd.com/document/380941024/Regression-par-morceaux-Piecewise-Regression-pdf

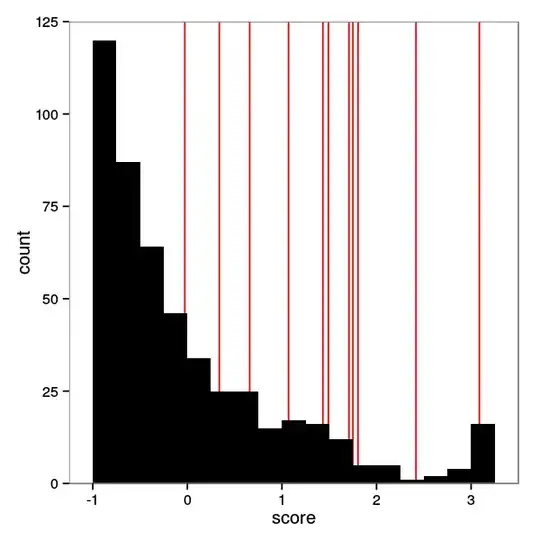

The result

$$\begin{cases}
y=p_1x+q_1 & x<a_1 \\
y=p_2x+q_2 & x>a_1
\end{cases}$$

is shown in the next figure.
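The closed-form method of the paper is not reproduced here. Purely as a point of comparison, the sketch below fits the same two-segment model with a different, brute-force technique: it sorts the data by $x$, tries every split position, fits each side by ordinary least squares (`numpy.polyfit`, degree 1) and keeps the split with the smallest total squared residual. It needs no initial guess either, but it performs $O(n)$ small fits instead of a single direct computation. The function name `two_segment_fit` and the choice of $a_1$ as the midpoint between the two neighbouring abscissas are assumptions for illustration.

```python
import numpy as np

def two_segment_fit(x, y, min_pts=2):
    """Fit y = p1*x + q1 for x < a1 and y = p2*x + q2 for x > a1.

    Brute-force sketch (NOT the paper's method): every split of the
    x-sorted data is tried and the split with the smallest total squared
    residual is kept. Returns (p1, q1, p2, q2, a1).
    """
    order = np.argsort(x)
    x = np.asarray(x, dtype=float)[order]
    y = np.asarray(y, dtype=float)[order]
    n = len(x)
    best = None
    for k in range(min_pts, n - min_pts + 1):
        if x[k - 1] == x[k]:
            continue  # no breakpoint can separate equal abscissas
        p1, q1 = np.polyfit(x[:k], y[:k], 1)
        p2, q2 = np.polyfit(x[k:], y[k:], 1)
        sse = (np.sum((y[:k] - (p1 * x[:k] + q1)) ** 2)
               + np.sum((y[k:] - (p2 * x[k:] + q2)) ** 2))
        if best is None or sse < best[0]:
            a1 = 0.5 * (x[k - 1] + x[k])  # assumed: midpoint of the gap
            best = (sse, p1, q1, p2, q2, a1)
    if best is None:
        raise ValueError("not enough distinct points for two segments")
    return best[1:]
```

For example, with points on $y=2x+1$ for $x=0,\dots,4$ and on $y=-x+3$ for $x=5,\dots,9$, the search returns slopes and intercepts close to $(2,1)$ and $(-1,3)$ with $a_1=4.5$.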

The second case, the so-called "Branching Stick regression", is substantially different because the intended function is multivalued over a range of $x$.

In fact, this case is a sub-case of the "degenerated conic regression", which is treated on page 19 of another paper: https://fr.scribd.com/doc/14819165/Regressions-coniques-quadriques-circulaire-spherique

The sought multivalued function must be at least of second order, for example a conic section with equation:

$$a_{02}y^2+a_{20}x^2+a_{11}xy+a_{01}y+a_{10}x+1=0 \tag 1$$

A degenerate case is the pair of straight lines $y=p_1x+q_1$ and $y=p_2x+q_2$, whose equation is:

$$(p_1x+q_1-y)(p_2x+q_2-y)=0 \tag 2$$
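To make the link between $(1)$ and $(2)$ explicit, one can expand $(2)$ and divide by $q_1q_2$ so that the constant term is $1$, as in $(1)$. This assumes $q_1q_2\neq0$, i.e. neither line passes through the origin:

$$y^2+p_1p_2x^2-(p_1+p_2)xy-(q_1+q_2)y+(p_1q_2+p_2q_1)x+q_1q_2=0$$

$$a_{02}=\frac{1}{q_1q_2},\quad a_{20}=\frac{p_1p_2}{q_1q_2},\quad a_{11}=-\frac{p_1+p_2}{q_1q_2},\quad a_{01}=-\frac{q_1+q_2}{q_1q_2},\quad a_{10}=\frac{p_1q_2+p_2q_1}{q_1q_2}$$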

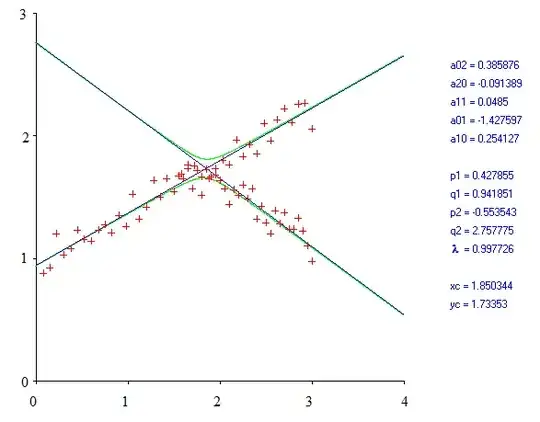

Since equation $(1)$ is linear in the unknown coefficients, an ordinary linear regression gives approximate values of $a_{02},a_{20},a_{11},a_{01},a_{10}$.
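A minimal sketch of that regression, assuming NumPy: the constant term of $(1)$ is moved to the right-hand side, which leaves an overdetermined linear system solved with `numpy.linalg.lstsq`. This minimizes the algebraic residual of $(1)$; the exact criterion used in the referenced paper may differ. The normalization "constant term $=1$" also assumes the curve does not pass through the origin. The name `fit_conic` is hypothetical.

```python
import numpy as np

def fit_conic(x, y):
    """Least-squares fit of a02*y^2 + a20*x^2 + a11*x*y + a01*y + a10*x + 1 = 0.

    Returns (a02, a20, a11, a01, a10).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # One column per unknown coefficient, in the order of equation (1)
    A = np.column_stack([y**2, x**2, x * y, y, x])
    # The constant term "+1" moves to the right-hand side as -1
    b = -np.ones_like(x)
    coeffs, *_ = np.linalg.lstsq(A, b, rcond=None)
    return tuple(coeffs)
```

With noise-free points taken on $y=2x+1$ and $y=-x+3$, the fit recovers the normalized coefficients of $(2)$, namely $a_{02}=1/3$, $a_{20}=-2/3$, $a_{11}=-1/3$, $a_{01}=-4/3$, $a_{10}=5/3$.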

As explained in the referenced paper, equations $(1)$ and $(2)$ coincide if the data have no scatter. With scattered data, the regression yields a hyperbola, equation $(1)$, that is close to its asymptotes, equation $(2)$. The formulas to compute $p_1,q_1,p_2,q_2$ are given in the referenced paper.
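The paper's own formulas are not reproduced here. As an assumed alternative, standard conic geometry gives the asymptotes of $(1)$ directly: their slopes $p$ are the roots of $a_{02}p^2+a_{11}p+a_{20}=0$ (the quadratic part of $(1)$ factored as two lines through the origin), and both lines pass through the centre of the hyperbola, where both partial derivatives of $(1)$ vanish. That centre, being the intersection of the two lines, plays the role of the branching point $(x_c,y_c)$. The sketch assumes $a_{02}\neq0$ (no vertical asymptote); the name `asymptotes` is hypothetical.

```python
import math
import numpy as np

def asymptotes(a02, a20, a11, a01, a10):
    """Asymptotes of the conic (1), taken as the two fitted lines.

    Returns ([(p1, q1), (p2, q2)] sorted by slope, (xc, yc)).
    """
    disc = a11**2 - 4.0 * a02 * a20
    if disc <= 0:
        raise ValueError("equation (1) is not a hyperbola: no real asymptotes")
    root = math.sqrt(disc)
    # Slopes: roots of a02*p^2 + a11*p + a20 = 0 (assumes a02 != 0)
    slopes = sorted([(-a11 - root) / (2 * a02), (-a11 + root) / (2 * a02)])
    # Centre: 2*a20*x + a11*y + a10 = 0 and a11*x + 2*a02*y + a01 = 0.
    # The determinant of this system is -disc, so it is nonzero here.
    M = np.array([[2 * a20, a11], [a11, 2 * a02]])
    xc, yc = np.linalg.solve(M, [-a10, -a01])
    # Each asymptote passes through the centre: q = yc - p*xc
    lines = [(p, yc - p * xc) for p in slopes]
    return lines, (xc, yc)
```

With the coefficients $(1/3,-2/3,-1/3,-4/3,5/3)$ of the line pair $y=2x+1$, $y=-x+3$, this returns the lines $(p,q)=(-1,3)$ and $(2,1)$ and the centre $(2/3,7/3)$. With scattered data the same computation applies to the fitted hyperbola.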

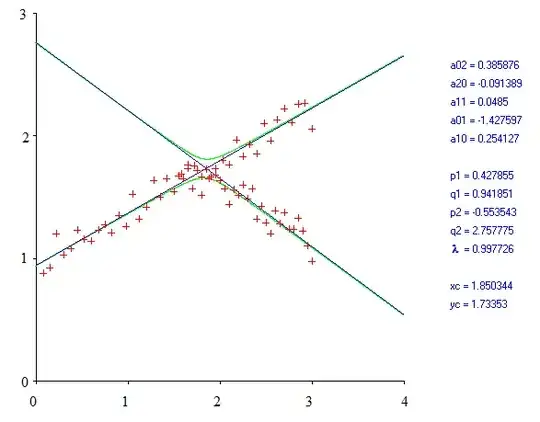

A numerical example is shown in the figure below. The hyperbola obtained from the regression is drawn in green and its asymptotes in blue. The coordinates of the "Branching point" are $(x_c,y_c)$.

The referenced paper points out that the "degenerated conic regression" method fails if the scatter of the data is too large, because the gap between the hyperbola and its asymptotes then becomes too large.