HoraceT and CliffAB (sorry too long for comments) I’m afraid I have a lifetime of examples, which have also taught me that I need to be very careful with their explanation, if I wish to avoid offending people. So while I don’t want your indulgence, I do ask for your patience. Here goes:

To start with an extreme example, I once saw a proposed survey question that asked illiterate village farmers (South East Asia), to estimate their ‘economic rate of return’. Leaving the response options aside for now, we can hopefully all see that this a stupid thing to do, but consistently explaining why it is stupid is not so easy. Yes, we can simply say that it is stupid because the respondent will not understand the question and just dismiss it as a semantic issue. But this is really not good enough in a research context. The fact that this question was ever suggested implies that researchers have inherent variability on what they consider ‘stupid’. To address this more objectively, we must step back and transparently declare a relevant framework for decision making about such things. There are many such options, and I will use one that I sometimes find useful - but have no intent of defending here (I actively encourage anyone to think of others, as it means you are already starting down the road to better conceptualizations).

So, let’s transparently assume that we have two basic information types we can use in analyses: qualitative and quantitative. And that the two are related by a transformative process, such that all quantitative information started out as qualitative information but went through the following (oversimplified) steps:

- Convention setting (e.g. we all decided that [regardless of how we individually perceive it], that we will all call the colour of a daytime open sky “blue”.)

- Classification (e.g. we assess everything in a room by this convention and separate all items into ‘blue’ or ‘not blue’ categories)

- Count (we count/detect the ‘quantity’ of blue things in the room)

Note that (under this model) without step 1, there is no such thing as a quality and if you don’t start with step 1, you can never generate a meaningful quantity.

Once stated, this all looks very obvious, but it is such sets of first principles that (I find) are most commonly overlooked and therefore result in ‘Garbage-In’.

So the ‘stupidity’ in the example above becomes very clearly definable as a failure to set a common convention between the researcher and the respondents. Of course this is an extreme example, but much more subtle mistakes can be equally garbage generating. Another example I have seen is a survey of farmers in rural Somalia, that asked “How has climate change affected your livelihood?” Again putting response options aside for the moment, I would suggest that even asking this of farmers in the Mid-West of the United States would constitute a serious failure to use a common convention between researcher and respondent (i.e. as to what is being measured as ‘climate change’).

Now let’s move on to response options. By allowing respondents to self-code responses from a set of multiple choice options or similar construct you are pushing this ‘convention’ issue into this aspect of questioning as well. This may be fine if we all stick to effectively ‘universal’ conventions in response categories (e.g. question: what town do you live in? response categories: list of all towns in research area [plus ‘not in this area’]).

However, many researchers actually seem to take pride in the subtle nuancing of their questions and response categories to meet their needs.

In the same survey that the ‘rate of economic return’ question appeared, the researcher also asked the respondents (poor villagers), to provide which economic sector they contributed to: with response categories of ‘production’, ‘service’, ‘manufacturing’ and ‘marketing’. Again a qualitative convention issue obviously arises here. However, because he made the responses mutually exclusive, such that respondents could only choose one option (because “it is easier to feed into SPSS that way”), and village farmers routinely produce crops, sell their labour, manufacture handicrafts and take everything to local markets themselves, this particular researcher did not just have a convention issue with his respondents, he had one with reality itself.

This is why old bores like myself will always recommend the more work intensive approach of applying coding to data post-collection - as at least you can adequately train coders in researcher-held conventions (and note that trying to impart such conventions to respondents in ‘survey instructions’ is a mug’s game –just trust me on this one for now).

Also note also that if you accept the above ‘information model’ (which, again, I am not claiming you have to), it also shows why quasi-ordinal response scales have a bad reputation. It is not just the basic maths issues under the Steven’s convention (i.e. you need to define a meaningful origin even for ordinals, you can’t add and average them, etc. etc.), it is also that they have often never been through any transparently declared and logically consistent transformative process that would amount to ‘quantification’ (i.e. an extended version of the model used above that also encompasses generation of ‘ordinal quantities’ [-this is not hard to do]). Anyway, if it does not satisfy the requirements of being either qualitative or quantitative information, then the researcher is actually claiming to have discovered a new type of information outside the framework, and the onus is therefore on them to explain its fundamental conceptual basis fully (i.e. transparently define a new framework).

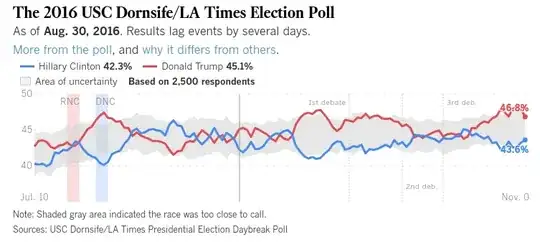

Finally let’s look at sampling issues (and I think this aligns with some of the other answers already here). For example, if a researcher wants to apply a convention of what constitutes a ‘liberal’ voter, they need to be sure that the demographic information they use to choose their sampling regime is consistent with this convention. This level is usually the easiest to identify and deal with as it is largely within researcher control and is most often the type of assumed qualitative convention that is transparently declared in research. This is also why it is the level usually discussed or critiqued, while the more fundamental issues go unaddressed.

So while pollers stick to questions like ‘who do you plan to vote for at this point in time?’, we are probably still ok, but many of them want to get much ‘fancier’ than this…