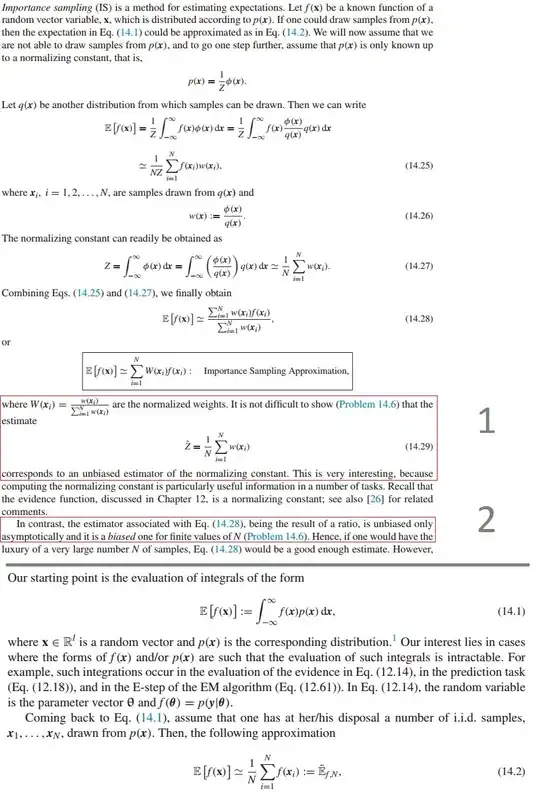

$\newcommand{\E}{\mathbb{E}}$I'm reading a book on machine learning and sampling methods and I want to know why the estimator of the normalizing constant is unbiased, but the estimator of $\E\left[f(x)\right]$ is biased. Please see the image below:

My question is, how we can prove that importance sampling,

- leads to an unbiased estimator of the normalizing constant or $\E\left[\hat{Z}\right] = Z $;

- and the estimator of $\E\left[f(x)\right]$ for a function $f(.)$ is a biased.

Edit:

Suppose $Z$ is the normalizing constant of the desired distribution $p(x) = \phi(x)/Z$ ($p(x)$ is only known up to the normalizing constant $Z$). We have

$$I = \E\left[{\bf{f}}({\bf{x}})\right] = \frac{1}{Z}\int {{\bf{f}}({\bf{x}})\varphi ({\bf{x}})} d{\bf{x}} = \frac{1}{Z}\int {{\bf{f}}({\bf{x}})\frac{{\varphi ({\bf{x}})}}{{q({\bf{x}})}}q({\bf{x}})} d{\bf{x}}$$

and its approximation

$$I_N = \frac{{\frac{1}{N}\sum\nolimits_{i = 1}^N {{\bf{f}}({{\bf{x}}^i})w({{\bf{x}}^i})} }}{{\frac{1}{N}\sum\nolimits_{j = 1}^N {w({{\bf{x}}^j})} }} = \sum\nolimits_{i = 1}^N {{\bf{f}}({{\bf{x}}^i})W({{\bf{x}}^i})} $$

as well as

$$\hat Z = \frac{1}{N}\sum\nolimits_{i = 1}^N {w({{\bf{x}}^i})} $$

My questions are:

1- Why the author takes $Z = \int {\varphi ({\bf{x}})} d{\bf{x}}$?

2- I'm not able to prove mathematically that $\E\left[\hat{Z}\right] =Z $ ,

3- and I want to know how one can prove that $\hat{I_N}$ is biased for finite values of N?