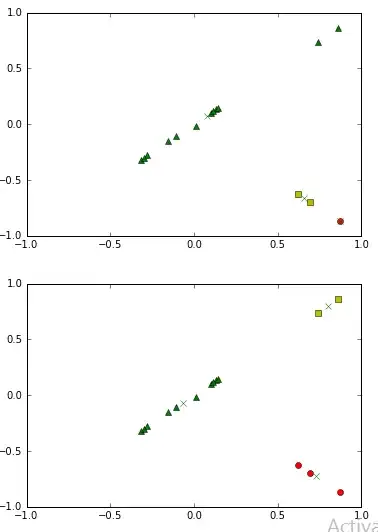

I have small dataset of 15 points. K-means clustering 2 time gives me this result.

Besides the random initializing the centroids, what could a reason for this bizarre graph(1st one) that it has given? I am yet to understand this simple algorithm to full extent.