First, a correction to the problem statement: the index should be $m$ rather than $u$. To keep things simple, I will use only the indices $i$ and $j$.

We want to prove that

$$\operatorname{Var}\left(\frac{X_1+X_2+\cdots+X_n}{n}\right) = \dfrac{\gamma(0)}{n} + \dfrac{2}{n} \sum_{i=1}^{n-1} \left(1-\dfrac{i}{n}\right) \gamma(i).$$

The beginning is correct:

$$\operatorname{Var}(\bar{X}) = \dfrac{1}{n^2} \sum_{i=1}^n\sum_{j=1}^n \operatorname{Cov}(X_i,X_j)$$

Notice that $\operatorname{Cov}(X_i,X_j) = \operatorname{Cov}(X_j,X_i)$ and, from our assumptions about the problem, that $\operatorname{Cov}(X_i,X_{i+h}) = \operatorname{Cov}(X_i,X_{i-h}) = \gamma(h)$ for any $i$ and $h$; that is, the covariance depends only on the lag $h$.

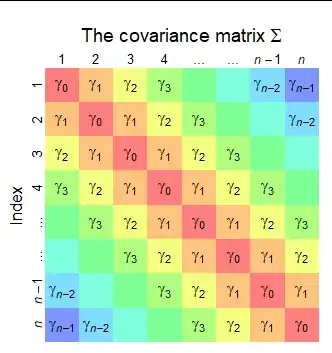

We can visualize the terms of the double sum over $i$ and $j$ as follows, writing $\operatorname{Cov}(i,j)$ as shorthand for $\operatorname{Cov}(X_i,X_j)$:

$$\left| \begin{array}{ccccc}
\operatorname{Cov}(1,1) & \operatorname{Cov}(1,2) & \cdots & \operatorname{Cov}(1,n-1) & \operatorname{Cov}(1,n)\\
\operatorname{Cov}(2,1) & \operatorname{Cov}(2,2) & \cdots & \operatorname{Cov}(2,n-1) & \operatorname{Cov}(2,n)\\
\vdots & \vdots & \ddots & \vdots & \vdots\\
\operatorname{Cov}(n-1,1) & \operatorname{Cov}(n-1,2) & \cdots & \operatorname{Cov}(n-1,n-1) & \operatorname{Cov}(n-1,n)\\
\operatorname{Cov}(n,1) & \operatorname{Cov}(n,2) & \cdots & \operatorname{Cov}(n,n-1) & \operatorname{Cov}(n,n)
\end{array} \right|$$

This is equal to

$$\left| \begin{array}{cccc}
\gamma(0) & \gamma(1) & \cdots & \gamma(n-1)\\
\gamma(1) & \gamma(0) & \cdots & \gamma(n-2)\\
\vdots & \vdots & \ddots & \vdots\\
\gamma(n-1) & \gamma(n-2) & \cdots & \gamma(0)
\end{array} \right|$$

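To sum all the elements, first sum the main diagonal, which contributes $n\gamma(0)$. The array is symmetric, and the $i$-th diagonal above (or below) the main one has $n-i$ entries, each equal to $\gamma(i)$, so every off-diagonal is counted twice: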

$$\sum_{i=1}^n\sum_{j=1}^n \operatorname{Cov}(X_i,X_j) = n \gamma(0) + 2\sum_{i=1}^{n-1}(n-i)\gamma(i).$$
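
As a quick sanity check of the diagonal counting, here is the case $n=3$ (a value picked only for illustration, not part of the original question). The array has three entries $\gamma(0)$, four entries $\gamma(1)$ and two entries $\gamma(2)$, which agrees with the formula:

$$\left| \begin{array}{ccc}
\gamma(0) & \gamma(1) & \gamma(2)\\
\gamma(1) & \gamma(0) & \gamma(1)\\
\gamma(2) & \gamma(1) & \gamma(0)
\end{array} \right|
\quad\Longrightarrow\quad
3\gamma(0) + 2\left[(3-1)\gamma(1) + (3-2)\gamma(2)\right] = 3\gamma(0) + 4\gamma(1) + 2\gamma(2).$$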

Substituting this back into the main equation and dividing by $n^2$ gives

$$\operatorname{Var}\left(\frac{X_1+X_2+\cdots+X_n}{n}\right) = \dfrac{\gamma(0)}{n}+\dfrac{2}{n^2}\sum_{i=1}^{n-1}(n-i)\gamma(i) = \dfrac{\gamma(0)}{n}+\dfrac{2}{n}\sum_{i=1}^{n-1}\left(1-\dfrac{i}{n}\right)\gamma(i).$$
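
If it helps to convince yourself numerically, the identity can be checked with a few lines of Python. This is only a sketch: the function names `var_mean_direct` and `var_mean_formula` are made up for this answer, and the autocovariance $\gamma(h) = 0.6^h$ is an arbitrary illustrative choice, not something given in the question.

```python
import math

def var_mean_direct(gamma):
    """(1/n^2) times the sum of all n*n entries, where entry (i, j) is gamma(|i - j|)."""
    n = len(gamma)
    total = sum(gamma[abs(i - j)] for i in range(n) for j in range(n))
    return total / n**2

def var_mean_formula(gamma):
    """gamma(0)/n + (2/n) * sum_{h=1}^{n-1} (1 - h/n) * gamma(h)."""
    n = len(gamma)
    tail = sum((1 - h / n) * gamma[h] for h in range(1, n))
    return gamma[0] / n + (2 / n) * tail

# Illustrative autocovariance only (an assumption): gamma(h) = 0.6**h for h = 0..n-1
n = 8
gamma = [0.6 ** h for h in range(n)]

direct = var_mean_direct(gamma)
closed = var_mean_formula(gamma)
print(direct, closed, math.isclose(direct, closed))
```

The first function sums the whole array entry by entry, while the second uses the diagonal counting from the derivation, so the two should agree up to floating-point rounding for any list `gamma`.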