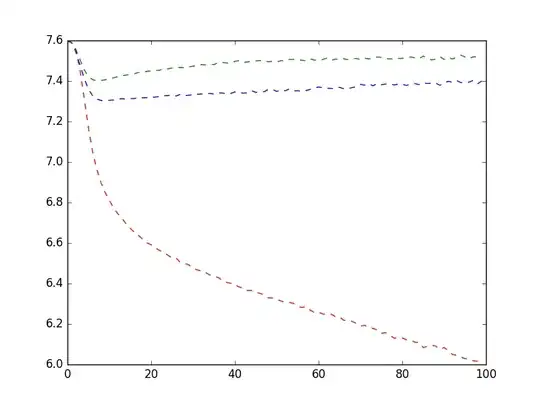

I designed my own neural network for text summarization. My training dataset is large (more than 100,000 documents), so it is hard to evaluate the model on the whole dataset. To verify that my model is good, I trained it on a very small dataset (100 documents) for about 100 epochs to see how it behaves. I split this small dataset into three sets with a 6/2/2 ratio: training, validation, and test. Here is the chart of the losses (the red line is training loss, the blue line is validation loss, and the green line is test loss).
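For reference, a 6/2/2 split of 100 documents like the one described above could be sketched as follows. This is only a minimal illustration under assumptions, not necessarily the exact code used: `split_dataset` and the placeholder `documents` list are hypothetical names, and the shuffle with a fixed seed is an assumption.

```python
import random


def split_dataset(documents, parts=(6, 2, 2), seed=0):
    """Shuffle documents and split them into train/validation/test lists.

    `parts` gives the relative sizes (6/2/2 here); hypothetical helper.
    """
    docs = list(documents)
    # Assumption: shuffle with a fixed seed so the split is reproducible.
    random.Random(seed).shuffle(docs)

    total = sum(parts)
    # Integer arithmetic avoids floating-point rounding in the split sizes.
    n_train = len(docs) * parts[0] // total
    n_val = len(docs) * parts[1] // total

    train = docs[:n_train]
    val = docs[n_train:n_train + n_val]
    test = docs[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Placeholder data standing in for the 100 real documents.
documents = [f"doc_{i}" for i in range(100)]
train, val, test = split_dataset(documents)
print(len(train), len(val), len(test))
```

With 100 documents this leaves only 20 documents each for validation and test, so the validation and test loss curves are computed on very few examples.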

Is my evaluation on this small dataset good enough to tell whether my model is performing well?

Does the above chart show that my model overfits easily?

Do you have any recommendations for quickly evaluating a newly proposed model and avoiding overfitting?

Update:

I trained my network again on a larger dataset (4,000 documents) and got the following chart. It does seem that my model is not well designed.