Using Bayes' theorem is not the same as using Bayesian statistics. You are mixing up two different things.

If you knew the conditional probability of a person's gender given their luck in the lottery, $\Pr(\text{gender} \mid \text{win})$, and the unconditional probability distribution of winning, $\Pr(\text{win})$, then you could apply Bayes' theorem to compute $\Pr(\text{win} \mid \text{gender})$. Notice that I did not use terms such as prior, likelihood, or posterior anywhere here, since they have nothing to do with such problems. (You could use a naive Bayes classifier for such problems, but first, it is not Bayesian since it does not use priors, and second, you have insufficient data for it.)
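Written out, that computation would look roughly as follows. The "no win" event and the expansion of $\Pr(\text{gender})$ by the law of total probability are my notation, added only to show where the denominator comes from:

$$ \Pr(\text{win} \mid \text{gender}) = \frac{\Pr(\text{gender} \mid \text{win}) \, \Pr(\text{win})}{\Pr(\text{gender})}, \qquad \Pr(\text{gender}) = \Pr(\text{gender} \mid \text{win}) \, \Pr(\text{win}) + \Pr(\text{gender} \mid \text{no win}) \, \Pr(\text{no win}) $$

Every quantity here is an ordinary probability of an observable event; no parameter is being given a distribution.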

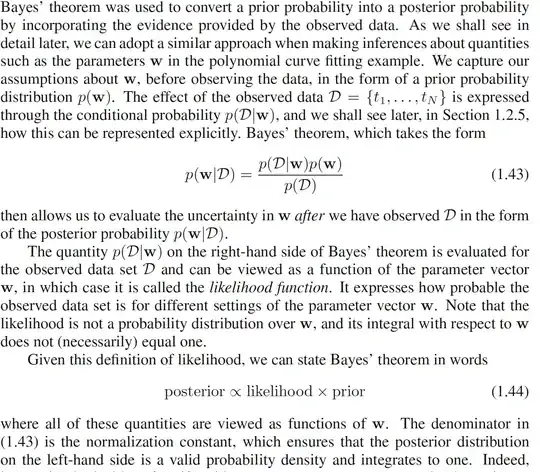

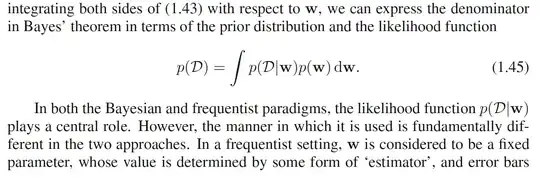

As your quote mentioned, in the Bayesian approach we have a prior, a likelihood, and a posterior. The likelihood is the conditional distribution of the data given some parameter. The prior is the distribution of this parameter that you assume a priori, before seeing the data. The posterior is the distribution of the parameter given the data you have and your prior.
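In symbols, the three pieces fit together through Bayes' theorem applied to the parameter. Here $\theta$ is a generic parameter, $X$ is the data, and $f$ denotes a density or mass function; the symbol names are my choice for illustration:

$$ \underbrace{f(\theta \mid X)}_{\text{posterior}} = \frac{\overbrace{f(X \mid \theta)}^{\text{likelihood}} \; \overbrace{f(\theta)}^{\text{prior}}}{\int f(X \mid \theta') \, f(\theta') \, d\theta'} \;\propto\; f(X \mid \theta) \, f(\theta) $$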

To give a concrete example, let's assume that you have data about some coin: you tossed it once and observed a head. Let's call this observation $X$. Obviously, $X$ follows a Bernoulli distribution parametrized by some parameter $p$ that is unknown and that we want to estimate. We do not know what $p$ is, but we have the likelihood function $f(X \mid p)$, that is, the probability mass function of the Bernoulli distribution over $X$ parametrized by $p$. To learn about $p$ the Bayesian way, we assume a prior for $p$. Since we have no clue what $p$ could be, we can decide to use a weakly informative "uniform" Beta(1,1) prior. So our model becomes

$$ \begin{aligned}
X &\sim \mathrm{Bernoulli}(p) \\
p &\sim \mathrm{Beta}(\alpha, \beta)
\end{aligned} $$

where $\alpha = \beta = 1$ are the parameters of the beta distribution. Since the beta distribution is a conjugate prior for the Bernoulli distribution, we can easily compute the posterior distribution of $p$

$$ p \mid X \sim \mathrm{Beta}(\alpha + 1, \beta) $$

and its expected value

$$ E(p \mid X) = \frac{\alpha + 1}{\alpha + \beta + 1} = \frac{2}{3} \approx 0.67 $$

so given the data we have and assuming a Beta(1,1) prior, the expected value of $p$ is about $0.67$.
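If you want to check this numerically, here is a minimal Python sketch of the conjugate update. It assumes the standard beta-Bernoulli rule (each head adds 1 to $\alpha$, each tail adds 1 to $\beta$); the function names `beta_bernoulli_update` and `beta_mean` are made up for this illustration and do not come from any library.

```python
def beta_bernoulli_update(alpha, beta, observations):
    """Return the Beta posterior parameters after Bernoulli observations.

    observations is a sequence of 1 (head) and 0 (tail).
    Hypothetical helper written for this example.
    """
    heads = sum(observations)
    tails = len(observations) - heads
    # Conjugate update: heads are added to alpha, tails to beta.
    return alpha + heads, beta + tails


def beta_mean(alpha, beta):
    """Expected value of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Beta(1, 1) prior and a single toss that came up heads, as in the example.
post_alpha, post_beta = beta_bernoulli_update(1, 1, [1])
posterior_mean = beta_mean(post_alpha, post_beta)

print(f"posterior: Beta({post_alpha}, {post_beta})")
print(f"posterior mean: {posterior_mean:.4f}")
```

With one observed head this gives a Beta(2, 1) posterior with mean 2/3. Passing a longer list of tosses shows how the posterior mean moves away from the prior mean of 0.5 as data accumulate.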