I'm reading Introduction to Statistical Learning. The relevant part is referenced here: Proof/Derivation of Residual Sum of Squares (Based on Introduction to Statistical Learning).

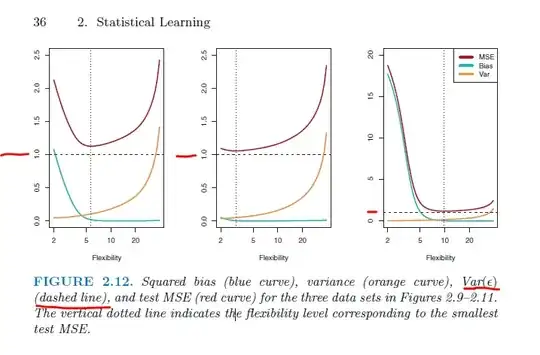

When the author shows graphs that illustrate "Bias vs Variance Tradeoff" (as in Figure 2.12), the ${\rm Var}(\varepsilon)$ is always $1$ (note the dashed lines in the figures):

The conditions of $\varepsilon$ are clarified elsewhere, as on page 16:

$\varepsilon$ is a random error term, which is independent of $X$ and has mean zero.

... and there is some explanation about going from "random error term" to "irreducible error":

However, even if it were possible to form a perfect estimate for $f$, so that our estimated response took the form $\hat{Y} = f(X)$, our prediction would still have some error in it! This is because $Y$ is also a function of $\varepsilon$, which, by definition, cannot be predicted using $X$. Therefore, variability associated with $\varepsilon$ also affects the accuracy of our predictions.

But I don't see anywhere in the other SO questions, nor in the book: why is $Var(\varepsilon)$ always at 1?

- Is it because the "mean is zero"? I don't think so; I could describe a dataset with mean of zero but a variance of $\ne 1$.

- Is it because, as described elsewhere, the "the error term $\varepsilon$ is normally distributed"? I don't know enough about the normal distribution; is the variance of a normal distribution is always equal to some value?

EDIT

In looking for help in Wikipedia's MSE article, I expected to find a consistent formula with the "three fundamental quantities" (i.e., the variance, the bias, and the variance of the error terms), but I didn't. Can someone tell me why the Wikipedia doesn't list the variance of error terms:

$$\operatorname{MSE}(\hat{\theta})=\operatorname{Var}(\hat{\theta})+ \left(\operatorname{Bias}(\hat{\theta},\theta)\right)^2$$