I have an unbalanced data set and use Cohen's kappa and AUC as performance measures.

Without downsampling, the kappa value is around 0.85; with random downsampling it is 0.95; and with a house-made focused downsampling it is approximately 0.75. The AUC for the three cases is 0.998 without downsampling, 0.998 with random downsampling, and 0.921 with house-made downsampling. Which data would you use to train the classifier?

I'm suspicious that random downsampling increases the kappa value.
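To make the suspicion concrete, here is a minimal sketch of one possible explanation. It assumes that kappa was computed on data with the same class ratio as the training set (the downsampled data), which may or may not match the actual setup. The function `kappa_at_prevalence` and the sensitivity and specificity values are hypothetical and chosen only for illustration. The sketch keeps the classifier's sensitivity and specificity fixed and changes only the share of the minority class, then computes Cohen's kappa from the resulting confusion matrix.

```python
def kappa_at_prevalence(sensitivity, specificity, prevalence):
    """Cohen's kappa for a binary classifier with fixed sensitivity and
    specificity, evaluated on data where `prevalence` is the fraction
    of positive samples. All cells are expressed as proportions."""
    tp = sensitivity * prevalence
    fn = (1.0 - sensitivity) * prevalence
    tn = specificity * (1.0 - prevalence)
    fp = (1.0 - specificity) * (1.0 - prevalence)

    observed = tp + tn  # observed agreement
    # Chance agreement: product of the marginals, summed over both classes.
    expected = (tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)
    return (observed - expected) / (1.0 - expected)


# Hypothetical classifier quality, identical in both scenarios.
SENS, SPEC = 0.95, 0.99

for label, prev in [("unbalanced (5% positives)", 0.05),
                    ("balanced (50% positives)", 0.50)]:
    print(f"{label}: kappa = {kappa_at_prevalence(SENS, SPEC, prev):.3f}")
```

Under these assumptions the same classifier gets a higher kappa on the balanced data than on the unbalanced data, because the chance-agreement term shrinks as the classes become more even. AUC is rank-based and is not expected to depend on the class ratio in this way, which would be consistent with it staying at 0.998 in both cases. If that is what is happening, the jump from 0.85 to 0.95 would reflect the evaluation data rather than a better classifier, and comparing the three models on one common held-out set with the original class ratio would be a fairer test.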

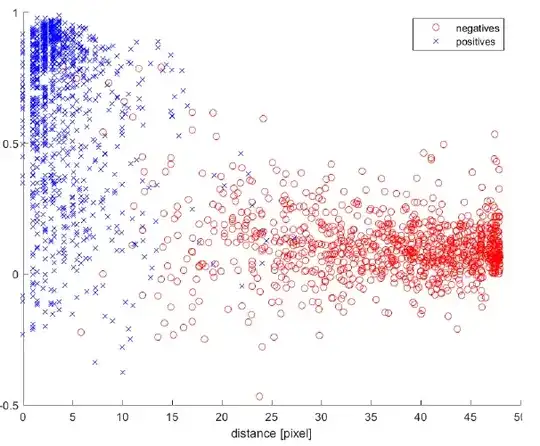

The full data set looks like this

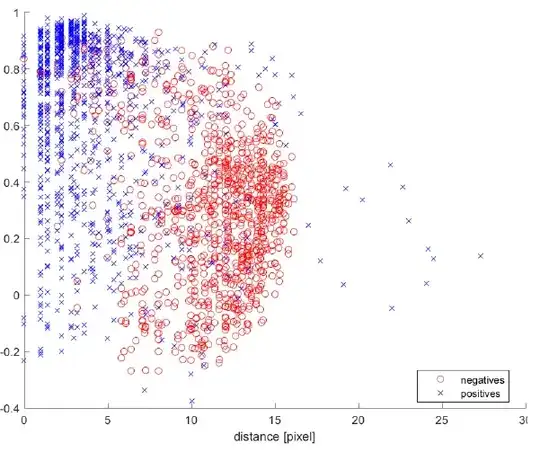

When I randomly downsample, it looks like this

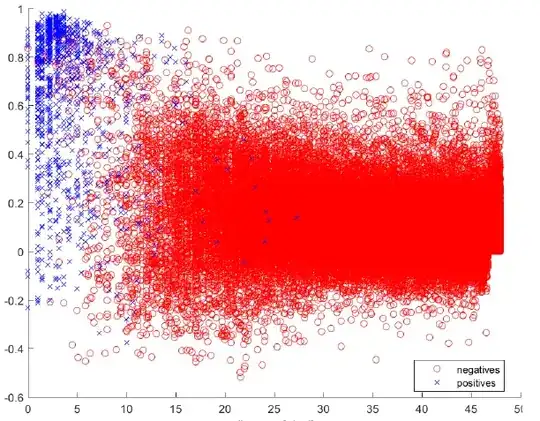

And with a house-made, focused downsampling it looks like this