It is possible and sometimes appropriate to use a subset of the principal components as explanatory variables in a linear model rather than the original variables. The resulting coefficients then need to be back-transformed to apply to the original variables. The resulting estimates are biased but may be superior to those from more straightforward techniques.

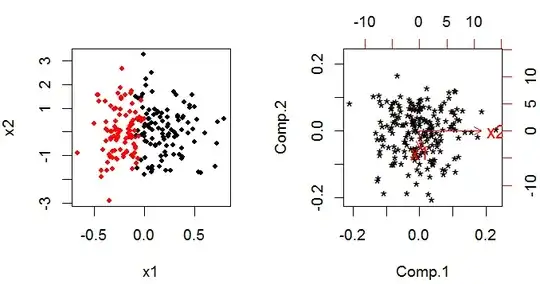

PCA delivers a set of principal components that are linear combinations of the original variables. If you have $k$ original variables you still have $k$ principal components in the end, but they have been rotated through $k$-dimensional space so they are orthogonal to (i.e. uncorrelated with) each other. This is easiest to think through with just two variables.
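To make the two-variable case concrete, here is a minimal sketch using only the Python standard library. The data are simulated purely for illustration, and the helper names (`centre`, `cov`) are my own. It rotates two correlated variables onto their principal axes and checks that the rotated components are uncorrelated while the total variance is unchanged.

```python
import math
import random

random.seed(0)
# Two correlated variables: x2 is x1 plus noise (made-up illustrative data).
x1 = [random.gauss(0, 1) for _ in range(200)]
x2 = [a + random.gauss(0, 0.5) for a in x1]

def centre(v):
    """Subtract the mean from every element."""
    m = sum(v) / len(v)
    return [a - m for a in v]

def cov(u, v):
    """Sample covariance of two already-centred lists."""
    return sum(a * b for a, b in zip(u, v)) / (len(u) - 1)

c1, c2 = centre(x1), centre(x2)
s11, s22, s12 = cov(c1, c1), cov(c2, c2), cov(c1, c2)

# Angle of the first principal axis of the 2x2 covariance matrix.
theta = 0.5 * math.atan2(2 * s12, s11 - s22)

# Rotate the centred data; each component is a linear combination of x1 and x2.
pc1 = [math.cos(theta) * a + math.sin(theta) * b for a, b in zip(c1, c2)]
pc2 = [-math.sin(theta) * a + math.cos(theta) * b for a, b in zip(c1, c2)]

print("cov(x1, x2)   =", s12)
print("cov(pc1, pc2) =", cov(pc1, pc2))   # zero up to rounding error
print("total variance before:", s11 + s22)
print("total variance after: ", cov(pc1, pc1) + cov(pc2, pc2))
```

With more than two variables the same idea applies, but the rotation is found from an eigendecomposition (or SVD) rather than a single angle.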

The trick to using PCA results in a linear model is that you make a decision to eliminate a certain number of the principal components. This decision is based on similar criteria to the "usual" black-art variable selection processes for building models.
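As a rough sketch of the whole procedure for an ordinary linear model (not a definitive implementation), the NumPy code below does the PCA, drops the low-variance components, regresses on the retained scores, and back-transforms the coefficients to the original variables. The function name `pcr_fit` and the simulated data are my own assumptions. I only centre the variables here; in practice you would often standardise them as well.

```python
import numpy as np

def pcr_fit(X, y, n_components):
    """Principal components regression for a linear model.

    Returns (intercept, beta) expressed on the scale of the original variables.
    """
    x_mean = X.mean(axis=0)
    Xc = X - x_mean
    # Rows of Vt are the principal axes, ordered by decreasing variance.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    V = Vt[:n_components].T                 # k x m loadings of retained components
    Z = Xc @ V                              # n x m component scores
    # Least-squares regression of centred y on the retained scores.
    gamma, *_ = np.linalg.lstsq(Z, y - y.mean(), rcond=None)
    beta = V @ gamma                        # back-transform to original variables
    intercept = y.mean() - x_mean @ beta
    return intercept, beta

# Simulated data (illustrative only): the first two columns are nearly collinear.
rng = np.random.default_rng(1)
n = 200
z = rng.normal(size=n)
X = np.column_stack([
    z + 0.05 * rng.normal(size=n),
    z + 0.05 * rng.normal(size=n),
    rng.normal(size=n),
])
y = 1.0 + X @ np.array([1.0, 1.0, 0.5]) + rng.normal(size=n)

# Ordinary least squares for comparison.
ols, *_ = np.linalg.lstsq(np.column_stack([np.ones(n), X]), y, rcond=None)

_, beta_full = pcr_fit(X, y, 3)   # keeping every component reproduces OLS
_, beta_two = pcr_fit(X, y, 2)    # dropping the smallest component

print("OLS slopes:       ", ols[1:])
print("PCR, 3 components:", beta_full)
print("PCR, 2 components:", beta_two)
```

Keeping all $k$ components just reproduces least squares. The estimates only change, becoming biased but typically more stable, once you discard components.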

The method is used to deal with multicollinearity. It is reasonably common in linear regression with a Normal response and an identity link function from the linear predictor to the response, but less common with a generalized linear model. There is at least one article on the issues on the web.

I'm not aware of any user-friendly software implementations. It would be fairly straightforward to do the PCA, use the resulting principal components as your explanatory variables in a generalized linear model, and then translate back to the original scale. Estimating the distribution (variance, bias and shape) of your estimators having done this would be tricky, however: the standard output from your generalized linear model will be wrong because it assumes you are dealing with original observations. You could build a bootstrap around the whole procedure (PCA and glm combined), which would be feasible in either R or SAS.
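Here is a hedged sketch of such a bootstrap, written in Python with NumPy rather than R or SAS. To keep it self-contained I have used an ordinary least-squares fit in place of the glm. For a real generalized linear model you would replace that step with your glm fit. The names `pcr_beta` and `bootstrap_pcr` and the simulated data are my own assumptions. The important point is that the PCA is redone inside every resample, so the variability of the rotation itself is reflected in the results.

```python
import numpy as np

def pcr_beta(X, y, m):
    """Slopes on the original scale from regressing y on the first m components."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    V = Vt[:m].T
    gamma, *_ = np.linalg.lstsq(Xc @ V, y - y.mean(), rcond=None)
    return V @ gamma

def bootstrap_pcr(X, y, m, n_boot=500, seed=0):
    """Resample rows with replacement and repeat PCA + regression each time."""
    rng = np.random.default_rng(seed)
    n = len(y)
    draws = np.empty((n_boot, X.shape[1]))
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)          # one bootstrap resample of rows
        draws[b] = pcr_beta(X[idx], y[idx], m)    # whole procedure on the resample
    return draws

# Simulated data (illustrative only): the first two columns are nearly collinear.
rng = np.random.default_rng(1)
n = 200
z = rng.normal(size=n)
X = np.column_stack([
    z + 0.05 * rng.normal(size=n),
    z + 0.05 * rng.normal(size=n),
    rng.normal(size=n),
])
y = 1.0 + X @ np.array([1.0, 1.0, 0.5]) + rng.normal(size=n)

draws = bootstrap_pcr(X, y, m=2)
se = draws.std(axis=0, ddof=1)                        # bootstrap standard errors
lower, upper = np.percentile(draws, [2.5, 97.5], axis=0)  # percentile intervals

print("point estimate:", pcr_beta(X, y, 2))
print("bootstrap SE:  ", se)
print("95% interval:  ", list(zip(lower, upper)))
```

The number of retained components is fixed at `m` here. If you choose it by looking at the data, that choice belongs inside the loop too, otherwise the intervals will be too narrow.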