Because I run into complete separation with ordinary logistic regression, I am trying to fit a penalized logistic regression for a binomial response variable.
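
To illustrate why separation motivates a penalty, here is a minimal Python sketch (not MATLAB, and not how `lassoglm` works internally) on a hypothetical one-predictor data set with complete separation. It fits a no-intercept logistic model by plain gradient descent, once without a penalty and once with an L2 (ridge) penalty chosen only for simplicity. The function name `fit_slope`, the toy data, the step size and `lam = 0.1` are all assumptions made for illustration. Without the penalty the slope keeps growing the longer the optimiser runs, because the maximum-likelihood estimate does not exist; with the penalty it settles at a finite value.

```python
import math

def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_slope(x, y, lam, steps, lr=0.5):
    """Hypothetical helper: gradient descent on
    mean logistic loss + (lam / 2) * b**2
    for a single-predictor, no-intercept model P(y = 1) = sigmoid(b * x)."""
    b = 0.0
    n = len(x)
    for _ in range(steps):
        # gradient of the mean negative log-likelihood
        grad = sum((sigmoid(b * xi) - yi) * xi for xi, yi in zip(x, y)) / n
        grad += lam * b  # gradient of the ridge penalty (zero when lam = 0)
        b -= lr * grad
    return b

# Hypothetical toy data with complete separation:
# every x < 0 has y = 0 and every x > 0 has y = 1
x = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
y = [0, 0, 0, 0, 1, 1, 1, 1]

for steps in (1_000, 10_000):
    print(steps, fit_slope(x, y, 0.0, steps), fit_slope(x, y, 0.1, steps))
```

Running the loop should show the unpenalized slope still increasing between 1,000 and 10,000 iterations, while the penalized slope no longer changes.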

However, it does not seem to work for my data. In an example where it does work, a plot of the deviance versus lambda looks like this:

The green point marks the lambda with the minimum cross-validated deviance, and the blue point marks the largest lambda whose deviance is within one standard error of that minimum.
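
The selection rule behind the two points can be sketched as follows, assuming the cross-validation output is available as three parallel lists (lambda values, mean deviance, and standard error of the deviance). The function name `select_lambdas` and all numbers are hypothetical, not taken from my data. In MATLAB, the corresponding values should be the `LambdaMinDeviance` and `Lambda1SE` fields of the `FitInfo` struct that `lassoglm` returns when cross-validation is used.

```python
def select_lambdas(lambdas, mean_dev, se_dev):
    """Hypothetical helper returning (lambda_min, lambda_1se).

    lambda_min: the lambda with the smallest mean cross-validated deviance.
    lambda_1se: the largest lambda whose mean deviance is within one
                standard error (taken at lambda_min) of that minimum.
    """
    i_min = min(range(len(lambdas)), key=lambda i: mean_dev[i])
    threshold = mean_dev[i_min] + se_dev[i_min]
    # lambda_min itself satisfies the condition, so lambda_1se >= lambda_min
    lambda_1se = max(lam for lam, d in zip(lambdas, mean_dev) if d <= threshold)
    return lambdas[i_min], lambda_1se

# Hypothetical cross-validation results
lambdas = [0.001, 0.01, 0.05, 0.1, 0.5]
mean_dev = [0.60, 0.55, 0.57, 0.62, 0.90]
se_dev = [0.04, 0.03, 0.03, 0.04, 0.05]
print(select_lambdas(lambdas, mean_dev, se_dev))
```

Here the minimum is at 0.01 and the one-standard-error rule moves to the more strongly penalized 0.05. If, instead, a hypothetical deviance curve has its minimum at lambda = 0 and rises steeply from there, both rules return 0.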

In this case I can choose either of the two points. For my data set, however, it looks like this:

1) Why might shrinkage not work here? The model apparently suggests using lambda = 0, and I have no idea why. For the interested reader, I give more details below:

Data used: my data are highly imbalanced, show complete separation, and have two predictors that are linearly correlated. These two predictors are the ones that cause the complete separation.
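
Separation caused by a single predictor can be checked directly: it is complete when that predictor's values for the two response classes do not overlap at all. Below is a minimal sketch that checks one predictor at a time on a hypothetical, highly imbalanced toy data set; the function name `separation_type` is an assumption. It will not detect separation that only appears through a combination of predictors (for example a linear combination of the two correlated ones), which needs a linear-programming check instead.

```python
def separation_type(x, y):
    """Hypothetical helper: classify how one predictor x separates a 0/1 response y.

    'complete'       : a threshold puts all 0s strictly on one side and all 1s on the other
    'quasi-complete' : the same, except points of both classes sit exactly on the threshold
    'none'           : the value ranges of the two classes overlap
    """
    x0 = [xi for xi, yi in zip(x, y) if yi == 0]
    x1 = [xi for xi, yi in zip(x, y) if yi == 1]
    if max(x0) < min(x1) or max(x1) < min(x0):
        return "complete"
    if max(x0) <= min(x1) or max(x1) <= min(x0):
        return "quasi-complete"
    return "none"

# Hypothetical, highly imbalanced data: 8 zeros and 2 ones
x = [0.1, 0.4, 0.3, 0.2, 0.5, 0.35, 0.15, 0.25, 0.9, 1.2]
y = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
print(separation_type(x, y))
print(sum(y) / len(y))  # share of ones in the response
```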

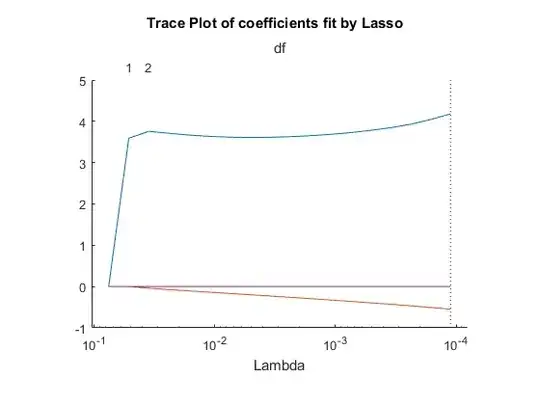

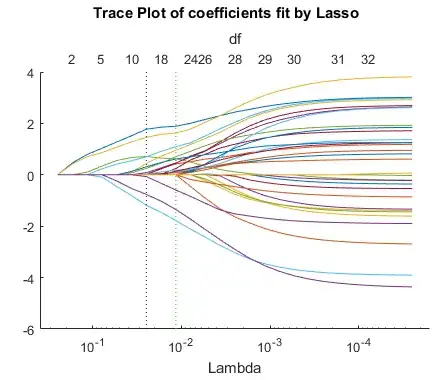

Another plot worth analysing is the trace plot, shown below for an example where it works. The trace plot shows the nonzero model coefficients as a function of the regularization parameter Lambda. Because this example has 32 predictors and a linear model, there are 32 curves. As Lambda increases to the left, lassoglm sets various coefficients to zero, removing them from the model.
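
A trace plot comes from refitting the L1-penalized model over a grid of Lambda values and recording the coefficients at each value. The following is a minimal Python sketch of that idea using proximal gradient descent (ISTA) with soft-thresholding, which is a swapped-in solver and not necessarily the one `lassoglm` uses. It minimises the mean negative log-likelihood plus `lam` times the L1 norm of the slopes, with an unpenalized intercept. That scaling may differ from `lassoglm`'s, so the lambda values should not be expected to match. The function names, step size, iteration count and two-predictor toy data are hypothetical. For any `lam` at or above `lambda_max(X, y)` every slope is exactly zero, which is why all curves in a trace plot reach zero at the large-Lambda end.

```python
import math

def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def soft_threshold(v, t):
    # proximal operator of t*|v|: shrink v towards 0, exactly 0 when |v| <= t
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0

def lambda_max(X, y):
    # smallest lam at which all slopes are zero (intercept left unpenalized)
    n, ybar = len(y), sum(y) / len(y)
    return max(abs(sum((ybar - yi) * row[j] for row, yi in zip(X, y))) / n
               for j in range(len(X[0])))

def lasso_logistic(X, y, lam, lr=0.1, steps=5000):
    """Hypothetical helper: ISTA on mean logistic loss + lam * sum_j |b_j|,
    with an unpenalized intercept b0."""
    n, p = len(X), len(X[0])
    b0, b = 0.0, [0.0] * p
    for _ in range(steps):
        # residuals p_i - y_i of the current fit
        resid = [sigmoid(b0 + sum(bj * xj for bj, xj in zip(b, row))) - yi
                 for row, yi in zip(X, y)]
        g0 = sum(resid) / n
        g = [sum(r * row[j] for r, row in zip(resid, X)) / n for j in range(p)]
        b0 -= lr * g0  # plain gradient step for the intercept
        # gradient step followed by soft-thresholding for the penalized slopes
        b = [soft_threshold(bj - lr * gj, lr * lam) for bj, gj in zip(b, g)]
    return b0, b

# Hypothetical toy data: two predictors, overlapping classes (no separation)
X = [[-2.0, 1.0], [-1.0, -1.0], [-0.5, 0.5], [0.0, -0.5], [0.5, 1.0],
     [1.0, -1.0], [2.0, 0.5], [-1.5, 0.0], [0.2, 0.0], [1.2, 0.3]]
y = [0, 1, 0, 0, 1, 0, 1, 0, 1, 1]

print("lambda_max =", lambda_max(X, y))
for lam in (1.0, 0.2, 0.05, 0.01):  # from large to small lambda
    _, b = lasso_logistic(X, y, lam)
    print(lam, [round(v, 3) for v in b])
```

Plotting each slope against `lam` gives the trace; coefficients enter the model as `lam` drops below `lambda_max`.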

Again, however, my data set with four features (two of them correlated) does not yield a useful plot: