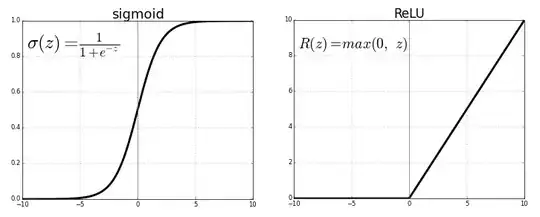

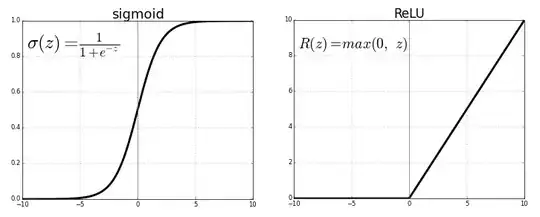

ReLU i.e. Rectified Linear Unit and tanh both are non-linear activation function applied to neural layer. Both have their own importance. It only depends on the problem in hand that we want to solve and the output that we want. Sometimes people prefer to use ReLU over tanh because ReLU involves less computation.

When I started studying Deep Learning, I had the question Why do we not just use linear activation function instead of non-linear? Answer is output will be just linear combination of input and hidden layer will have no effect and so hidden layer will not be able to learn important feature.

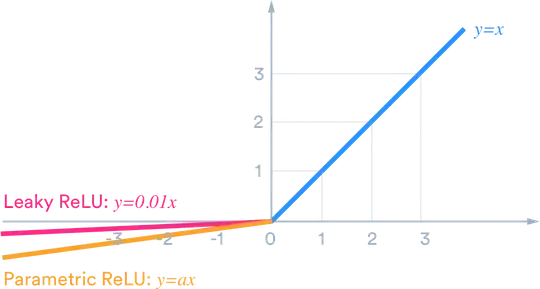

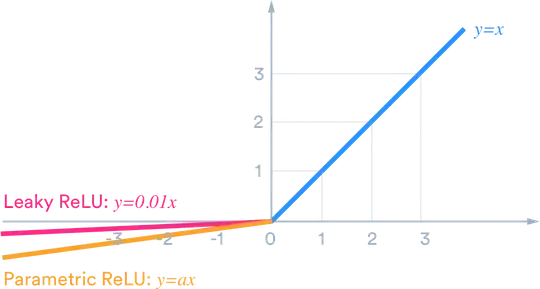

For example, if we want the output to lie within (-1,1) then we need tanh. If we need output between (0,1) then use sigmoid function . In case of ReLU it will give max{0,x}.There are many other activation functions like leaky ReLU.

Now in order to choose appropriate activation function for our purpose to give better result it is just a matter of experiment and practice which is known as tuning in data science world.

In your case, you may need to tune your parameter which is known as parameter tuning like number of neurons in hidden layers, number of layers etc.

Does ReLU layer work well for a shallow network?

Yes,of course ReLU layer work well for a shallow network.