I've recently been reviewing some older papers by Nancy Reid, Ole Barndorff-Nielsen, David Cox and, yes, a little Ronald Fisher on "conditional inference" in the frequentist paradigm. This appears to mean basing inferences only on the "relevant subset" of the sample space rather than on the entire sample space.

As a key example, it is known that the confidence interval based on the $t$-statistic can be improved (Goutis & Casella, 1992) by also taking into account the sample coefficient of variation (referred to as an ancillary statistic).

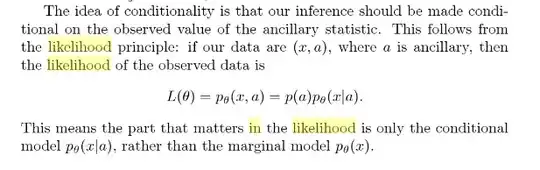
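A minimal Monte Carlo sketch of the relevant-subset idea behind this follows. The setup is my own illustrative assumption, not the construction in Goutis & Casella: $n = 2$ normal observations, the nominal 50% $t$ interval (its critical value $t_{0.75,1}$ equals 1, so the interval is simply $[\min(x_1, x_2), \max(x_1, x_2)]$), and the subset $\{|\bar{x}|/s \le 1.5\}$. The function name and the cutoff are hypothetical.

```python
import math
import random


def t_interval_coverage(mu, sigma, cutoff, n_sims=200_000, seed=0):
    """Monte Carlo coverage of the nominal 50% Student t interval with n = 2.

    With n = 2 the interval xbar +/- t_{0.75,1} * s / sqrt(2) has t_{0.75,1} = 1
    (the 75% point of the Cauchy distribution), so it is [min(x1, x2), max(x1, x2)].
    Returns (unconditional coverage, coverage within {|xbar| / s <= cutoff},
    probability of that subset).
    """
    rng = random.Random(seed)
    covered = in_subset = covered_in_subset = 0
    for _ in range(n_sims):
        x1 = rng.gauss(mu, sigma)
        x2 = rng.gauss(mu, sigma)
        xbar = (x1 + x2) / 2
        s = abs(x1 - x2) / math.sqrt(2)  # sample SD with n - 1 = 1 degree of freedom
        hit = min(x1, x2) <= mu <= max(x1, x2)
        covered += hit
        if abs(xbar) <= cutoff * s:  # same as |xbar| / s <= cutoff, without dividing
            in_subset += 1
            covered_in_subset += hit
    return covered / n_sims, covered_in_subset / in_subset, in_subset / n_sims


uncond, cond, frac = t_interval_coverage(mu=0.0, sigma=1.0, cutoff=1.5)
print(f"unconditional {uncond:.3f}, within subset {cond:.3f}, P(subset) {frac:.3f}")
```

At $\mu = 0$ the unconditional coverage should come out close to 0.5, while within the subset it should be roughly 0.69, since a short calculation gives $0.5 / \{2\arctan(1.5\sqrt{2})/\pi\} \approx 0.69$. This is only one parameter value, so it shows that the subset is "relevant," not how large the effect is in general.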

As someone who regularly uses likelihood-based inference, I have assumed that when I form an asymptotic $100(1-\alpha)\%$ confidence interval, I am performing (approximate) conditional inference, since the likelihood is conditional on the observed sample.

My question is this: apart from conditional logistic regression, I have not seen much use of conditioning on ancillary statistics prior to inference. Is this type of inference restricted to exponential families, or does it go by another name nowadays, so that it only appears to be limited?

I found a more recent article (Spanos, 2011) that seems to cast serious doubt on the approach taken by conditional inference (i.e., ancillarity). Instead, it makes the very sensible, and less mathematically convoluted, suggestion that parametric inference in "irregular" cases (where the support of the distribution depends on the parameters) can be handled by truncating the usual, unconditional sampling distribution.
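The kind of irregular model in question can be illustrated with the Welch uniform model named in the title of Spanos's paper. The sketch below assumes $n = 2$ observations from $\mathrm{Uniform}(\theta - 1/2, \theta + 1/2)$. The unconditional 95% interval $\bar{x} \pm c$ uses the triangular distribution of $\bar{x} - \theta$, for which $P(|\bar{x} - \theta| > c) = (1 - 2c)^2$, so $c = (1 - \sqrt{0.05})/2 \approx 0.388$. The sketch then tallies the coverage within bands of the sample range $r = |x_1 - x_2|$, whose distribution does not depend on $\theta$. It only illustrates the problem; it is not Spanos's truncation procedure, and the function name and the bands are hypothetical.

```python
import math
import random


def welch_conditional_coverage(r_lo, r_hi, theta=0.0, n_sims=200_000, seed=0):
    """Coverage of the unconditional 95% interval xbar +/- c for the Welch uniform
    model with n = 2, among samples whose range r = |x1 - x2| lies in [r_lo, r_hi].
    """
    # xbar - theta is triangular on [-1/2, 1/2] with P(|xbar - theta| > c) = (1 - 2c)^2
    c = (1 - math.sqrt(0.05)) / 2  # about 0.388
    rng = random.Random(seed)
    covered = kept = 0
    for _ in range(n_sims):
        x1 = rng.uniform(theta - 0.5, theta + 0.5)
        x2 = rng.uniform(theta - 0.5, theta + 0.5)
        r = abs(x1 - x2)  # ancillary: its distribution does not involve theta
        if r_lo <= r <= r_hi:
            kept += 1
            covered += abs((x1 + x2) / 2 - theta) <= c
    return covered / kept


for band in [(0.0, 1.0), (0.0, 0.1), (0.5, 1.0)]:
    print(band, round(welch_conditional_coverage(*band), 3))
```

Over all samples the coverage should be close to 0.95. However, it is exactly 1 when $r \ge 0.5$, because given $r$ the parameter $\theta$ must lie within $(1 - r)/2$ of $\bar{x}$. It is only about 0.82 when $r \le 0.1$. The interval therefore over-covers in some recognisable subsets and under-covers in others.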

Fraser (2004) gives a nice defense of conditionality. However, I am still left with the feeling that more than a little luck and ingenuity are required to actually apply conditional inference to complex cases, certainly more than is needed to invoke the chi-squared approximation to the likelihood ratio statistic for "approximate" conditional inference.
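For comparison, the chi-squared route really is mechanical. The sketch below assumes an i.i.d. exponential model with rate $\lambda$ and log-likelihood $\ell(\lambda) = n \log\lambda - \lambda \sum_i x_i$. It keeps every $\lambda$ whose likelihood ratio statistic $2\{\ell(\hat\lambda) - \ell(\lambda)\}$ falls below the 95% point of $\chi^2_1$, and it finds the two endpoints by bisection. The model, the function names and the data are illustrative assumptions.

```python
import math

CHI2_1_95 = 3.841458820694124  # 95% point of chi-squared with 1 df (1.959964^2)


def exp_loglik(rate, data):
    """Log-likelihood of an i.i.d. exponential sample with the given rate."""
    return len(data) * math.log(rate) - rate * sum(data)


def lr_interval_exponential_rate(data, crit=CHI2_1_95, tol=1e-10):
    """Return (MLE, lower, upper) for {rate : 2 * (l(MLE) - l(rate)) <= crit}."""
    mle = len(data) / sum(data)
    l_max = exp_loglik(mle, data)

    def excess(rate):
        # LR statistic minus the critical value: <= 0 inside the interval
        return 2 * (l_max - exp_loglik(rate, data)) - crit

    def bisect(lo, hi):
        # Assumes excess(lo) and excess(hi) have opposite signs
        f_lo = excess(lo)
        while hi - lo > tol * mle:
            mid = 0.5 * (lo + hi)
            f_mid = excess(mid)
            if (f_mid > 0) == (f_lo > 0):
                lo, f_lo = mid, f_mid
            else:
                hi = mid
        return 0.5 * (lo + hi)

    # Bracket each endpoint: move away from the MLE until the LR statistic exceeds crit
    below = mle / 2
    while excess(below) <= 0:
        below /= 2
    above = 2 * mle
    while excess(above) <= 0:
        above *= 2
    return mle, bisect(below, mle), bisect(mle, above)


print(lr_interval_exponential_rate([0.5, 1.2, 0.3, 2.0, 0.8, 1.1, 0.4, 0.9, 1.5, 0.7]))
```

For that sample the MLE is $10/9.4 \approx 1.064$, and the interval is roughly $(0.53, 1.87)$. Unlike the Wald interval $\hat\lambda \pm 1.96\,\hat\lambda/\sqrt{n}$, it is not symmetric about the MLE.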

Welsh (2011, p. 163) may have answered my question (see 3.9.5 and 3.9.6).

They point out Basu's well-known observation that a model can have more than one ancillary statistic, which raises the question of which "relevant subset" is the most relevant. Even worse, they give two examples in which, even when there is a unique ancillary statistic, other relevant subsets are still present.
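A standard textbook illustration of non-unique ancillaries (not necessarily one of Welsh's examples) uses i.i.d. pairs $(X_i, Y_i)$ from a bivariate normal with zero means, unit variances and unknown correlation $\rho$. Each of $A = \sum_i X_i^2$ and $B = \sum_i Y_i^2$ is $\chi^2_n$ whatever the value of $\rho$, so each is ancillary on its own. The pair $(A, B)$ is not ancillary, however, because $\operatorname{corr}(A, B) = \rho^2$. The simulation below is a minimal sketch of this; the sample size, the replication count and the function names are arbitrary choices.

```python
import random
import statistics


def simulate_sums(rho, n=5, n_sims=20_000, seed=0):
    """Simulate A = sum X_i^2 and B = sum Y_i^2 for n i.i.d. standard bivariate
    normal pairs with correlation rho."""
    rng = random.Random(seed)
    a_vals, b_vals = [], []
    for _ in range(n_sims):
        a = b = 0.0
        for _ in range(n):
            x = rng.gauss(0.0, 1.0)
            # Y = rho * X + sqrt(1 - rho^2) * Z has unit variance and corr(X, Y) = rho
            y = rho * x + (1.0 - rho * rho) ** 0.5 * rng.gauss(0.0, 1.0)
            a += x * x
            b += y * y
        a_vals.append(a)
        b_vals.append(b)
    return a_vals, b_vals


def sample_corr(u, v):
    """Pearson correlation, written out to avoid version-specific helpers."""
    mu, mv = statistics.fmean(u), statistics.fmean(v)
    cov = sum((p - mu) * (q - mv) for p, q in zip(u, v))
    su = sum((p - mu) ** 2 for p in u) ** 0.5
    sv = sum((q - mv) ** 2 for q in v) ** 0.5
    return cov / (su * sv)


for rho in (0.0, 0.9):
    a, b = simulate_sums(rho)
    # Means of A and B stay near n = 5 for every rho; corr(A, B) tracks rho^2.
    print(rho, round(statistics.fmean(a), 2), round(statistics.fmean(b), 2),
          round(sample_corr(a, b), 2))
```

Conditioning on $A$ and conditioning on $B$ therefore pick out different "relevant subsets," and the two cannot be combined into a single ancillary statistic.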

They go on to conclude that only Bayesian methods (or methods equivalent to them) can avoid this problem, allowing unproblematic conditional inference.

References:

- Goutis, Constantinos, and George Casella. "Increasing the confidence in Student's $t$ interval." The Annals of Statistics (1992): 1501-1513.

- Spanos, Aris. "Revisiting the Welch Uniform Model: A case for Conditional Inference?" Advances and Applications in Statistical Science 5 (2011): 33-52.

- Fraser, D. A. S. "Ancillaries and conditional inference." Statistical Science 19.2 (2004): 333-369.

- Welsh, Alan H. "Aspects of Statistical Inference." Vol. 916. John Wiley & Sons, 2011.