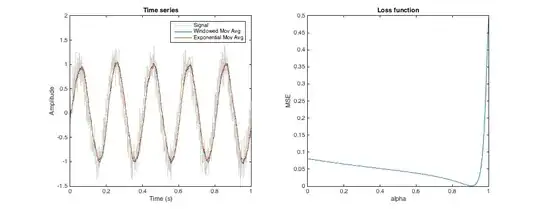

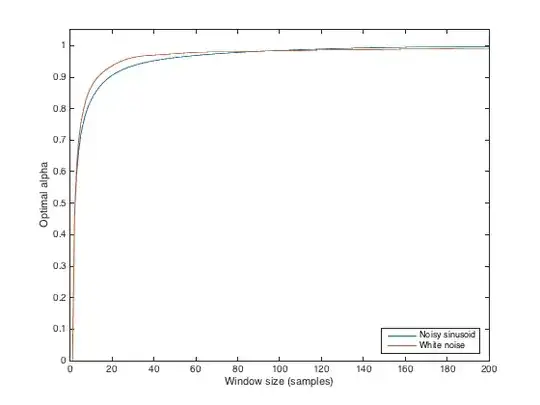

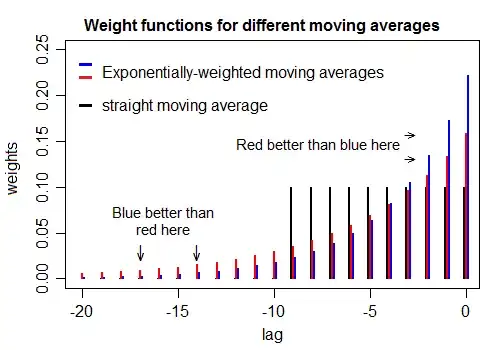

The alpha parameter of an exponential moving average defines the smoothing that the average applies to a time series. In a similar way, the window size of a moving window mean also defines the smoothing.

Is there some way to tune the alpha parameter such that the smoothing is approximately the same as that of a moving window mean of a given size? (Not looking for identical results, obviously, and offsets are OK). So, say tune alpha such that the resultant time series is as close as possible in shape to the result provided by a 3-month moving window?

edit: context: I'm trying to generate multiple proxy for soil moisture, from rainfall data, which abstractly represent different depths (which I'm assuming are related to long-term rainfall averages). A moving window allows me to calculate e.g total rainfall in the past 3 days, 3 months, or year, which might correspond to top few centimetres of soil, the top meter, and the extended soil column, respectively. However, a moving window requires data from the past, which isn't always available (e.g. at the start of a series). If an exponential average is used instead, then I only need to store one value for each average (the average from the previous time step), and this value can be initialised with the long-term mean.